Amazon SageMaker built-in LightGBM now offers distributed training using Dask

AWS Machine Learning Blog

JANUARY 30, 2023

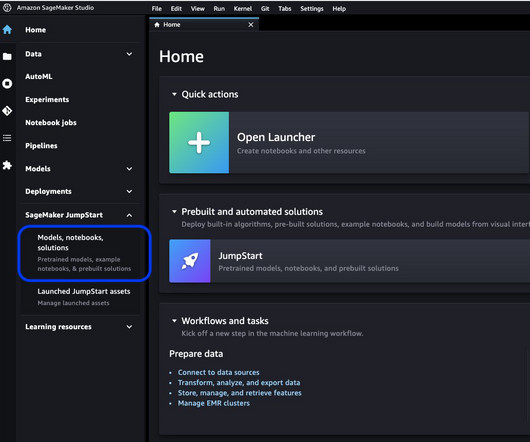

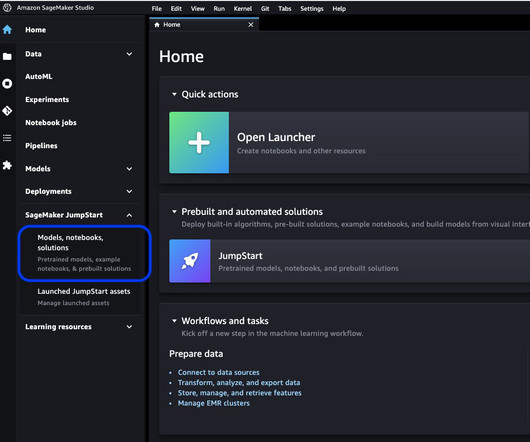

Amazon SageMaker provides a suite of built-in algorithms, pre-trained models, and pre-built solution templates to help data scientists and machine learning (ML) practitioners get started on training and deploying ML models quickly. You can use these algorithms and models for both supervised and unsupervised learning.
