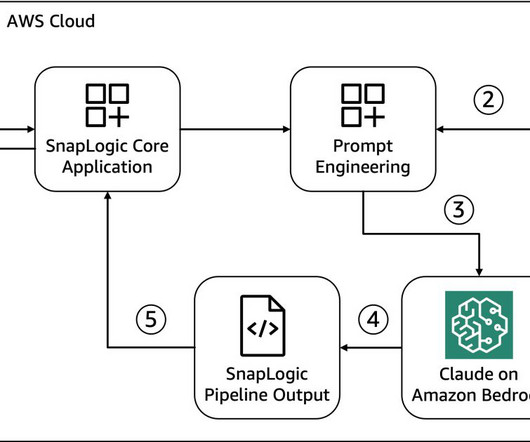

Unlocking generative AI for enterprises: How SnapLogic powers their low-code Agent Creator using Amazon Bedrock

AWS Machine Learning Blog

OCTOBER 23, 2024

Since joining SnapLogic in 2010, Greg has helped design and implement several key platform features including cluster processing, big data processing, the cloud architecture, and machine learning. He currently is working on Generative AI for data integration.

Let's personalize your content