Use AWS PrivateLink to set up private access to Amazon Bedrock

AWS Machine Learning Blog

OCTOBER 30, 2023

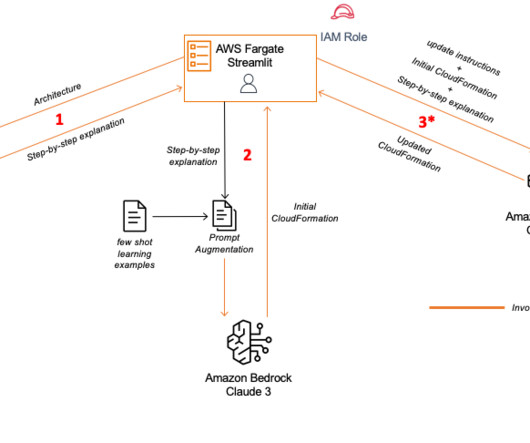

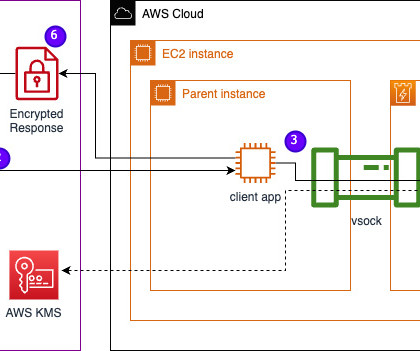

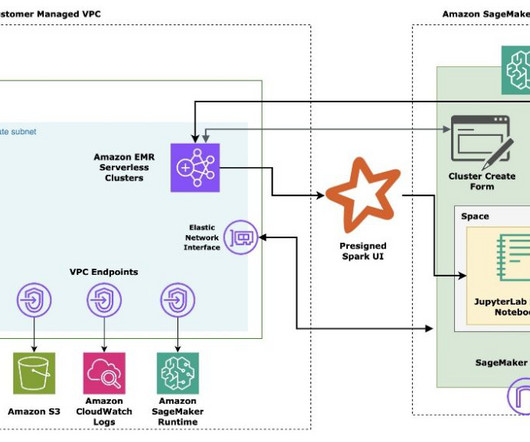

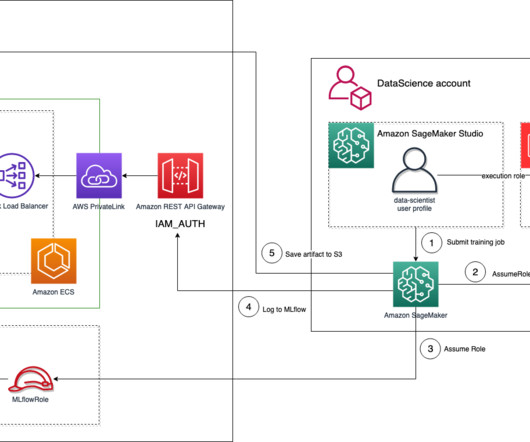

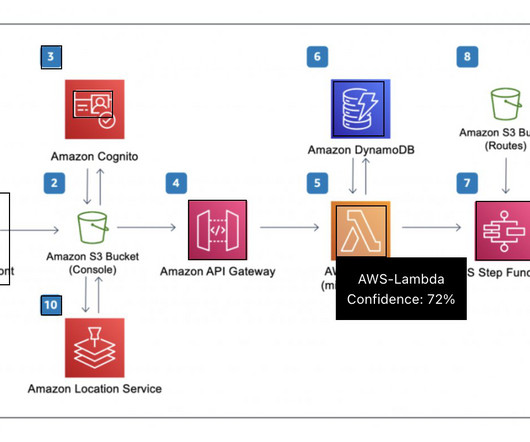

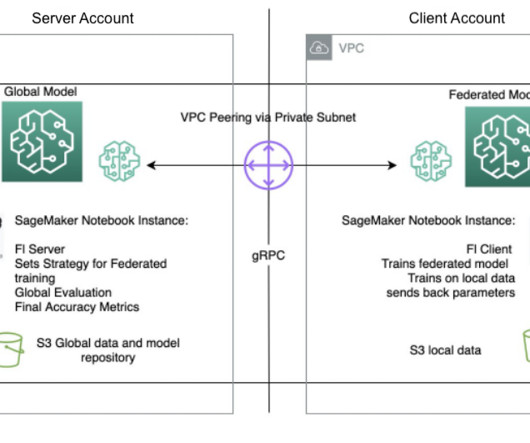

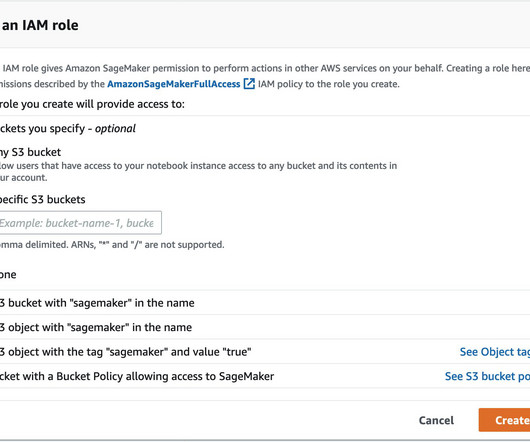

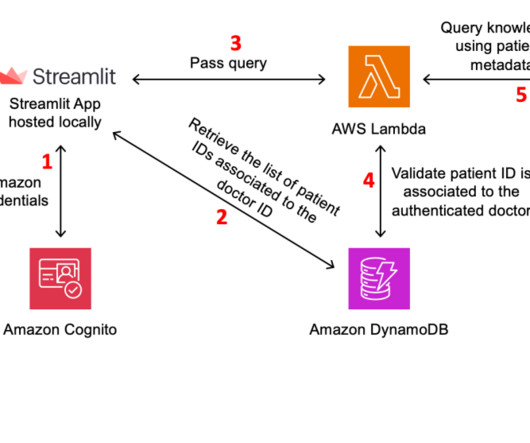

Amazon Bedrock is a fully managed AWS service that gives developers access to foundation models (FMs) and the tools to customize them for specific applications.

In this workflow, an AWS Lambda function running in your private VPC subnet receives the prompt request from the generative AI application.
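To make the Lambda step concrete, here is a minimal sketch of a handler that forwards the prompt to Amazon Bedrock with boto3. It rests on several assumptions that are not in the original post: the event carries a `prompt` field, the model is `anthropic.claude-v2` with its text-completion request shape, the Region is `us-east-1`, and the VPC has an interface endpoint for the Bedrock runtime with private DNS enabled, so the default endpoint name resolves to private addresses. The `client` parameter is a hypothetical hook added only so the handler can be exercised without network access.

```python
import io
import json

# Assumption: model ID and request shape are illustrative, not taken from the post.
MODEL_ID = "anthropic.claude-v2"


def make_client(region="us-east-1", endpoint_url=None):
    """Create a Bedrock runtime client.

    boto3 is imported lazily so the rest of the module can be tested offline.
    With private DNS enabled on the interface endpoint, endpoint_url can stay
    None; otherwise pass the endpoint-specific DNS name as an https URL.
    """
    import boto3

    return boto3.client(
        "bedrock-runtime", region_name=region, endpoint_url=endpoint_url
    )


def handler(event, context=None, client=None):
    """Receive a prompt from the application and invoke the model."""
    prompt = event["prompt"]  # assumed event field
    client = client or make_client()

    # Assumed request body for the Claude v2 text-completion interface.
    body = json.dumps(
        {
            "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
            "max_tokens_to_sample": 300,
        }
    )

    response = client.invoke_model(
        modelId=MODEL_ID,
        body=body,
        contentType="application/json",
        accept="application/json",
    )

    # The response body is a stream of JSON bytes.
    payload = json.loads(response["body"].read())
    return {
        "statusCode": 200,
        "body": json.dumps({"completion": payload.get("completion", "")}),
    }
```

Because the client is injectable, the handler can be checked locally with a stub that returns `{"body": io.BytesIO(...)}` before it is deployed into the private subnet.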
