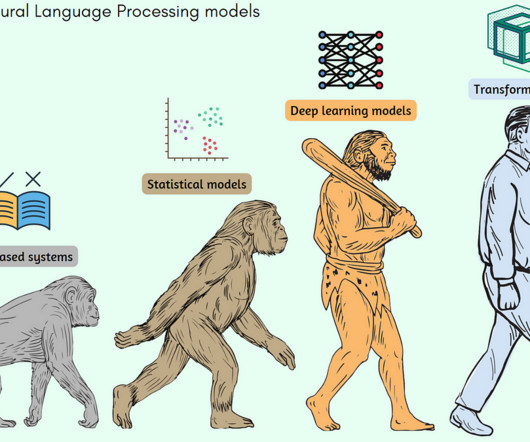

From Rulesets to Transformers: A Journey Through the Evolution of SOTA in NLP

Mlearning.ai

APRIL 8, 2023

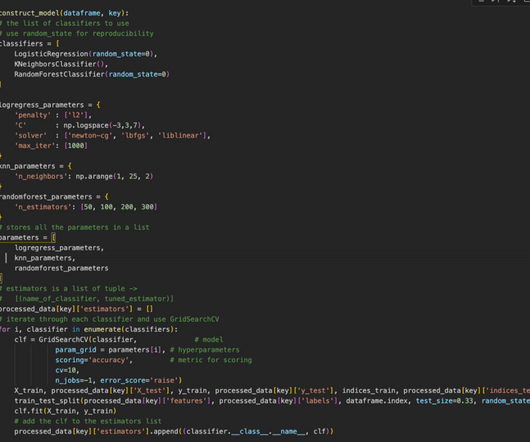

The earlier SOTA models for NLP were mainly traditional machine learning algorithms, including support vector machine (SVM) based models. [...] 2014) Significant people: Geoffrey Hinton, Yoshua Bengio, Ilya Sutskever.
