A review of purpose-built accelerators for financial services

AWS Machine Learning Blog

SEPTEMBER 11, 2024

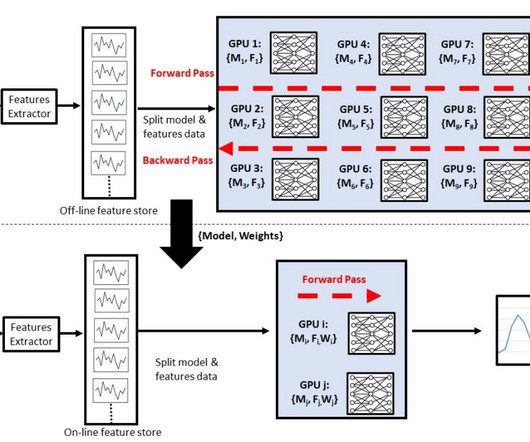

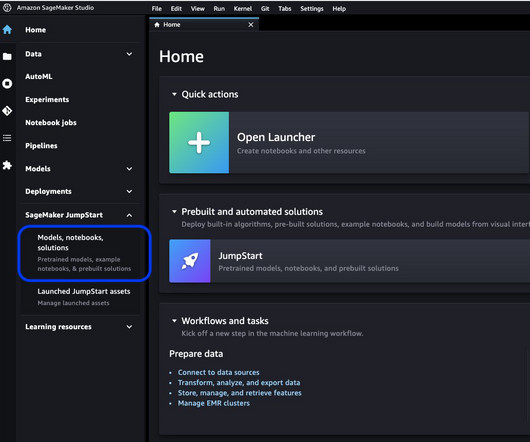

The following figure illustrates the idea of a large cluster of GPUs being used for learning, followed by a smaller number for inference. Examples of other PBAs now available include AWS Inferentia, AWS Trainium, Google TPU, and Graphcore IPU. Suppliers of data center GPUs include NVIDIA, AMD, Intel, and others.
