TAI #109: Cost and Capability Leaders Switching Places With GPT-4o Mini and Llama 3.1?

Towards AI

JULY 23, 2024

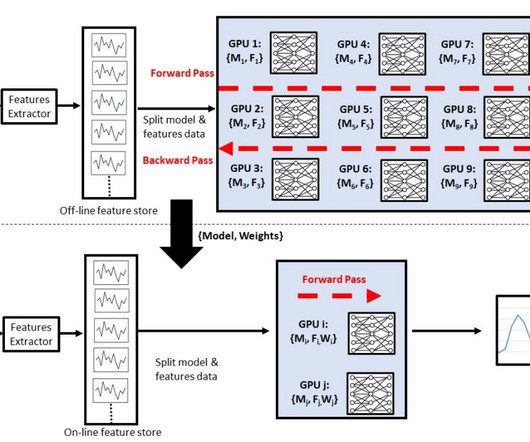

Competition at the leading edge of LLMs is heating up, and training LLMs keeps getting easier: large H100 clusters are now available at many companies, open datasets have been released, and many techniques, best practices, and frameworks have been discovered and shared.
