DeepMind

Dataconomy

MARCH 5, 2025

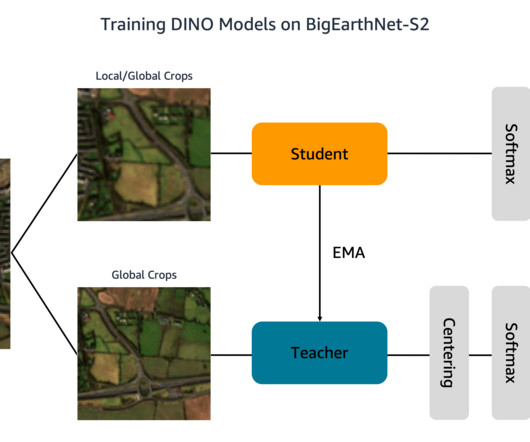

DeepMind is dedicated to creating systems that can learn and adapt, a fundamental step toward achieving artificial general intelligence (AGI).

Technology and methodology

DeepMind's approach revolves around sophisticated machine learning methods that enable AI systems to interact with their environment and learn from experience.
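To make "interact with its environment and learn from experience" concrete, here is a minimal, generic sketch of that idea using tabular Q-learning, a textbook reinforcement learning method. It is not DeepMind's code or method. The corridor environment and the names `step`, `q_learning`, and `greedy_policy`, along with all parameter values, are hypothetical choices made only for illustration.

```python
import random

# Hypothetical toy environment: a corridor of N_STATES cells.
# The agent starts in cell 0; reaching the last cell yields reward 1 and ends the episode.
N_STATES = 5
ACTIONS = (-1, +1)  # move left, move right


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)  # walls at both ends
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def q_learning(episodes: int = 200, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> list[list[float]]:
    """Learn action values q[state][action_index] purely from trial and error."""
    rng = random.Random(seed)
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                # Explore: try a random action.
                a = rng.randrange(len(ACTIONS))
            else:
                # Exploit: pick the best-valued action, breaking ties at random.
                best = max(q[state])
                a = rng.choice([i for i, v in enumerate(q[state]) if v == best])
            next_state, reward, done = step(state, ACTIONS[a])
            # Temporal-difference update toward reward + discounted best next value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q: list[list[float]]) -> list[int]:
    """Return the highest-valued action for every non-terminal state."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: row[i])] for row in q[:-1]]


if __name__ == "__main__":
    learned = q_learning()
    print(greedy_policy(learned))
```

The agent is never told that moving right is correct; it discovers this from the rewards it receives, which is the "learning from experience" the paragraph describes. Real systems replace the lookup table with neural networks and the corridor with far richer environments.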
