How to Make GridSearchCV Work Smarter, Not Harder

Mlearning.ai

SEPTEMBER 24, 2023

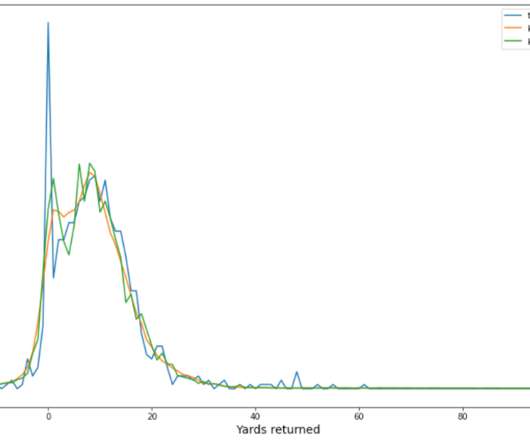

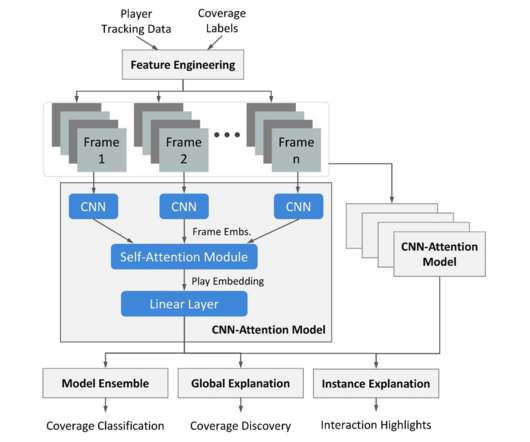

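A brute-force search is a general problem-solving technique and algorithm paradigm: it systematically enumerates every candidate solution and checks each one.

Figure 1: Brute Force Search

K-fold is a cross-validation technique: the data is split into k folds, and the model is trained on k − 1 of them and validated on the remaining one, rotating until every fold has served as the validation set once.

Figure 2: K-fold Cross Validation

On the one hand, this exhaustive approach is quite simple. Big O notation is a mathematical notation used to describe the complexity of algorithms, that is, how their cost grows as the input grows.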
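To make the cost of this brute-force approach concrete, here is a minimal pure-Python sketch of an exhaustive grid search scored with k-fold cross-validation. It only illustrates the idea. It is not scikit-learn's `GridSearchCV` implementation. The toy model `fit_ridge_1d`, the helper names, the parameter grid, and the noiseless data are all hypothetical, chosen only so the example runs without dependencies. The point is the `n_fits` counter: every combination in the grid is fitted once per fold.

```python
from itertools import product
from statistics import mean


def k_fold_indices(n, k):
    """Split range(n) into k contiguous folds (no shuffling) and return
    (train_idx, val_idx) pairs. Fold sizes differ by at most one."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        folds.append((train, val))
        start += size
    return folds


def fit_ridge_1d(xs, ys, alpha, fit_intercept):
    """Hypothetical toy model: 1-D ridge regression y ~ w*x + b,
    solved in closed form with L2 penalty alpha on the slope w."""
    mx, my = (mean(xs), mean(ys)) if fit_intercept else (0.0, 0.0)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    w = sxy / (sxx + alpha)
    b = my - w * mx
    return w, b


def grid_search_cv(xs, ys, param_grid, k=5):
    """Brute force: score every parameter combination with k-fold CV.
    Returns (best_params, best_score, n_fits)."""
    names = list(param_grid)
    best_params, best_score, n_fits = None, float("-inf"), 0
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        fold_scores = []
        for train, val in k_fold_indices(len(xs), k):
            w, b = fit_ridge_1d([xs[i] for i in train],
                                [ys[i] for i in train], **params)
            n_fits += 1
            mse = mean((w * xs[i] + b - ys[i]) ** 2 for i in val)
            fold_scores.append(-mse)  # negated MSE: higher is better
        score = mean(fold_scores)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score, n_fits


xs = list(range(20))
ys = [2 * x + 3 for x in xs]  # noiseless toy data
grid = {"alpha": [0.0, 1.0, 10.0], "fit_intercept": [True, False]}
best, score, n_fits = grid_search_cv(xs, ys, grid, k=5)
print(best, n_fits)  # {'alpha': 0.0, 'fit_intercept': True} 30
```

The number of fits is k times the product of the sizes of the value lists, here 5 × 3 × 2 = 30. In Big O terms the search costs O(k · |V₁| · |V₂| · … · |Vₚ|) model fits for p hyperparameters with value sets V₁ … Vₚ, so adding one more hyperparameter with m candidate values multiplies the total work by m.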
