Modern NLP: A Detailed Overview. Part 2: GPTs

Towards AI

JULY 23, 2023

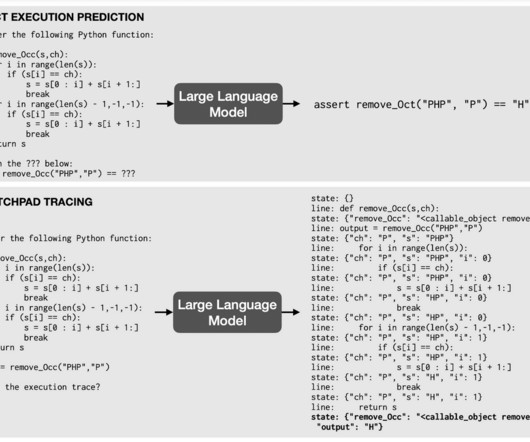

In the first part of the series, we discussed how the Transformer ended the sequence-to-sequence modeling era of natural language processing and understanding.

Semi-Supervised Sequence Learning

Supervised learning has a well-known drawback: it requires a huge labeled dataset for training.
