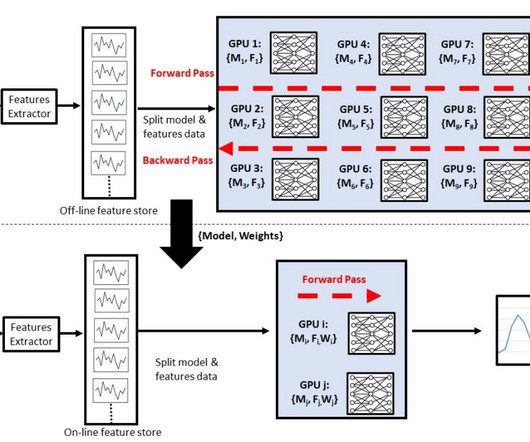

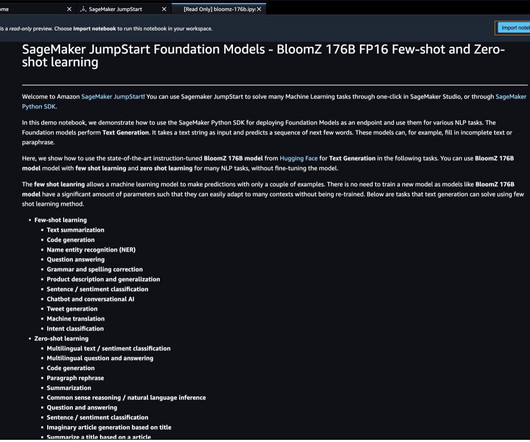

Federated Learning on AWS with FedML: Health analytics without sharing sensitive data – Part 1

AWS Machine Learning Blog

JANUARY 13, 2023

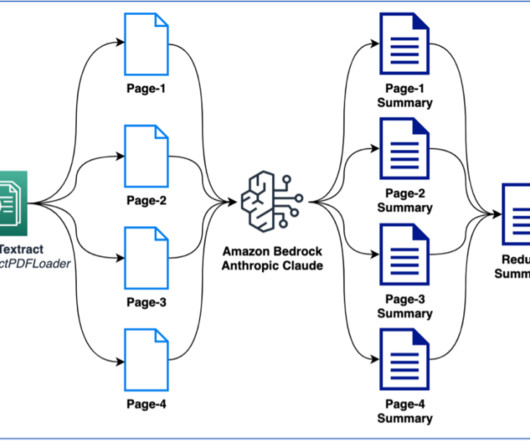

Federated learning (FL) doesn’t require moving or sharing data across sites or with a centralized server during model training. In this two-part series, we demonstrate how you can deploy a cloud-based FL framework on AWS. Participants can choose to keep their data either in their on-premises systems or in an AWS account that they control.
