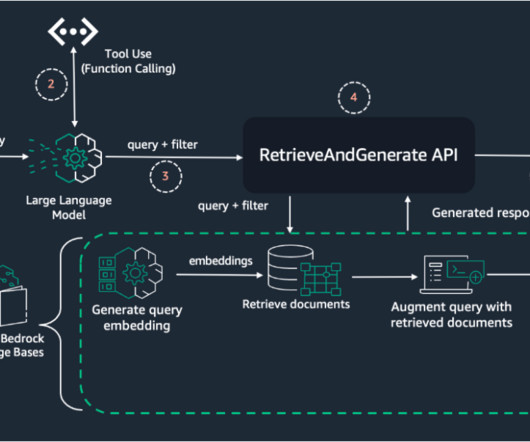

Streamline RAG applications with intelligent metadata filtering using Amazon Bedrock

NOVEMBER 20, 2024

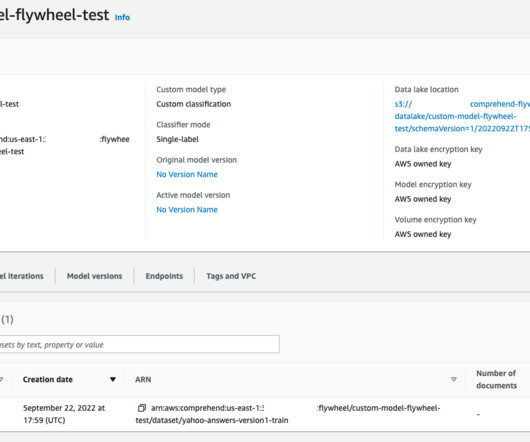

Knowledge base – You need a knowledge base in Amazon Bedrock that already contains ingested data and its associated metadata. For detailed setup instructions, including data preparation, metadata creation, and step-by-step guidance, refer to the post "Amazon Bedrock Knowledge Bases now supports metadata filtering to improve retrieval accuracy."
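As a rough illustration of the metadata creation step mentioned above, the sketch below builds the sidecar file that Amazon Bedrock Knowledge Bases reads alongside each source document: a JSON file named `<source file>.metadata.json` with a top-level `metadataAttributes` object. The helper name `build_metadata_sidecar`, the sample file name, and the attribute keys are hypothetical and chosen only for illustration. The type check is a simplifying assumption of this sketch, so consult the referenced post for the attribute types your knowledge base supports.

```python
import json


def build_metadata_sidecar(source_filename, attributes):
    """Return (sidecar_filename, json_text) for one source document.

    Hypothetical helper: it only prepares the file contents locally and
    does not upload anything to Amazon S3.
    """
    for key, value in attributes.items():
        # Assumption for this sketch: keep attribute values to simple scalars.
        if not isinstance(value, (str, int, float, bool)):
            raise TypeError(f"Unsupported value type for attribute {key!r}")

    # The sidecar sits next to the source file and shares its full name.
    sidecar_name = f"{source_filename}.metadata.json"
    body = json.dumps({"metadataAttributes": attributes}, indent=2)
    return sidecar_name, body


# Example with made-up attributes for a made-up document.
name, body = build_metadata_sidecar(
    "report.pdf", {"year": 2024, "department": "finance"}
)
```

Each sidecar would then be uploaded to the same Amazon S3 location as its source document before the data source is synced, so the attributes become available for filtering at query time.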
