Integrate HyperPod clusters with Active Directory for seamless multi-user login

AWS Machine Learning Blog

APRIL 22, 2024

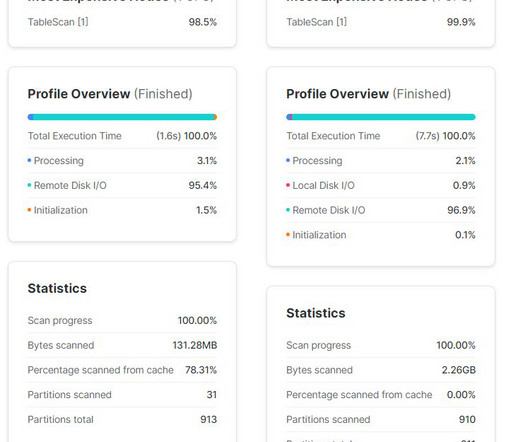

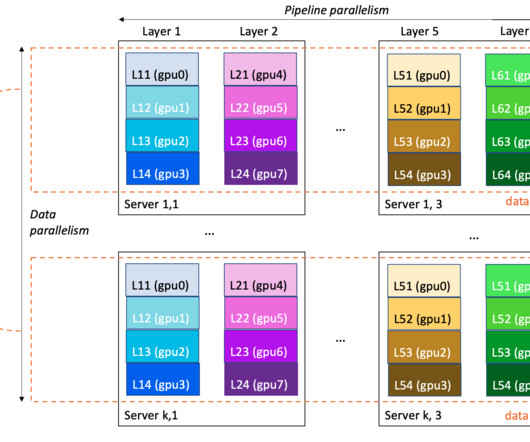

Amazon SageMaker HyperPod is purpose-built to accelerate foundation model (FM) training, removing the undifferentiated heavy lifting involved in managing and optimizing a large training compute cluster. In this solution, HyperPod cluster instances connect to AWS Managed Microsoft AD over LDAPS (LDAP over TLS) through a Network Load Balancer (NLB).
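To make the LDAPS connection path more concrete, here is a minimal Python sketch of what a client-side reachability check against the NLB endpoint might look like. It uses only the standard library and stops at the TLS handshake; it does not perform an LDAP bind or search. The hostname `nlb.example.internal` and the function names are hypothetical, chosen for illustration, and are not taken from the solution itself. Port 636 is the standard LDAPS port.

```python
import socket
import ssl
from typing import Optional

LDAPS_PORT = 636  # standard port for LDAP over TLS


def ldaps_uri(host: str, port: int = LDAPS_PORT) -> str:
    """Return the ldaps:// URI for a given endpoint (hypothetical helper)."""
    return f"ldaps://{host}:{port}"


def make_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a client TLS context that verifies the server certificate.

    ca_file is an optional path to a CA bundle; when omitted, the system
    trust store is used.
    """
    context = ssl.create_default_context(cafile=ca_file)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def check_ldaps_reachable(
    host: str,
    port: int = LDAPS_PORT,
    ca_file: Optional[str] = None,
    timeout: float = 5.0,
) -> Optional[str]:
    """Complete a TLS handshake with the endpoint and return the TLS version.

    Raises an exception (for example ssl.SSLError or OSError) if the
    endpoint is unreachable or the certificate fails verification.
    """
    context = make_tls_context(ca_file)
    with socket.create_connection((host, port), timeout=timeout) as raw_sock:
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            return tls_sock.version()


if __name__ == "__main__":
    # Assumption: replace with the DNS name of your own NLB.
    print(ldaps_uri("nlb.example.internal"))
```

Because the default context enables hostname verification, the certificate presented during the handshake must be valid for the name the client connects to, which is worth keeping in mind when the directory sits behind a load balancer.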
