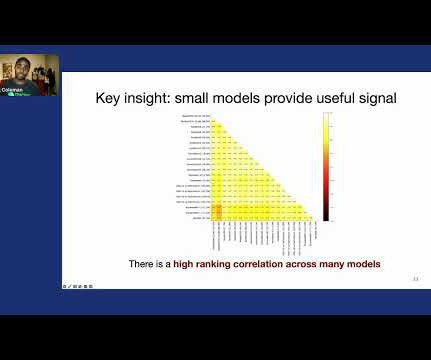

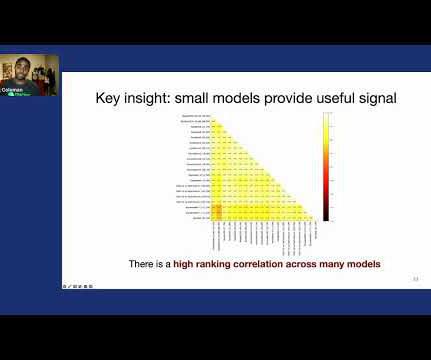

“Looking beyond GPUs for DNN Scheduling on Multi-Tenant Clusters” paper summary

Mlearning.ai

AUGUST 7, 2023

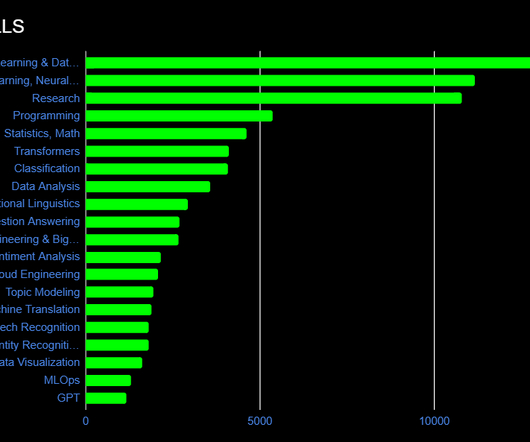

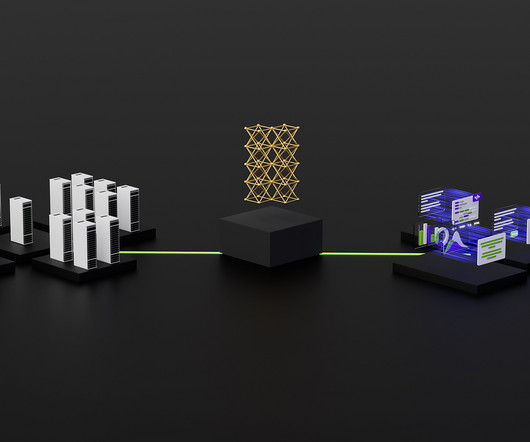

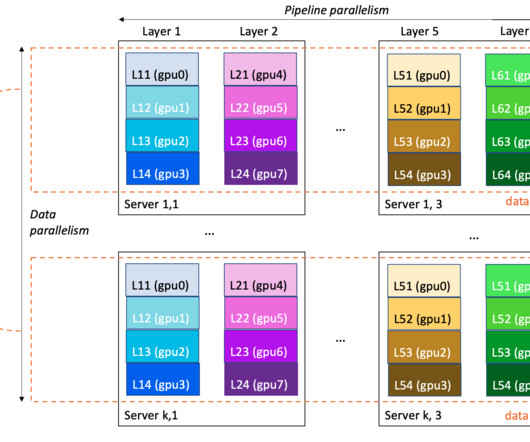

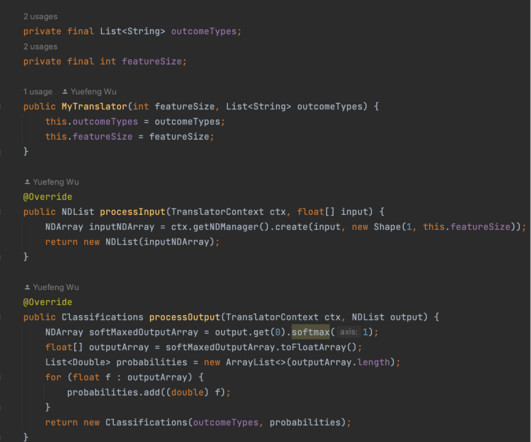

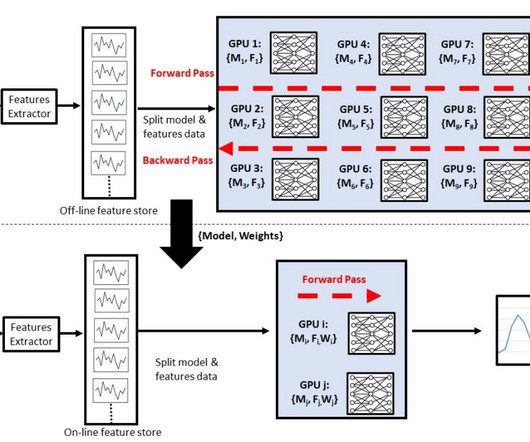

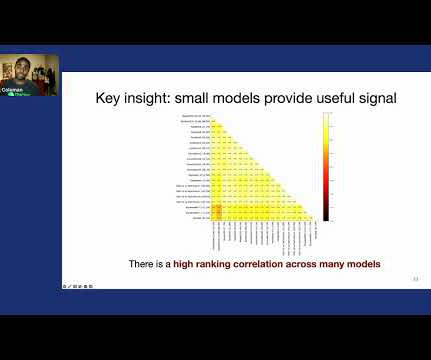

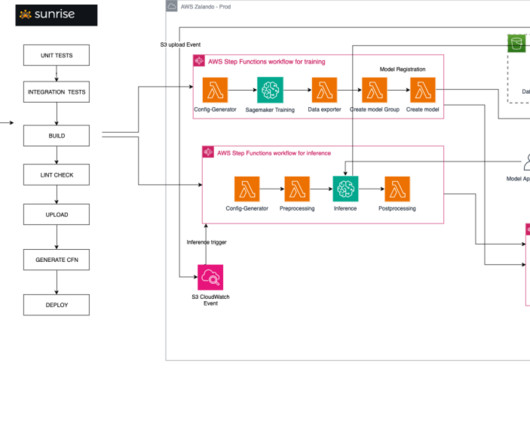

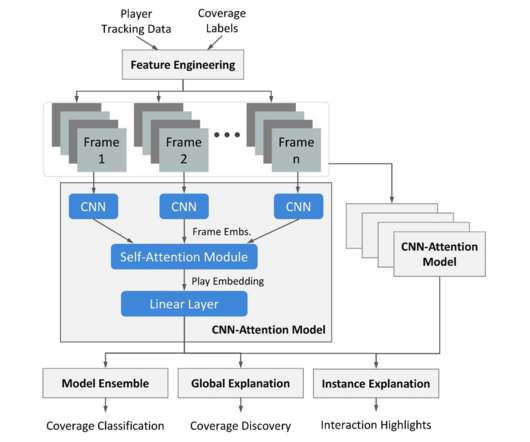

Introduction

Training deep learning models is demanding in both computation and memory. Enterprises and research and development teams share GPU clusters for this purpose. A scheduler runs on the cluster to accept jobs and allocate GPUs, CPUs, and system memory to the tasks submitted by different users.
