An Overview of Extreme Multilabel Classification (XML/XMLC)

Towards AI

APRIL 14, 2023

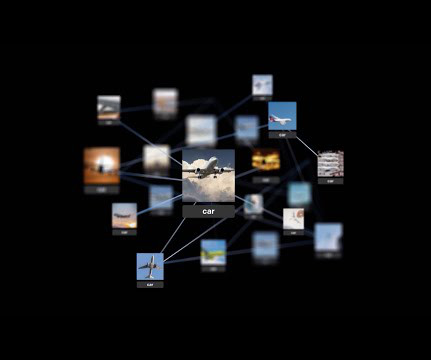

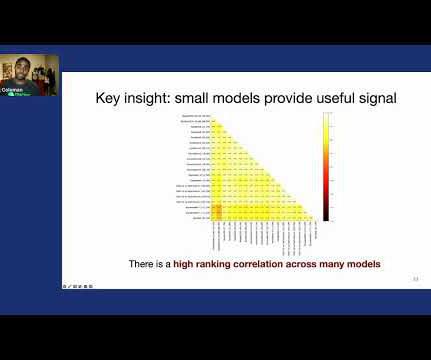

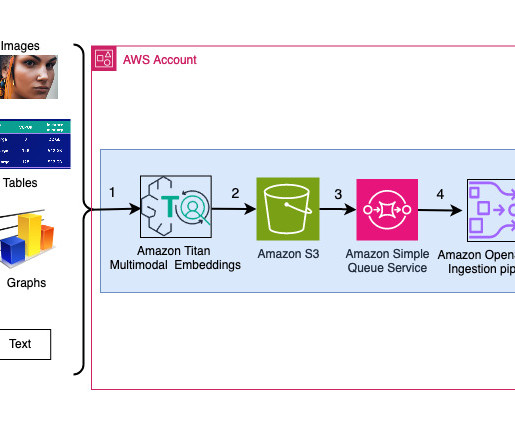

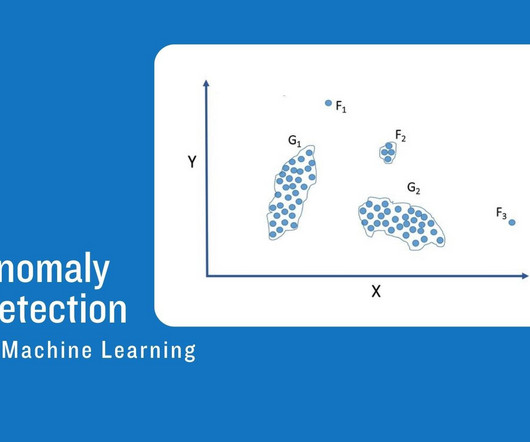

Last Updated on April 17, 2023 by Editorial Team

Author(s): Kevin Berlemont, PhD

Originally published on Towards AI.

Prediction is then performed with a k-nearest-neighbor search within the embedding space. The feature space is reduced by aggregating features into clusters of balanced size.
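To make the k-nearest-neighbor prediction step concrete, here is a minimal sketch in Python with NumPy. The excerpt does not specify the distance metric, how neighbor labels are combined, or how the embeddings are learned, so the Euclidean distance, the vote-by-sum scoring, the function name `knn_predict_labels`, and the toy data are all assumptions made for illustration, not the article's method.

```python
import numpy as np

def knn_predict_labels(train_emb, train_labels, query_emb, k=3, top=2):
    """Return the indices of the `top` labels for one query point.

    Assumed scheme (not taken from the article): find the k training points
    closest to the query in embedding space (Euclidean distance), sum their
    binary label vectors, and rank labels by that vote count.
    """
    # Distance from the query to every training embedding
    dists = np.linalg.norm(train_emb - query_emb, axis=1)
    # Indices of the k closest training points
    nearest = np.argsort(dists)[:k]
    # Each neighbor votes for the labels it carries
    scores = train_labels[nearest].sum(axis=0)
    # Stable sort on negated scores: ties go to the lower label index
    ranked = np.argsort(-scores, kind="stable")
    return ranked[:top].tolist()

# Toy data: 5 training points in a 2-D embedding space, 4 possible labels
train_emb = np.array([
    [0.0, 0.0],
    [0.1, 0.0],
    [0.0, 0.1],
    [5.0, 5.0],
    [5.1, 5.0],
])
train_labels = np.array([
    [1, 0, 1, 0],
    [1, 0, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 1],
])

query = np.array([0.05, 0.05])
predicted = knn_predict_labels(train_emb, train_labels, query, k=3, top=2)
print(predicted)
```

In a real extreme multilabel setting the label matrix would be sparse and the exhaustive distance computation would typically be replaced by an approximate nearest-neighbor index; the brute-force search here is only meant to show the logic.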
