Acceleration Unlocked: DS3_v2 Instance Types on Azure now supported by Photon

Databricks

MAY 1, 2023

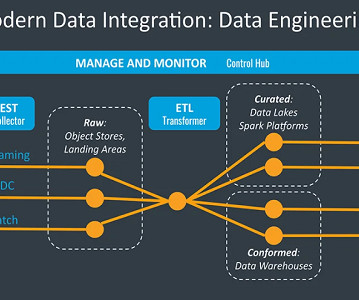

At Databricks, we offer maximal flexibility for choosing compute for ETL and ML/AI workloads. Staying true to the theme of flexibility, we announce.

Data Science Dojo

OCTOBER 31, 2024

According to Google AI, they work on projects that may not have immediate commercial applications but push the boundaries of AI research. With the continuous growth in AI, demand for remote data science jobs is set to rise. Specialists in this role help organizations ensure compliance with regulations and ethical standards.

Mlearning.ai

JULY 8, 2023

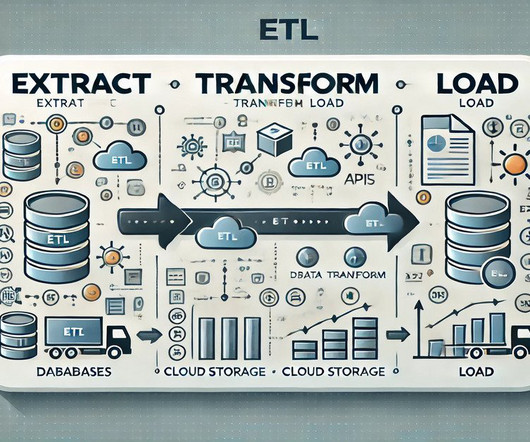

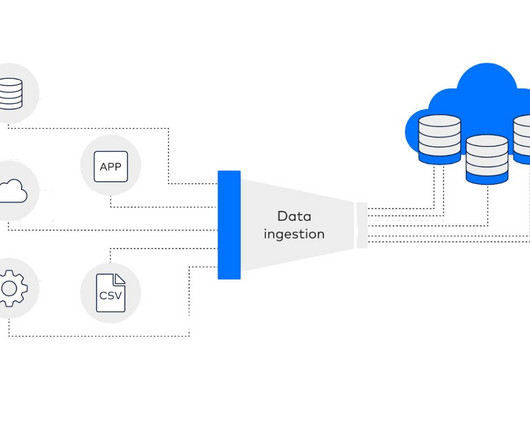

One of them is Azure Functions. In this article, we’re going to look at what an Azure function is and how we can employ it to create a basic extract, transform and load (ETL) pipeline with minimal code. Extract, Transform and Load: Before we begin, let’s shed some light on what an ETL pipeline essentially is.

Data Science 101

JANUARY 17, 2020

Azure Machine Learning Datasets: Learn all about Azure Datasets, why to use them, and how they help. AI Powered Speech Analytics for Amazon Connect: This video walks through the AWS products necessary for converting video to text, translating, and performing basic NLP. Some news this week out of Microsoft and Amazon.

Data Science Dojo

FEBRUARY 20, 2023

It is used by businesses across industries for a wide range of applications, including fraud prevention, marketing automation, customer service, artificial intelligence (AI), chatbots, virtual assistants, and recommendations. Azure Machine Learning has a variety of prebuilt models, such as speech, language, image, and recommendation models.

Pickl AI

OCTOBER 15, 2024

Summary: Selecting the right ETL platform is vital for efficient data integration. Introduction In today’s data-driven world, businesses rely heavily on ETL platforms to streamline data integration processes. What is ETL in Data Integration? Let’s explore some real-world applications of ETL in different sectors.

Pickl AI

OCTOBER 17, 2024

Summary: This article explores the significance of ETL Data in Data Management. It highlights key components of the ETL process, best practices for efficiency, and future trends like AI integration and real-time processing, ensuring organisations can leverage their data effectively for strategic decision-making.

Pickl AI

DECEMBER 15, 2024

Familiarise yourself with ETL processes and their significance. ETL Process: Extract, Transform, Load processes that prepare data for analysis. Can You Explain the ETL Process? The ETL process involves three main steps: Extract: Data is collected from various sources. How Do You Ensure Data Quality in a Data Warehouse?

IBM Data Science in Practice

FEBRUARY 21, 2023

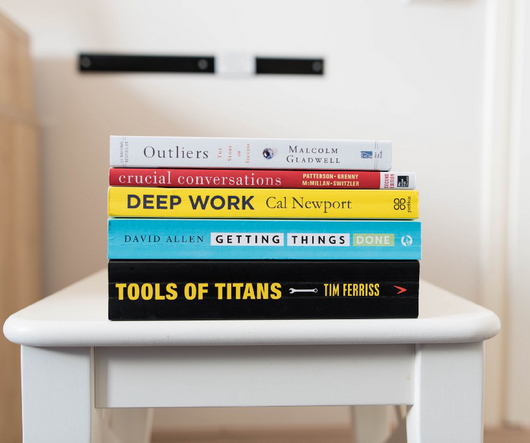

Just for AI Titans — Autonomous & Continuous AI Training — MLOPS on steroids. Photo by Jeroen den Otter on Unsplash Who should read this article: Machine and Deep Learning Engineers, Solution Architects, Data Scientist, AI Enthusiast, AI Founders What is covered in this article? Continuous training is the solution.

Pickl AI

APRIL 6, 2023

Accordingly, one of the most in-demand roles that you might be interested in is that of Azure Data Engineer. The following blog will help you learn about the Azure Data Engineering job description, salary, and certification course. How to Become an Azure Data Engineer?

Pickl AI

JUNE 7, 2024

Summary: Choosing the right ETL tool is crucial for seamless data integration. At the heart of this process lie ETL Tools—Extract, Transform, Load—a trio that extracts data, tweaks it, and loads it into a destination. Choosing the right ETL tool is crucial for smooth data management. What is ETL?

Women in Big Data

NOVEMBER 27, 2024

Evaluate integration capabilities with existing data sources and Extract Transform and Load (ETL) tools. Microsoft Azure Synapse Analytics Microsoft Azure Synapse Analytics is an integrated analytics service that combines data warehousing and big data capabilities into a unified platform.

ODSC - Open Data Science

FEBRUARY 6, 2025

50% Off ODSC East 2025 Passes, Prompt Engineering Techniques, AI Builders Week 3 Highlights, and AI Guardrails. The ODSC East 2025 Preliminary Schedule is LIVE! Register by Friday for 50% off! Check out our first-announced speakers and updates here! Let's dive into the schedule and key events that will shape this year's conference.

Dataconomy

SEPTEMBER 4, 2023

Machine learning and AI analytics: Machine learning and AI analytics leverage advanced algorithms to automate the analysis of data, discover hidden patterns, and make predictions. AI and augmented analytics assist users in navigating complex data sets, offering valuable insights.

ODSC - Open Data Science

JUNE 12, 2023

Then we have some other ETL processes to constantly land the past 5 years of data into the Datamarts. Power BI Datamarts provide no-code/low-code datamart capabilities using Azure SQL Database technology in the background.

phData

SEPTEMBER 2, 2024

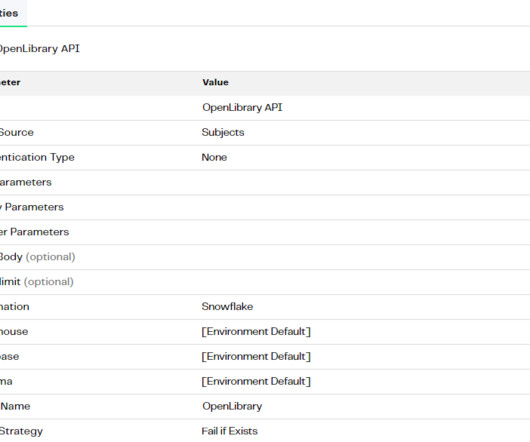

In this blog, we will cover the best practices for developing jobs in Matillion, an ETL/ELT tool built specifically for cloud database platforms. Matillion is a SaaS-based data integration platform that can be hosted in AWS, Azure, or GCP. Some of the supported ones for the Matillion ETL/ELT are GitHub, Bitbucket, and Azure DevOps.

Pickl AI

NOVEMBER 4, 2024

ETL Processes In Extract, Transform, Load (ETL) operations, ODBC facilitates the extraction of data from source databases, transformation of data into the desired format, and loading it into target systems, thus streamlining data warehousing efforts.

Pickl AI

SEPTEMBER 10, 2024

Summary: AI is revolutionising the way we use spreadsheet software like Excel. By integrating AI capabilities, Excel can now automate Data Analysis, generate insights, and even create visualisations with minimal human intervention. What is AI in Excel? You can automatically clean, organise, and analyse large datasets with AI.

ODSC - Open Data Science

JANUARY 18, 2024

EVENT — ODSC East 2024 In-Person and Virtual Conference April 23rd to 25th, 2024 Join us for a deep dive into the latest data science and AI trends, tools, and techniques, from LLMs to data analytics and from machine learning to responsible AI. Learn more about the cloud. Stay on top of data engineering trends. First, articles.

DagsHub

NOVEMBER 11, 2024

In this article, we’ll explore how AI can transform unstructured data into actionable intelligence, empowering you to make informed decisions, enhance customer experiences, and stay ahead of the competition. Let’s look at how we can convert unstructured data into better informative structures using new AI techniques and solutions.

Data Science Dojo

JULY 3, 2024

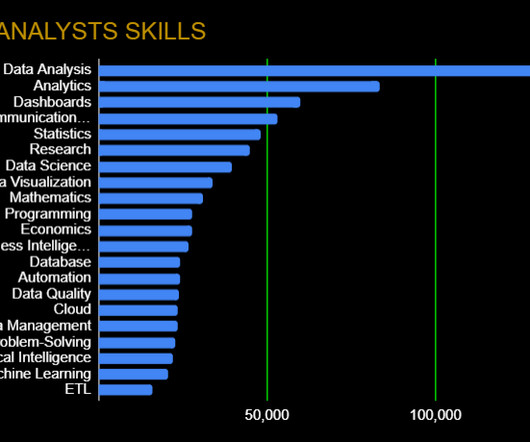

Data Engineering: Building and maintaining data pipelines, ETL (Extract, Transform, Load) processes, and data warehousing. Artificial Intelligence: Concepts of AI include neural networks, natural language processing (NLP), and reinforcement learning.

phData

AUGUST 9, 2024

Imagine you are building out a routine sales report in Snowflake AI Data Cloud when you come across a requirement for a field called “Is Platinum Customer.” Cloud Storage Upload Snowflake can easily upload files from cloud storage (AWS S3, Azure Storage, GCP Cloud Storage). This scenario is all too common to analytics engineers.

ODSC - Open Data Science

APRIL 3, 2023

Data Wrangling: Data Quality, ETL, Databases, Big Data. The modern data analyst is expected to be able to source and retrieve their own data for analysis. Competence in data quality, databases, and ETL (Extract, Transform, Load) is essential. Cloud Services: Google Cloud Platform, AWS, Azure. Sign up now, start learning today!

IBM Journey to AI blog

JANUARY 18, 2023

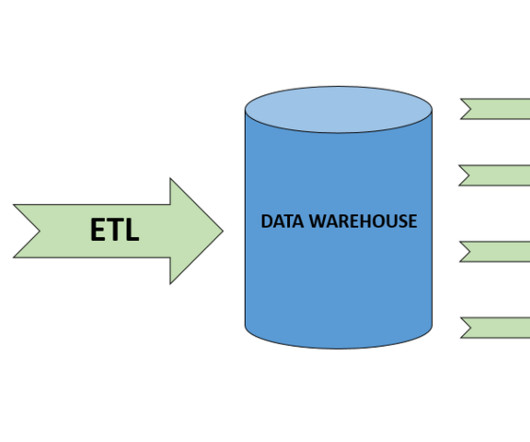

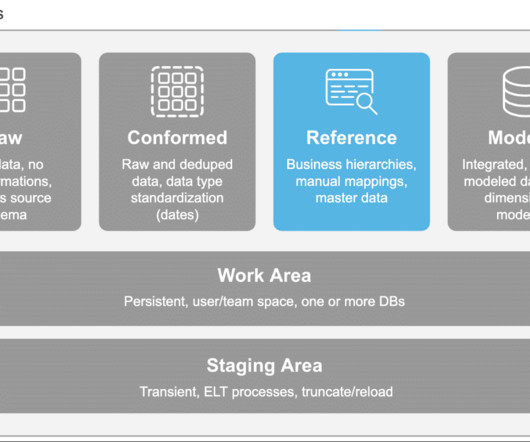

While traditional data warehouses made use of an Extract-Transform-Load (ETL) process to ingest data, data lakes instead rely on an Extract-Load-Transform (ELT) process. This adds an additional ETL step, making the data even more stale. Multiple products exist in the market, including Databricks, Azure Synapse and Amazon Athena.

phData

SEPTEMBER 25, 2024

While numerous ETL tools are available on the market, selecting the right one can be challenging. There are a few key factors to consider when choosing an ETL tool, including: Business Requirement: What type or amount of data do you need to handle? It can be hosted on major cloud platforms like AWS, Azure, and GCP.

Pickl AI

NOVEMBER 4, 2024

Key components of data warehousing include: ETL Processes: ETL stands for Extract, Transform, Load. ETL is vital for ensuring data quality and integrity. Azure: Microsoft Azure offers a range of services for Data Engineering, including Azure Data Lake for scalable storage and Azure Databricks for collaborative Data Analytics.

ODSC - Open Data Science

APRIL 6, 2023

These are used to extract, transform, and load (ETL) data between different systems. Many cloud providers, such as Amazon Web Services and Microsoft Azure, offer SQL-based database services that can be used to store and analyze data in the cloud. Data integration tools allow for the combining of data from multiple sources.

Pickl AI

JULY 25, 2023

They create data pipelines, ETL processes, and databases to facilitate smooth data flow and storage. Data Integration and ETL (Extract, Transform, Load) Data Engineers develop and manage data pipelines that extract data from various sources, transform it into a suitable format, and load it into the destination systems.

DagsHub

OCTOBER 23, 2024

This article will discuss managing unstructured data for AI and ML projects. You will learn the following: Why unstructured data management is necessary for AI and ML projects. How to leverage Generative AI to manage unstructured data Benefits of applying proper unstructured data management processes to your AI/ML project.

Data Science Blog

FEBRUARY 4, 2023

In the era of Industry 4.0, linking data from MES (Manufacturing Execution System) with that from ERP, CRM and PLM systems plays an important role in creating integrated monitoring and control of business processes.

Pickl AI

DECEMBER 16, 2024

Data Factory: Simplifies the creation of ETL pipelines to integrate data from diverse sources. It also integrates Advanced AI and Machine Learning capabilities to deliver predictive insights and automation, setting it apart from traditional analytics platforms. Power BI: Provides dynamic dashboards and reporting tools.

Precisely

MAY 23, 2024

The sudden popularity of cloud data platforms like Databricks, Snowflake, Amazon Redshift, Amazon RDS, Confluent Cloud, and Azure Synapse has accelerated the need for powerful data integration tools that can deliver large volumes of information from transactional applications to the cloud reliably, at scale, and in real time.

phData

NOVEMBER 28, 2023

In July 2023, Matillion launched its fully SaaS platform, Data Productivity Cloud, aiming to create a future-ready, everyone-ready, and AI-ready environment that companies can easily adopt to start automating their data pipelines with code, low code, or no code at all. Why Does it Matter?

Pickl AI

JULY 25, 2024

AWS Glue: A fully managed ETL service that makes it easy to prepare and load data for analytics. Popular options include Apache Kafka for real-time streaming, Apache Spark for batch and stream processing, Talend for ETL, and cloud-based solutions like AWS Glue, Azure Data Factory, and Google Cloud Dataflow.

Pickl AI

JULY 6, 2023

Let’s understand the key stages in the data flow process: Data Ingestion: Data is fed into Hadoop’s distributed file system (HDFS) or other storage systems supported by Hive, such as Amazon S3 or Azure Data Lake Storage.

Pickl AI

APRIL 26, 2024

Data Warehousing and ETL Processes What is a data warehouse, and why is it important? Explain the Extract, Transform, Load (ETL) process. The ETL process involves extracting data from source systems, transforming it into a suitable format or structure, and loading it into a data warehouse or target system for analysis and reporting.
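The three steps described above can be sketched in a few lines of Python. This is only an illustrative sketch, not code from the article: the in-memory CSV, the `orders` table, and the function names `extract`, `transform`, `load`, and `run_pipeline` are all hypothetical, and an in-memory SQLite database stands in for a real data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data standing in for a real source system.
SOURCE_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,
3,carol,7.25
"""


def extract(text):
    """Extract: read raw rows from the source (here, an in-memory CSV)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim and lowercase names, cast types, skip rows with no amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # incomplete record, not loaded
        cleaned.append(
            (int(row["order_id"]), row["customer"].strip().lower(), float(amount))
        )
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(conn):
    """Run extract -> transform -> load, then return (row count, total amount)."""
    load(transform(extract(SOURCE_CSV)), conn)
    return conn.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchone()
```

Calling `run_pipeline(sqlite3.connect(":memory:"))` runs the whole flow against a throwaway database; in practice each stage would talk to real source and target systems rather than in-memory stand-ins.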

Pickl AI

JULY 24, 2023

Data warehousing and ETL (Extract, Transform, Load) procedures frequently involve batch processing. Cloud service providers provide a variety of services and applications for data intake, including Azure Event Hubs, Google Cloud Pub/Sub, and Amazon Kinesis.

phData

JANUARY 19, 2024

The platform features AI-powered tools that enable the integration of large language models (LLM) into your data pipelines, as well as a great connector library and a visual, low-code design that supports a wide range of data movement and transformation operations. With that, you can cover most of the necessary connections.

phData

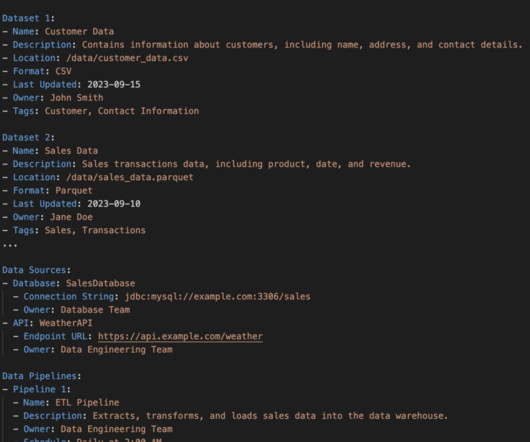

SEPTEMBER 19, 2023

Thankfully, there are tools available to help with metadata management, such as AWS Glue, Azure Data Catalog, or Alation, that can automate much of the process. However, this can be time-consuming and prone to human error, leading to misinformation. What are the Best Data Modeling Methodologies and Processes?

The MLOps Blog

OCTOBER 20, 2023

Some of the most widely adopted tools in this space are Deepnote, Amazon SageMaker, Google Vertex AI, and Azure Machine Learning. While often ignored by data scientists, I believe mastering ETL is core and critical to guarantee the success of any machine learning project.

The MLOps Blog

MAY 31, 2023

To store image data, cloud storage services such as Amazon S3, GCP buckets, and Azure Blob Storage are some of the best options, whereas one might want to utilize Hadoop + Hive or BigQuery to store clickstream and other forms of text and tabular data. One might want to utilize an off-the-shelf MLOps platform to maintain different versions of data.

phData

SEPTEMBER 27, 2024

In traditional ETL (Extract, Transform, Load) processes in CDPs, staging areas were often temporary holding pens for data. Extract, Load, and Transform (ELT) using tools like dbt has largely replaced ETL. You might choose a cloud data warehouse like the Snowflake AI Data Cloud or BigQuery. directly from Snowflake.

phData

OCTOBER 28, 2024

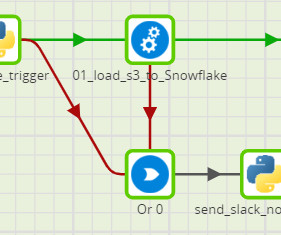

Modern low-code/no-code ETL tools allow data engineers and analysts to build pipelines seamlessly using a drag-and-drop and configure approach with minimal coding. One such option is the availability of Python Components in Matillion ETL, which allows us to run Python code inside the Matillion instance.
