Research: A periodic table for machine learning

Dataconomy

APRIL 24, 2025

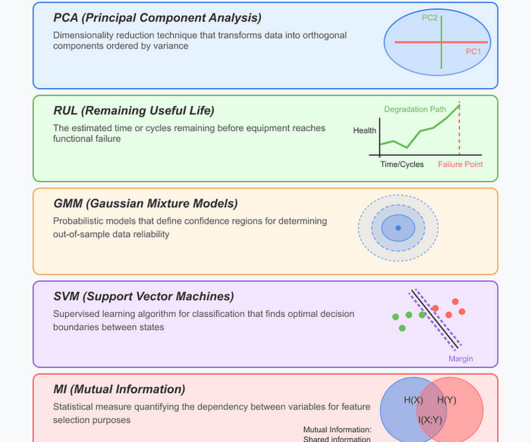

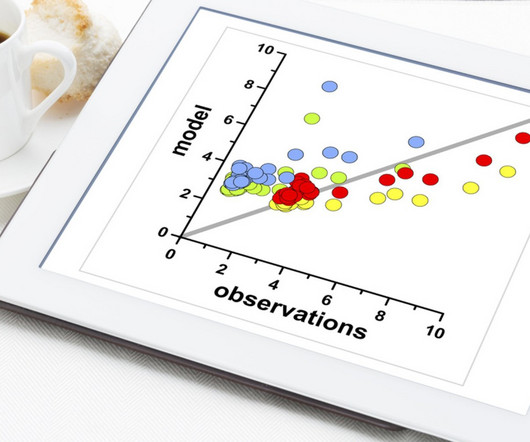

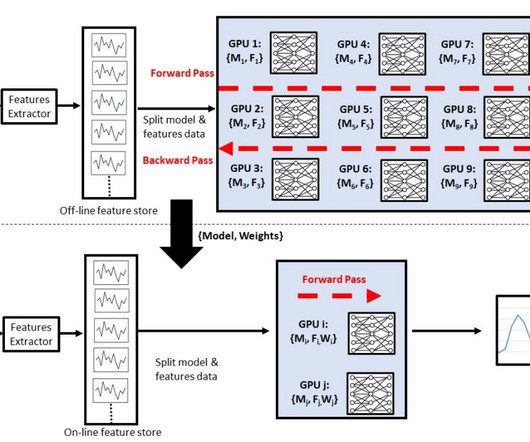

The idea is deceptively simple: represent most machine learning algorithms (classification, regression, clustering, and even large language models) as special cases of one general principle: learning the relationships between data points. One result is a state-of-the-art image classification algorithm that requires zero human labels.
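To make "learning the relationships between data points" a little more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the researchers' method: it assumes that a "relationship" can be written as a probability distribution over each point's neighbors, and that a learned representation is scored by how far its neighbor distributions drift from the reference ones, measured with KL divergence. The function names `neighbor_distribution` and `relationship_mismatch`, and the `temperature` parameter, are hypothetical.

```python
import numpy as np


def neighbor_distribution(points, temperature=1.0):
    """For each point i, return a probability distribution over the other
    points j that puts more mass on nearer points (illustrative assumption)."""
    diffs = points[:, None, :] - points[None, :, :]
    sq_dists = (diffs ** 2).sum(axis=-1)
    logits = -sq_dists / temperature
    np.fill_diagonal(logits, -np.inf)            # a point is not its own neighbor
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    return weights / weights.sum(axis=1, keepdims=True)


def relationship_mismatch(p, q, eps=1e-12):
    """Average KL divergence between matching rows of p (reference
    relationships) and q (relationships in the learned representation)."""
    return float(np.mean(np.sum(p * (np.log(p + eps) - np.log(q + eps)), axis=1)))


# Two tight pairs of points, far apart from each other.
data = np.array([[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]])
reference = neighbor_distribution(data)

# A representation that keeps the pairs together matches the reference;
# one that swaps points across pairs does not.
faithful = neighbor_distribution(data.copy())
scrambled = neighbor_distribution(data[[0, 2, 1, 3]])

print(relationship_mismatch(reference, faithful))
print(relationship_mismatch(reference, scrambled))
```

In this toy setup, different choices of how the reference and learned distributions are built would correspond to different kinds of algorithm, which is the spirit of the "special cases" framing above; the specific choices here are only for illustration.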
