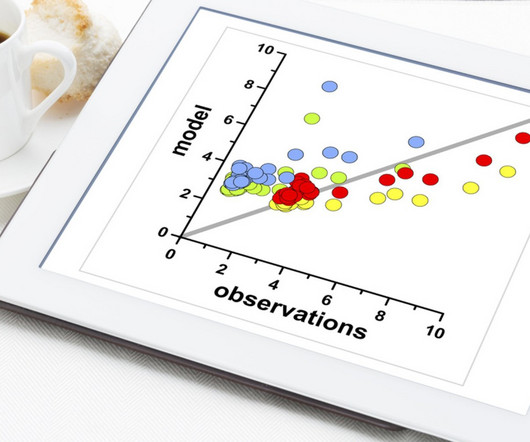

Predictive model validation

Dataconomy

MARCH 11, 2025

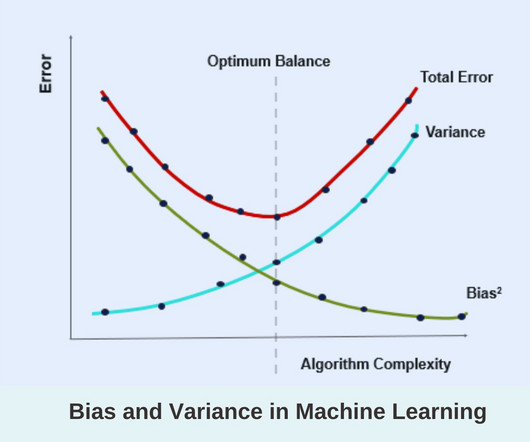

The role of the validation dataset

The validation dataset occupies a unique position in the process of model evaluation, acting as an intermediary between training and testing.

Definition of validation dataset

A validation dataset is a separate subset used specifically for tuning a model during development.
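To make the three-way division concrete, here is a minimal sketch of carving one dataset into training, validation, and test subsets using only the Python standard library. The function name `train_val_test_split`, the 60/20/20 proportions, and the fixed seed are illustrative assumptions, not something the article prescribes.

```python
import random


def train_val_test_split(rows, val_frac=0.2, test_frac=0.2, seed=0):
    """Shuffle rows and split them into train, validation, and test lists.

    The fractions and seed are hypothetical defaults chosen for illustration.
    """
    if not 0 <= val_frac + test_frac < 1:
        raise ValueError("val_frac + test_frac must be in [0, 1)")

    # Copy before shuffling so the caller's data is left untouched.
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)

    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)

    test = shuffled[:n_test]                # held back for the final evaluation
    val = shuffled[n_test:n_test + n_val]   # used for tuning during development
    train = shuffled[n_test + n_val:]       # used to fit the model
    return train, val, test


train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))
```

In a workflow like this, candidate settings would typically be compared on `val`, while `test` is consulted only once a final model has been chosen.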
