Top 15 Big Data Softwares to Know About in 2023

Analytics Vidhya

JULY 12, 2023

Best Big Data Software - Apache Hadoop, Apache Spark, Apache Kafka, Apache Storm, Apache Cassandra, Apache Hive, Zoho & more.

Women in Big Data

OCTOBER 9, 2024

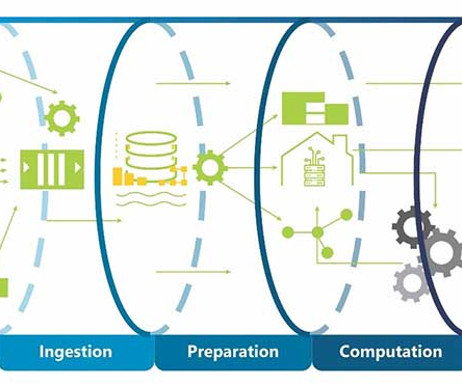

Data Ingestion: Data is collected and funneled into the pipeline using batch or real-time methods, leveraging tools like Apache Kafka, AWS Kinesis, or custom ETL scripts. This phase ensures quality and consistency using frameworks like Apache Spark or AWS Glue.
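As a rough illustration of the ingestion phase described above, the following sketch groups incoming records into batches and drops records that fail a basic quality check. It uses only the Python standard library; the schema in `REQUIRED_FIELDS` and the function names `is_valid` and `ingest_in_batches` are hypothetical and stand in for whatever Kafka, Kinesis, Spark, or Glue would do in a real pipeline.

```python
from typing import Dict, Iterable, Iterator, List

# Hypothetical schema: fields every record must carry to be accepted.
REQUIRED_FIELDS = ("id", "timestamp", "value")


def is_valid(record: Dict) -> bool:
    """Return True if every required field is present and not None."""
    return all(record.get(field) is not None for field in REQUIRED_FIELDS)


def ingest_in_batches(records: Iterable[Dict], batch_size: int = 2) -> Iterator[List[Dict]]:
    """Yield lists of valid records, at most batch_size each.

    Invalid records are skipped; a real pipeline would more likely
    route them to a dead-letter store instead of dropping them.
    """
    batch: List[Dict] = []
    for record in records:
        if not is_valid(record):
            continue
        batch.append(record)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # emit the final, possibly smaller, batch
        yield batch


raw_records = [
    {"id": 1, "timestamp": "2024-10-09T00:00:00", "value": 1.0},
    {"id": 2, "timestamp": None, "value": 2.0},  # fails the quality check
    {"id": 3, "timestamp": "2024-10-09T00:00:02", "value": 3.0},
    {"id": 4, "timestamp": "2024-10-09T00:00:03", "value": 4.0},
]
batches = list(ingest_in_batches(raw_records, batch_size=2))
```

Because `ingest_in_batches` is a generator, the same function can consume a finite file or an unbounded stream, which loosely mirrors the batch versus real-time distinction in the text.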

Pickl AI

DECEMBER 2, 2024

These frameworks facilitate the efficient processing of Big Data, enabling organisations to derive insights quickly. Some popular frameworks include: Apache Hadoop: An open-source framework that allows for distributed processing of large datasets across clusters of computers. It is known for its high fault tolerance and scalability.
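To make "distributed processing" a little more concrete, here is a minimal single-process sketch of the map, shuffle, and reduce pattern that Hadoop's MapReduce engine applies across a cluster. It does not use any Hadoop API; the function names are illustrative assumptions, and each string in `chunks` stands in for a block of data that a separate worker node would handle.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def map_phase(chunk: str) -> List[Tuple[str, int]]:
    """Emit a (word, 1) pair for every word in one chunk of text."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group all emitted values by key, as the framework does between map and reduce."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped: Dict[str, List[int]]) -> Dict[str, int]:
    """Sum the values collected for each key."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(chunks: Iterable[str]) -> Dict[str, int]:
    """Run map over every chunk, then shuffle and reduce the combined output."""
    pairs: List[Tuple[str, int]] = []
    for chunk in chunks:  # on a cluster, each chunk would be mapped on a different node
        pairs.extend(map_phase(chunk))
    return reduce_phase(shuffle_phase(pairs))


counts = word_count(["big data big", "Data pipeline"])
```

Since each call to `map_phase` depends only on its own chunk, the map step can run on many machines at once and a failed chunk can simply be re-run, which is the basis of the scalability and fault tolerance the snippet mentions.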

Pickl AI

NOVEMBER 25, 2024

These frameworks facilitate the efficient processing of Big Data, enabling organisations to derive insights quickly. Some popular frameworks include: Apache Hadoop: An open-source framework that allows for distributed processing of large datasets across clusters of computers. It is known for its high fault tolerance and scalability.

Pickl AI

NOVEMBER 4, 2024

Among these tools, Apache Hadoop, Apache Spark, and Apache Kafka stand out for their unique capabilities and widespread usage. Apache Hadoop: Hadoop is a powerful framework that enables distributed storage and processing of large data sets across clusters of computers.

Pickl AI

JULY 29, 2024

Setting up a Hadoop cluster involves the following steps: Hardware Selection: Choose the appropriate hardware for the master node and worker nodes, considering factors such as CPU, memory, storage, and network bandwidth. … Apache Hadoop, Cloudera, Hortonworks). Download and extract the Apache Hadoop distribution on all nodes.

Pickl AI

JULY 30, 2024

Integration with Big Data Ecosystems: NiFi integrates seamlessly with Big Data technologies such as Apache Hadoop, Apache Kafka, and Apache Spark. This integration allows organizations to build robust data pipelines that leverage the strengths of each technology for data processing and analytics.
