How LotteON built a personalized recommendation system using Amazon SageMaker and MLOps

AWS Machine Learning Blog

MAY 16, 2024

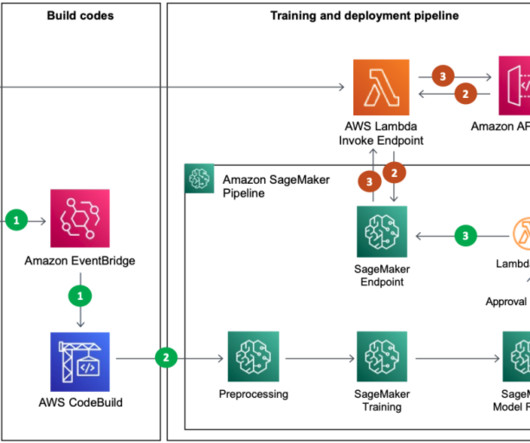

With Amazon EMR, which provides fully managed environments for frameworks such as Apache Hadoop and Spark, we were able to process data faster.

Event-based pipeline automation

After the preprocessing batch completed and the training and test data were stored in Amazon S3, that event invoked AWS CodeBuild, which ran the training pipeline in SageMaker.
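The trigger described above can be sketched as a small event handler. This is a minimal illustration under stated assumptions, not LotteON's implementation. The post does not say how the S3 event reaches CodeBuild, so this sketch assumes an S3 `ObjectCreated` notification delivered to a Lambda-style function. It also assumes that a Spark `_SUCCESS` marker file signals that a preprocessing batch has finished. The project name `recsys-training-pipeline`, the `preprocessed/` prefix, and the `TRAINING_DATA_URI` variable are hypothetical. The only real API used is the boto3 CodeBuild client's `start_build`.

```python
from typing import Any, Dict, List
from urllib.parse import unquote_plus

# Hypothetical names: the original post does not give the bucket layout or project name.
CODEBUILD_PROJECT = "recsys-training-pipeline"
TRAINING_DATA_PREFIX = "preprocessed/"
SUCCESS_MARKER = "_SUCCESS"  # Spark writes this marker once a job's output is committed


def build_requests(event: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Turn an S3 event notification into keyword arguments for CodeBuild start_build.

    Only ObjectCreated events for a _SUCCESS marker under the training-data prefix
    count as "preprocessing batch finished"; all other records are ignored.
    """
    calls = []
    for record in event.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes object keys in notifications (for example, spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        if not (key.startswith(TRAINING_DATA_PREFIX) and key.endswith(SUCCESS_MARKER)):
            continue
        data_prefix = key[: -len(SUCCESS_MARKER)]
        calls.append({
            "projectName": CODEBUILD_PROJECT,
            # Pass the finished batch location to the build, which starts the SageMaker pipeline.
            "environmentVariablesOverride": [
                {"name": "TRAINING_DATA_URI",
                 "value": f"s3://{bucket}/{data_prefix}",
                 "type": "PLAINTEXT"},
            ],
        })
    return calls


def handler(event, context=None, codebuild=None):
    """Lambda-style entry point: start one CodeBuild build per finished batch."""
    if codebuild is None:
        import boto3  # imported lazily so the routing logic can be exercised offline
        codebuild = boto3.client("codebuild")
    build_ids = []
    for kwargs in build_requests(event):
        response = codebuild.start_build(**kwargs)
        build_ids.append(response["build"]["id"])
    return build_ids
```

Waiting for the marker file rather than reacting to every object write keeps a single batch, which may consist of many part files, from launching many training runs. Routing the S3 event through Amazon EventBridge instead of a function would be an equally plausible setup.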
