Apache Kafka use cases: Driving innovation across diverse industries

IBM Journey to AI blog

SEPTEMBER 4, 2024

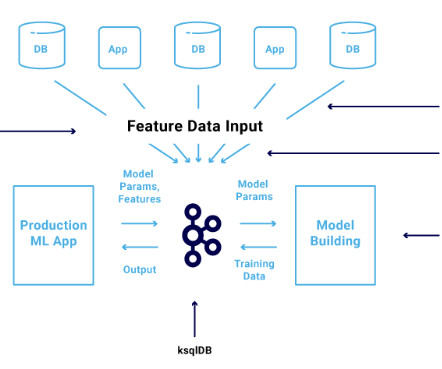

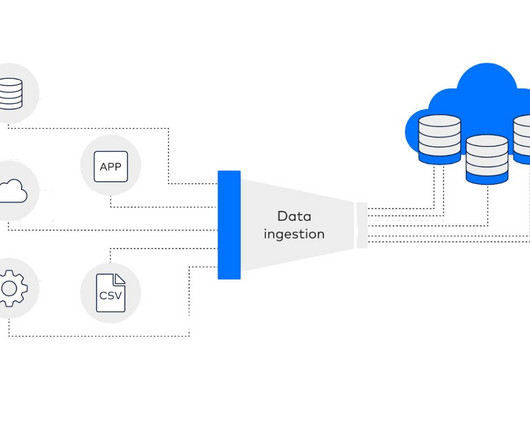

Apache Kafka is an open-source, distributed streaming platform that allows developers to build real-time, event-driven applications. With Apache Kafka, developers can build applications that continuously consume streaming data records and deliver real-time experiences to users.

How does Apache Kafka work?
