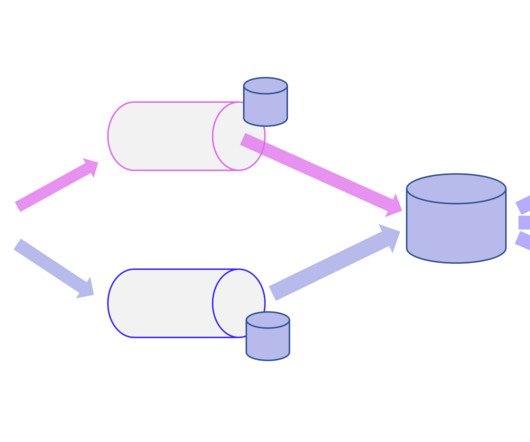

Event-driven architecture (EDA) enables a business to become more aware of everything that’s happening, as it’s happening

IBM Journey to AI blog

JANUARY 8, 2024

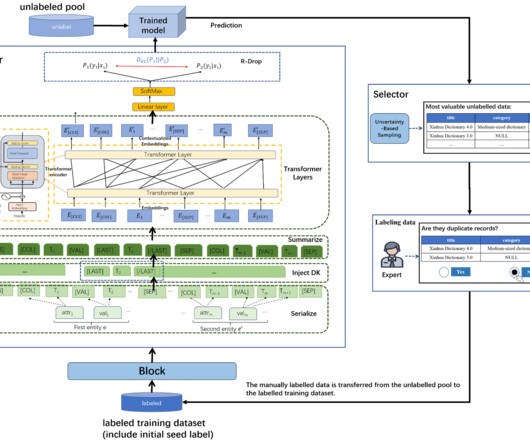

In modern enterprises, where operations leave a massive digital footprint, business events allow companies to become more adaptable and to recognize and respond to opportunities or threats as they occur. Teams want more visibility into events and easier access to them so they can reuse and build on the work of others.
