Apache Kafka and Apache Flink: An open-source match made in heaven

IBM Journey to AI blog

NOVEMBER 3, 2023

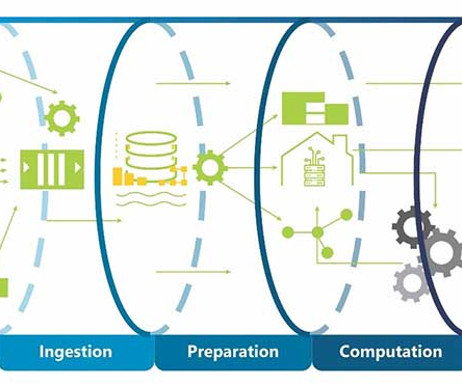

It allows your business to ingest continuous data streams as they happen and bring them to the forefront for analysis, enabling you to keep up with constant changes. Apache Kafka boasts many strong capabilities, such as delivering a high throughput and maintaining a high fault tolerance in the case of application failure.

Let's personalize your content