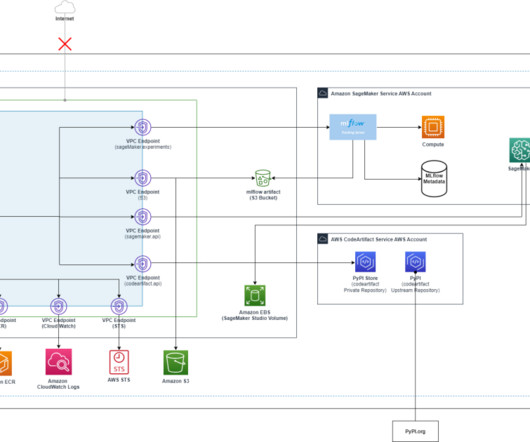

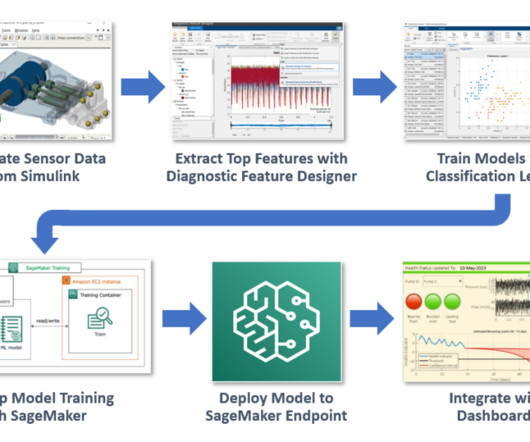

Building ML Model in AWS Sagemaker

Analytics Vidhya

FEBRUARY 21, 2022

Introduction

Artificial intelligence and machine learning are among the most exciting and disruptive areas of the current era. The post Building ML Model in AWS Sagemaker appeared first on Analytics Vidhya. This article was published as a part of the Data Science Blogathon.
