Introducing Databricks Fleet Clusters for AWS

databricks

MAY 10, 2023

We're excited to announce the general availability of Databricks Fleet clusters on AWS. What are Fleet clusters? Databricks Fleet clusters unlock the potential.

AWS Machine Learning Blog

DECEMBER 24, 2024

The process of setting up and configuring a distributed training environment can be complex, requiring expertise in server management, cluster configuration, networking and distributed computing. To simplify infrastructure setup and accelerate distributed training, AWS introduced Amazon SageMaker HyperPod in late 2023.

AWS Machine Learning Blog

NOVEMBER 26, 2024

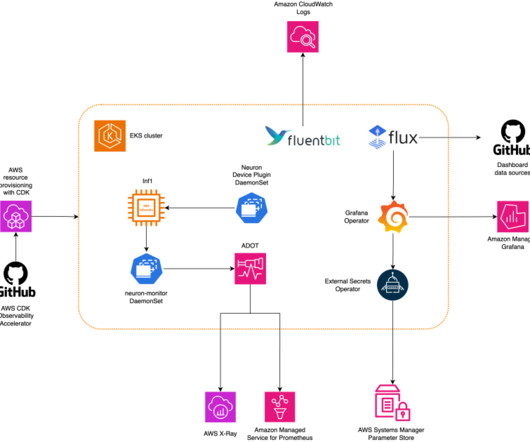

AWS Trainium and AWS Inferentia based instances, combined with Amazon Elastic Kubernetes Service (Amazon EKS), provide a performant and low cost framework to run LLMs efficiently in a containerized environment. Solution overview The steps to implement the solution are as follows: Create the EKS cluster.

AWS Machine Learning Blog

NOVEMBER 19, 2024

In 2018, I sat in the audience at AWS re:Invent as Andy Jassy announced AWS DeepRacer, a fully autonomous 1/18th scale race car driven by reinforcement learning. But AWS DeepRacer instantly captured my interest with its promise that even inexperienced developers could get involved in AI and ML.

AWS Machine Learning Blog

NOVEMBER 19, 2024

The excitement is building for the fourteenth edition of AWS re:Invent, and as always, Las Vegas is set to host this spectacular event. Third, we’ll explore the robust infrastructure services from AWS powering AI innovation, featuring Amazon SageMaker, AWS Trainium, and AWS Inferentia under AI/ML, as well as Compute topics.

AWS Machine Learning Blog

NOVEMBER 13, 2024

Prerequisites To implement the proposed solution, make sure that you have the following: An AWS account and a working knowledge of FMs, Amazon Bedrock, Amazon SageMaker, Amazon OpenSearch Service, Amazon S3, and AWS Identity and Access Management (IAM). Amazon Titan Multimodal Embeddings model access in Amazon Bedrock.

NOVEMBER 27, 2024

To implement this solution, complete the following steps: Set up Zero-ETL integration from the AWS Management Console for Amazon Relational Database Service (Amazon RDS). An AWS Identity and Access Management (IAM) user with sufficient permissions to interact with the AWS Management Console and related AWS services.

AWS Machine Learning Blog

NOVEMBER 25, 2024

8B and 70B inference support on AWS Trainium and AWS Inferentia instances in Amazon SageMaker JumpStart. Trainium and Inferentia, enabled by the AWS Neuron software development kit (SDK), offer high performance and lower the cost of deploying Meta Llama 3.1 An AWS Identity and Access Management (IAM) role to access SageMaker.

AWS Machine Learning Blog

FEBRUARY 26, 2025

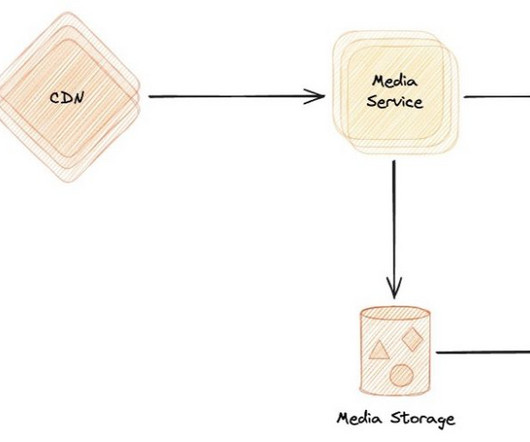

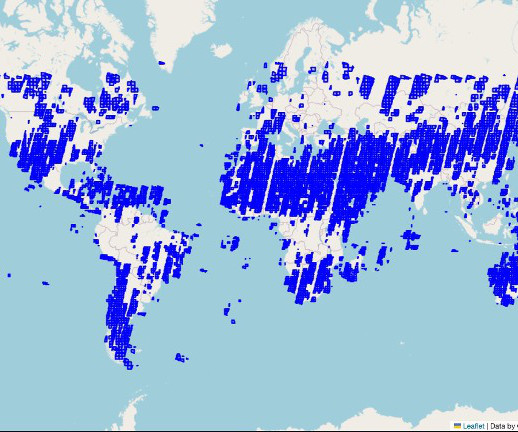

At ByteDance, we collaborated with Amazon Web Services (AWS) to deploy multimodal large language models (LLMs) for video understanding using AWS Inferentia2 across multiple AWS Regions around the world. Solution overview We’ve collaborated with AWS since the first generation of Inferentia chips.

AWS Machine Learning Blog

MARCH 10, 2025

We walk through the journey Octus took from managing multiple cloud providers and costly GPU instances to implementing a streamlined, cost-effective solution using AWS services including Amazon Bedrock, AWS Fargate, and Amazon OpenSearch Service. Along the way, it also simplified operations as Octus is an AWS shop more generally.

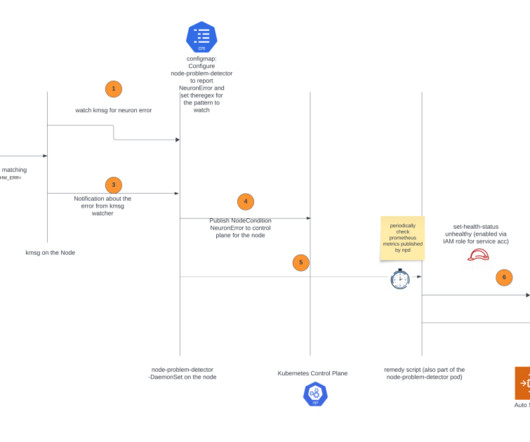

AWS Machine Learning Blog

JULY 25, 2024

In the post, we introduce the AWS Neuron node problem detector and recovery DaemonSet for AWS Trainium and AWS Inferentia on Amazon Elastic Kubernetes Service (Amazon EKS). eks-5e0fdde Install the required AWS Identity and Access Management (IAM) role for the service account and the node problem detector plugin.

AWS Machine Learning Blog

APRIL 17, 2024

Despite the availability of advanced distributed training libraries, it’s common for training and inference jobs to need hundreds of accelerators (GPUs or purpose-built ML chips such as AWS Trainium and AWS Inferentia), and therefore tens or hundreds of instances. or later NPM version 10.0.0

AWS Machine Learning Blog

OCTOBER 24, 2024

Prerequisites Before you begin, make sure you have the following prerequisites in place: An AWS account and role with the AWS Identity and Access Management (IAM) privileges to deploy the following resources: IAM roles. For this post we’ll use a provisioned Amazon Redshift cluster. A SageMaker domain. Database name : Enter dev.

AWS Machine Learning Blog

FEBRUARY 21, 2025

Communication between the two systems was established through Kerberized Apache Livy (HTTPS) connections over AWS PrivateLink. Responsibility for maintenance and troubleshooting: Rocket’s DevOps/Technology team was responsible for all upgrades, scaling, and troubleshooting of the Hadoop cluster, which was installed on bare EC2 instances.

AWS Machine Learning Blog

OCTOBER 16, 2024

Although setting up a processing cluster is an alternative, it introduces its own set of complexities, from data distribution to infrastructure management. We use the purpose-built geospatial container with SageMaker Processing jobs for a simplified, managed experience to create and run a cluster. format("/".join(tile_prefix),

AWS Machine Learning Blog

MARCH 3, 2025

These recipes include a training stack validated by Amazon Web Services (AWS), which removes the tedious work of experimenting with different model configurations, minimizing the time it takes for iterative evaluation and testing. The launcher will interface with your cluster with Slurm or Kubernetes native constructs.

AWS Machine Learning Blog

JUNE 11, 2024

Starting with the AWS Neuron 2.18 release, you can now launch Neuron DLAMIs (AWS Deep Learning AMIs) and Neuron DLCs (AWS Deep Learning Containers) with the latest released Neuron packages on the same day as the Neuron SDK release. AWS DLCs provide a set of Docker images that are pre-installed with deep learning frameworks.

AWS Machine Learning Blog

FEBRUARY 13, 2025

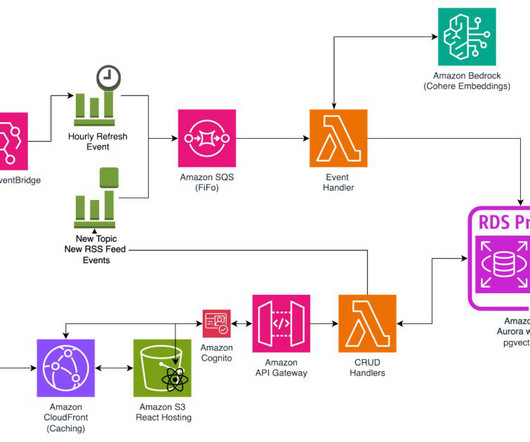

Amazon Bedrock offers a serverless experience, so you can get started quickly, privately customize FMs with your own data, and integrate and deploy them into your applications using Amazon Web Services (AWS) services without having to manage infrastructure. AWS Lambda The API is a Fastify application written in TypeScript.

AWS Machine Learning Blog

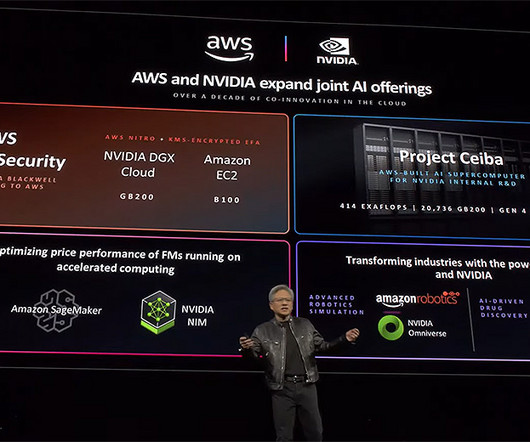

APRIL 11, 2024

AWS was delighted to present to and connect with over 18,000 in-person and 267,000 virtual attendees at NVIDIA GTC, a global artificial intelligence (AI) conference that took place March 2024 in San Jose, California, returning to a hybrid, in-person experience for the first time since 2019.

AWS Machine Learning Blog

SEPTEMBER 18, 2024

The compute clusters used in these scenarios are composed of thousands of AI accelerators such as GPUs or AWS Trainium and AWS Inferentia, custom machine learning (ML) chips designed by Amazon Web Services (AWS) to accelerate deep learning workloads in the cloud.

AWS Machine Learning Blog

JULY 31, 2024

Amazon Web Services (AWS) is committed to supporting the development of cutting-edge generative artificial intelligence (AI) technologies by companies and organizations across the globe. Let’s dive in and explore how these organizations are transforming what’s possible with generative AI on AWS.

AWS Machine Learning Blog

APRIL 22, 2024

Amazon SageMaker HyperPod is purpose-built to accelerate foundation model (FM) training, removing the undifferentiated heavy lifting involved in managing and optimizing a large training compute cluster. In this solution, HyperPod cluster instances use the LDAPS protocol to connect to the AWS Managed Microsoft AD via an NLB.

APRIL 13, 2023

At AWS, we have played a key role in democratizing ML and making it accessible to anyone who wants to use it, including more than 100,000 customers of all sizes and industries. AWS has the broadest and deepest portfolio of AI and ML services at all three layers of the stack. Today’s FMs, such as the large language models (LLMs) GPT3.5

AWS Machine Learning Blog

NOVEMBER 27, 2024

Launching a machine learning (ML) training cluster with Amazon SageMaker training jobs is a seamless process that begins with a straightforward API call, AWS Command Line Interface (AWS CLI) command, or AWS SDK interaction. Surya Kari is a Senior Generative AI Data Scientist at AWS.

Smart Data Collective

FEBRUARY 20, 2022

AWS (Amazon Web Services), the comprehensive and evolving cloud computing platform provided by Amazon, is comprised of infrastructure as a service (IaaS), platform as a service (PaaS) and packaged software as a service (SaaS). With its wide array of tools and convenience, AWS has already become a popular choice for many SaaS companies.

AWS Machine Learning Blog

DECEMBER 4, 2024

Orchestrate with Tecton-managed EMR clusters – After features are deployed, Tecton automatically creates the scheduling, provisioning, and orchestration needed for pipelines that can run on Amazon EMR compute engines. You can view and create EMR clusters directly through the SageMaker notebook.

AWS Machine Learning Blog

SEPTEMBER 4, 2024

A challenge for DevOps engineers is the additional complexity that comes from using Kubernetes to manage the deployment stage while resorting to other tools (such as the AWS SDK or AWS CloudFormation) to manage the model building pipeline. kubectl for working with Kubernetes clusters. eksctl for working with EKS clusters.

AWS Machine Learning Blog

APRIL 4, 2025

Integration with existing systems on AWS: Lumi seamlessly integrated SageMaker Asynchronous Inference endpoints with their existing loan processing pipeline. Using Databricks on AWS for model training, they built a pipeline to host the model in SageMaker AI, optimizing data flow and results retrieval. Follow him on LinkedIn.

AWS Machine Learning Blog

MAY 1, 2024

Llama2 by Meta is an example of an LLM offered by AWS. To learn more about Llama 2 on AWS, refer to Llama 2 foundation models from Meta are now available in Amazon SageMaker JumpStart. Virginia) and US West (Oregon) AWS Regions, and most recently announced general availability in the US East (Ohio) Region.

AWS Machine Learning Blog

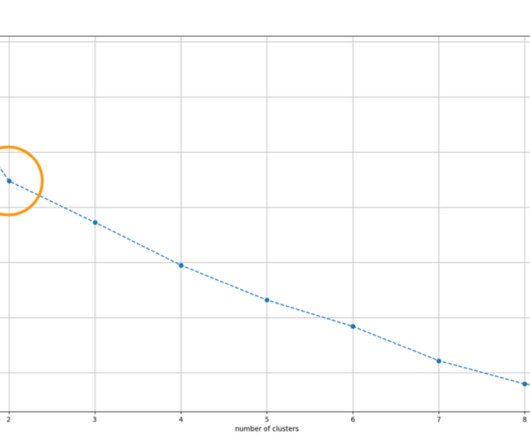

APRIL 4, 2023

AWS provides various services catered to time series data that are low code/no code, which both machine learning (ML) and non-ML practitioners can use for building ML solutions. In this post, we seek to separate a time series dataset into individual clusters that exhibit a higher degree of similarity between its data points and reduce noise.

AWS Machine Learning Blog

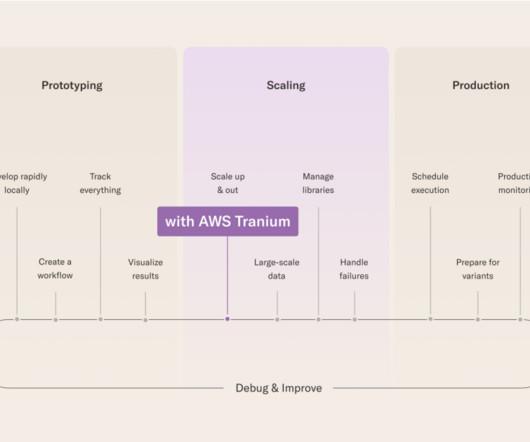

APRIL 29, 2024

For AWS and Outerbounds customers, the goal is to build a differentiated machine learning and artificial intelligence (ML/AI) system and reliably improve it over time. First, the AWS Trainium accelerator provides a high-performance, cost-effective, and readily available solution for training and fine-tuning large models.

Smart Data Collective

NOVEMBER 7, 2022

We shared a blog post on seven well-known companies that shifted to the cloud, but many small businesses are using cloud computing as well. One of the best known options is Amazon Web Services (AWS). What is Amazon Web Services (AWS)? AWS is a collection of remote computing services (or web services). AWS Lambda.

AWS Machine Learning Blog

JANUARY 24, 2024

We demonstrate how to build an end-to-end RAG application using Cohere’s language models through Amazon Bedrock and a Weaviate vector database on AWS Marketplace. Additionally, you can securely integrate and easily deploy your generative AI applications using the AWS tools you are already familiar with.

AWS Machine Learning Blog

JUNE 25, 2024

Amazon Web Services is excited to announce the launch of the AWS Neuron Monitor container, an innovative tool designed to enhance the monitoring capabilities of AWS Inferentia and AWS Trainium chips on Amazon Elastic Kubernetes Service (Amazon EKS). The Container Insights dashboard also shows cluster status and alarms.

AWS Machine Learning Blog

NOVEMBER 20, 2024

In this post, we explore how you can use Amazon Q Business, the AWS generative AI-powered assistant, to build a centralized knowledge base for your organization, unifying structured and unstructured datasets from different sources to accelerate decision-making and drive productivity. Choose Create database. aligned identity provider (IdP).

AWS Machine Learning Blog

MAY 31, 2023

With containers, scaling on a cluster becomes much easier. In late 2022, AWS announced the general availability of Amazon EC2 Trn1 instances powered by AWS Trainium accelerators, which are purpose built for high-performance deep learning training. What you get is an ML development environment that is consistent and portable.

AWS Machine Learning Blog

JANUARY 30, 2025

You can now use DeepSeek-R1 to build, experiment, and responsibly scale your generative AI ideas on AWS. The MoE architecture allows activation of 37 billion parameters, enabling efficient inference by routing queries to the most relevant expert clusters. 48xlarge instance in the AWS Region you are deploying.

AWS Machine Learning Blog

NOVEMBER 14, 2024

We recently announced the general availability of cross-account sharing of Amazon SageMaker Model Registry using AWS Resource Access Manager (AWS RAM), making it easier to securely share and discover machine learning (ML) models across your AWS accounts.

AWS Machine Learning Blog

APRIL 29, 2024

Close collaboration with AWS Trainium has also played a major role in making the Arcee platform extremely performant, not only accelerating model training but also reducing overall costs and enforcing compliance and data integrity in the secure AWS environment. Our cluster consisted of 16 nodes, each equipped with a trn1n.32xlarge

AWS Machine Learning Blog

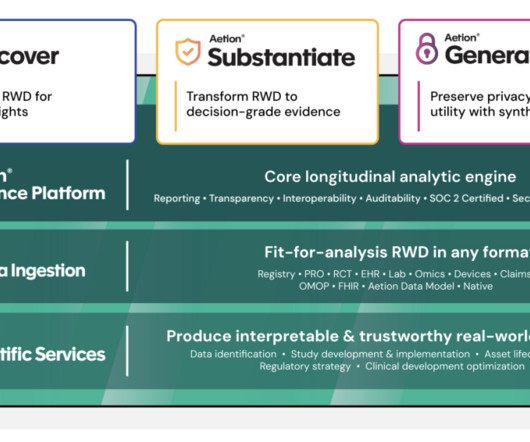

JANUARY 30, 2025

Smart Subgroups For a user-specified patient population, the Smart Subgroups feature identifies clusters of patients with similar characteristics (for example, similar prevalence profiles of diagnoses, procedures, and therapies). The features are stored in Amazon S3 and encrypted with AWS Key Management Service (AWS KMS) for downstream use.

AWS Machine Learning Blog

MAY 30, 2024

Because Amazon Bedrock is serverless, you don’t have to manage infrastructure, and you can securely integrate and deploy generative AI capabilities into your applications using the AWS services you are already familiar with. AWS Prototyping developed an AWS Cloud Development Kit (AWS CDK) stack for deployment following AWS best practices.

IBM Journey to AI blog

OCTOBER 26, 2023

In this journey, we are seeing increased interest in migrating and deploying MAS on AWS Cloud. The growing need for Maximo migration to AWS Cloud: Migrating to the cloud helps organizations improve operational resiliency and reliability while keeping software up to date with minimal upgrade effort and infrastructure constraints.

AWS Machine Learning Blog

JUNE 27, 2024

In this post, we describe how we built our cutting-edge productivity agent NinjaLLM, the backbone of MyNinja.ai, using AWS Trainium chips. For training, we chose to use a cluster of trn1.32xlarge instances to take advantage of Trainium chips. We used a cluster of 32 instances in order to efficiently parallelize the training.

AWS Machine Learning Blog

OCTOBER 5, 2023

In this post, we walk through how to fine-tune Llama 2 on AWS Trainium, a purpose-built accelerator for LLM training, to reduce training times and costs. We review the fine-tuning scripts provided by the AWS Neuron SDK (using NeMo Megatron-LM), the various configurations we used, and the throughput results we saw.
