Build a Serverless News Data Pipeline using ML on AWS Cloud

KDnuggets

NOVEMBER 18, 2021

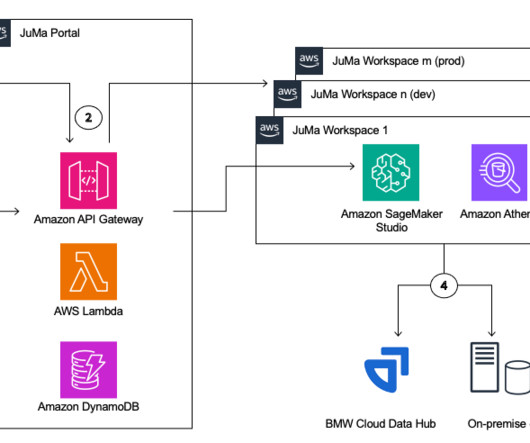

This is a guide on how to build a serverless data pipeline on AWS with a machine learning model deployed as a SageMaker endpoint.

AWS Machine Learning Blog

NOVEMBER 26, 2024

AWS AI chips, Trainium and Inferentia, enable you to build and deploy generative AI models at higher performance and lower cost. Datadog, an observability and security platform, provides real-time monitoring for cloud infrastructure and ML operations. To get started, see AWS Inferentia and AWS Trainium Monitoring.

AWS Machine Learning Blog

OCTOBER 24, 2024

Machine learning (ML) helps organizations to increase revenue, drive business growth, and reduce costs by optimizing core business functions such as supply and demand forecasting, customer churn prediction, credit risk scoring, pricing, predicting late shipments, and many others. Let’s learn about the services we will use to make this happen.

How to Learn Machine Learning

DECEMBER 24, 2024

If you’re diving into the world of machine learning, AWS Machine Learning provides a robust and accessible platform to turn your data science dreams into reality. Whether you’re a solo developer or part of a large enterprise, AWS provides scalable solutions that grow with your needs. Hey dear reader!

AWS Machine Learning Blog

DECEMBER 4, 2024

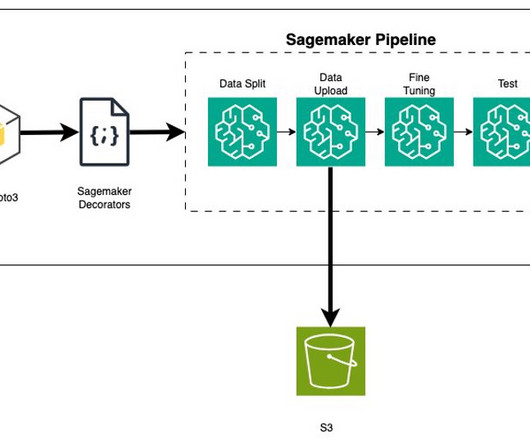

Businesses are under pressure to show return on investment (ROI) from AI use cases, whether predictive machine learning (ML) or generative AI. Only 54% of ML prototypes make it to production, and only 5% of generative AI use cases make it to production. Using SageMaker, you can build, train and deploy ML models.

NOVEMBER 24, 2023

With that, the need for data scientists and machine learning (ML) engineers has grown significantly. Data scientists and ML engineers require capable tooling and sufficient compute for their work.

Data Science Dojo

FEBRUARY 20, 2023

Machine learning (ML) is the technology that automates tasks and provides insights. It allows data scientists to build models that can automate specific tasks. It comes in many forms, with a range of tools and platforms designed to make working with ML more efficient. It also has ML algorithms built into the platform.

AWS Machine Learning Blog

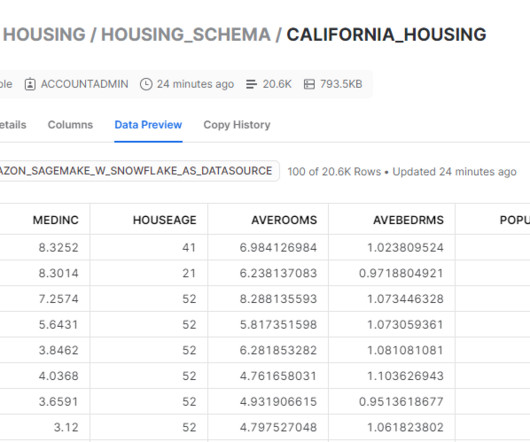

MARCH 8, 2023

Amazon SageMaker is a fully managed machine learning (ML) service. With SageMaker, data scientists and developers can quickly and easily build and train ML models, and then directly deploy them into a production-ready hosted environment. We add this data to Snowflake as a new table.

AUGUST 17, 2023

Amazon Redshift is the most popular cloud data warehouse that is used by tens of thousands of customers to analyze exabytes of data every day. SageMaker Studio is the first fully integrated development environment (IDE) for ML. To do this, we provide an AWS CloudFormation template to create a stack that contains the resources.

AWS Machine Learning Blog

MARCH 1, 2023

Statistical methods and machine learning (ML) methods are actively developed and adopted to maximize the LTV. In this post, we share how Kakao Games and the Amazon Machine Learning Solutions Lab teamed up to build a scalable and reliable LTV prediction solution by using AWS data and ML services such as AWS Glue and Amazon SageMaker.

AWS Machine Learning Blog

FEBRUARY 21, 2025

Let’s assume that the question is "What date will AWS re:Invent 2024 occur?" and the corresponding answer is input as "AWS re:Invent 2024 takes place on December 2–6, 2024." If the question was "What’s the schedule for AWS events in December?", … This setup uses the AWS SDK for Python (Boto3) to interact with AWS services.

AWS Machine Learning Blog

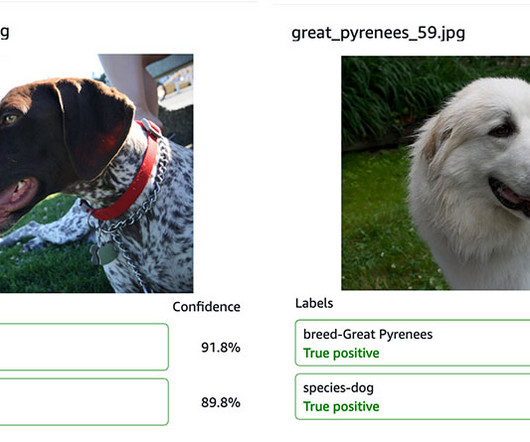

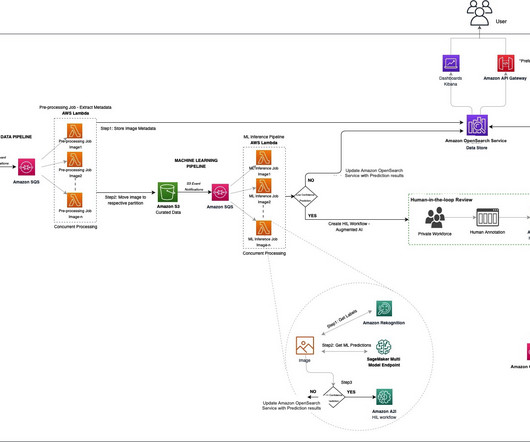

OCTOBER 18, 2023

Purina used artificial intelligence (AI) and machine learning (ML) to automate animal breed detection at scale. The solution focuses on the fundamental principles of developing an AI/ML application workflow of data preparation, model training, model evaluation, and model monitoring. DynamoDB is used to store the pet attributes.

The MLOps Blog

MAY 17, 2023

From data processing to quick insights, robust pipelines are a must for any ML system. Often the Data Team, comprising Data and ML Engineers, needs to build this infrastructure, and this experience can be painful. However, efficient use of ETL pipelines in ML can help make their life much easier.

IBM Journey to AI blog

MAY 15, 2024

Data engineers build data pipelines, which are called data integration tasks or jobs, as incremental steps to perform data operations and orchestrate these data pipelines in an overall workflow. Organizations can harness the full potential of their data while reducing risk and lowering costs.

AWS Machine Learning Blog

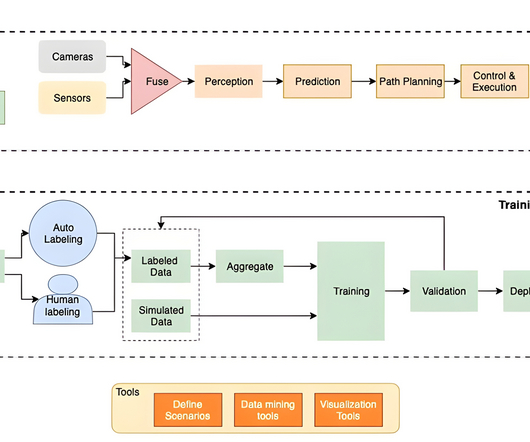

FEBRUARY 23, 2023

Identification of relevant representation data from a huge volume of data – This is essential to reduce biases in the datasets so that common scenarios (driving at normal speed with obstruction) don’t create class imbalance. To yield better accuracy, DNNs require large volumes of diverse, good quality data.

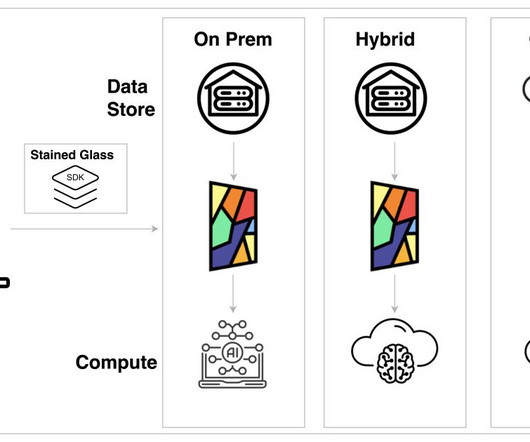

Towards AI

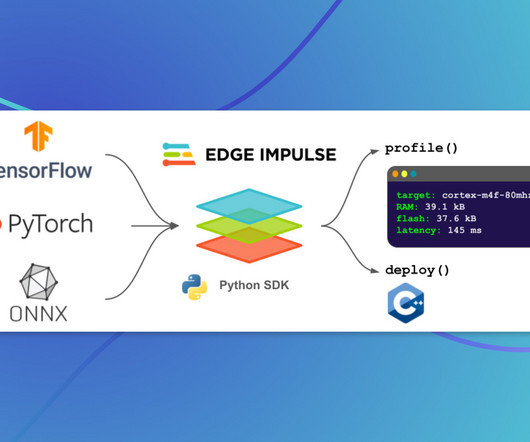

APRIL 4, 2023

Last updated on April 4, 2023 by Editorial Team. Introducing a Python SDK that allows enterprises to effortlessly optimize their ML models for edge devices. With their groundbreaking web-based Studio platform, engineers have been able to collect data, develop and tune ML models, and deploy them to devices.

Mlearning.ai

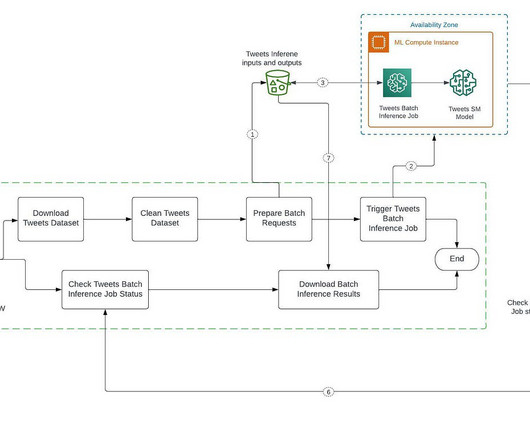

APRIL 6, 2023

Automate and streamline our ML inference pipeline with SageMaker and Airflow. Building an inference data pipeline on large datasets is a challenge many companies face. The Batch job automatically launches an ML compute instance, deploys the model, and processes the input data in batches, producing the output predictions.

Pickl AI

DECEMBER 26, 2024

Each platform offers unique capabilities tailored to varying needs, making the platform a critical decision for any Data Science project. Major Cloud Platforms for Data Science: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) dominate the cloud market with their comprehensive offerings.

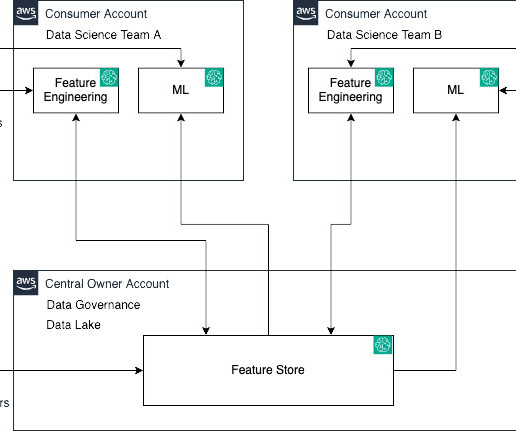

AWS Machine Learning Blog

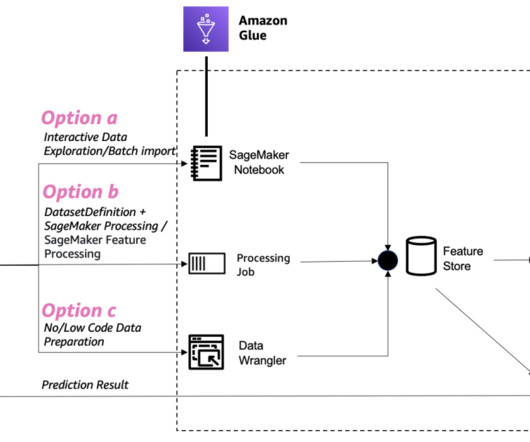

FEBRUARY 13, 2024

Amazon SageMaker Feature Store is a fully managed, purpose-built repository to store, share, and manage features for machine learning (ML) models. Features are inputs to ML models used during training and inference. SageMaker Feature Store now makes it effortless to share, discover, and access feature groups across AWS accounts.

Precisely

APRIL 11, 2024

In an era where cloud technology is not just an option but a necessity for competitive business operations, the collaboration between Precisely and Amazon Web Services (AWS) has set a new benchmark for mainframe and IBM i modernization. Solution page: Precisely on Amazon Web Services (AWS). Precisely brings data integrity to the AWS cloud.

AWS Machine Learning Blog

FEBRUARY 1, 2024

Consider the following picture, which is an AWS view of the a16z emerging application stack for large language models (LLMs). This pipeline could be a batch pipeline if you prepare contextual data in advance, or a low-latency pipeline if you’re incorporating new contextual data on the fly.

phData

AUGUST 6, 2024

As today’s world keeps progressing towards data-driven decisions, organizations must have quality data created from efficient and effective data pipelines. For customers in Snowflake, Snowpark is a powerful tool for building these effective and scalable data pipelines.

AWS Machine Learning Blog

FEBRUARY 24, 2023

AWS recently released Amazon SageMaker geospatial capabilities to provide you with satellite imagery and geospatial state-of-the-art machine learning (ML) models, reducing barriers for these types of use cases. For more information, refer to Preview: Use Amazon SageMaker to Build, Train, and Deploy ML Models Using Geospatial Data.

AWS Machine Learning Blog

JUNE 18, 2024

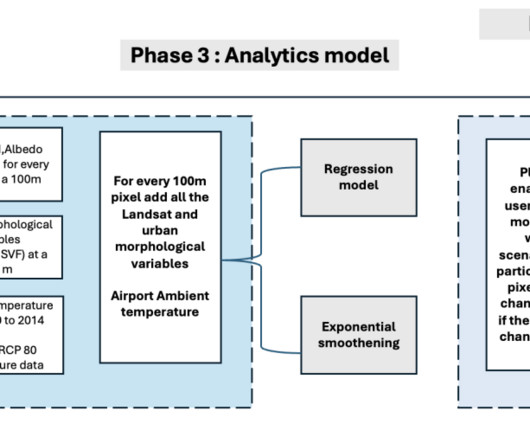

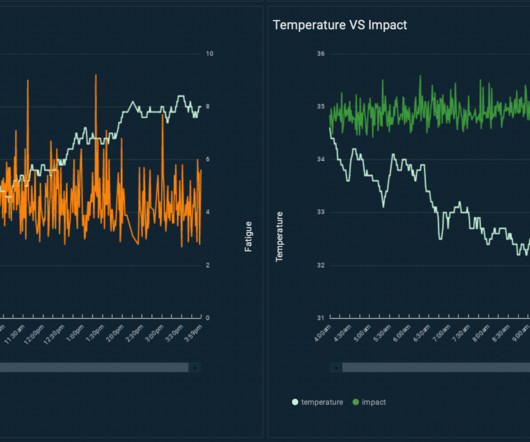

On December 6th-8th, 2023, the non-profit organization Tech to the Rescue, in collaboration with AWS, organized the world’s largest Air Quality Hackathon – aimed at tackling one of the world’s most pressing health and environmental challenges, air pollution. As always, AWS welcomes your feedback.

AWS Machine Learning Blog

AUGUST 22, 2024

This makes managing and deploying these updates across a large-scale deployment pipeline while providing consistency and minimizing downtime a significant undertaking. Generative AI applications require continuous ingestion, preprocessing, and formatting of vast amounts of data from various sources.

AWS Machine Learning Blog

FEBRUARY 5, 2025

The following diagram illustrates the data pipeline for indexing and query in the foundational search architecture. The listing indexer AWS Lambda function continuously polls the queue and processes incoming listing updates.

AWS Machine Learning Blog

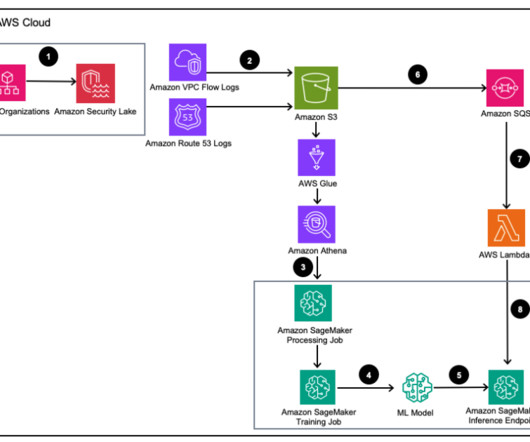

DECEMBER 20, 2023

Whether logs are coming from Amazon Web Services (AWS), other cloud providers, on-premises, or edge devices, customers need to centralize and standardize security data. After the security log data is stored in Amazon Security Lake, the question becomes how to analyze it.

AWS Machine Learning Blog

DECEMBER 15, 2023

We are excited to announce the launch of Amazon DocumentDB (with MongoDB compatibility) integration with Amazon SageMaker Canvas, allowing Amazon DocumentDB customers to build and use generative AI and machine learning (ML) solutions without writing code. Choose Add connection. Drag your table of choice to the blank canvas.

AWS Machine Learning Blog

MARCH 15, 2024

This approach can help heart stroke patients, doctors, and researchers with faster diagnosis, enriched decision-making, and more informed, inclusive research work on stroke-related health issues, using a cloud-native approach with AWS services for lightweight lift and straightforward adoption. Stroke victims can lose around 1.9

AWS Machine Learning Blog

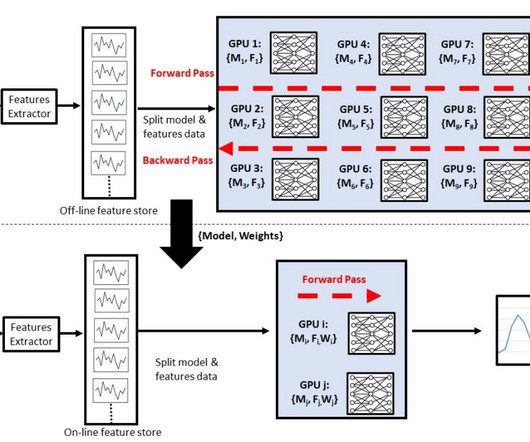

SEPTEMBER 18, 2023

Machine learning (ML) is becoming increasingly complex as customers try to solve more and more challenging problems. This complexity often leads to the need for distributed ML, where multiple machines are used to train a single model. SageMaker is a fully managed service for building, training, and deploying ML models.

AWS Machine Learning Blog

MARCH 14, 2024

In this post we highlight how the AWS Generative AI Innovation Center collaborated with the AWS Professional Services and PGA TOUR to develop a prototype virtual assistant using Amazon Bedrock that could enable fans to extract information about any event, player, hole or shot level details in a seamless interactive manner.

AWS Machine Learning Blog

APRIL 5, 2024

SageMaker geospatial capabilities make it straightforward for data scientists and machine learning (ML) engineers to build, train, and deploy models using geospatial data. Janosch Woschitz is a Senior Solutions Architect at AWS, specializing in AI/ML. Outside work, he is a travel enthusiast.

Towards AI

OCTOBER 4, 2023

Advanced-Data Engineering and ML Ops with Infrastructure as Code. This story explains how to create and orchestrate machine learning pipelines with AWS Step Functions and deploy them using Infrastructure as Code.

AWS Machine Learning Blog

APRIL 19, 2023

Since 2018, our team has been developing a variety of ML models to enable betting products for NFL and NCAA football. These models are then pushed to an Amazon Simple Storage Service (Amazon S3) bucket using DVC, a version control tool for ML models. Business requirements: We are the US squad of the Sportradar AI department.

The MLOps Blog

JUNE 27, 2023

For example, if you use AWS, you may prefer Amazon SageMaker as an MLOps platform that integrates with other AWS services. Knowledge and skills in the organization: Evaluate the level of expertise and experience of your ML team and choose a tool that matches their skill set and learning curve.

AWS Machine Learning Blog

FEBRUARY 1, 2024

To unlock the potential of generative AI technologies, however, there’s a key prerequisite: your data needs to be appropriately prepared. In this post, we describe how to use generative AI to update and scale your data pipeline using Amazon SageMaker Canvas for data prep.

AWS Machine Learning Blog

DECEMBER 5, 2023

AWS is especially well suited to provide enterprises the tools necessary for deploying LLMs at scale to enable critical decision-making. In their implementation of generative AI technology, enterprises have real concerns about data exposure and ownership of confidential information that may be sent to LLMs.

AWS Machine Learning Blog

APRIL 12, 2023

In this post, we describe how AWS Partner Airis Solutions used Amazon Lookout for Equipment, AWS Internet of Things (IoT) services, and CloudRail sensor technologies to provide a state-of-the-art solution to address these challenges. It’s an easy way to run analytics on IoT data to gain accurate insights.

AWS Machine Learning Blog

AUGUST 8, 2024

As one of the largest AWS customers, Twilio engages with data, artificial intelligence (AI), and machine learning (ML) services to run their daily workloads. Data is the foundational layer for all generative AI and ML applications. Access to Amazon Bedrock FMs isn’t granted by default.

The MLOps Blog

MARCH 20, 2023

A long-term ML project involves developing and sustaining applications or systems that leverage machine learning models, algorithms, and techniques. An example of a long-term ML project is a bank fraud detection system powered by ML models and algorithms for pattern recognition. 2. Ensuring and maintaining high-quality data.

AWS Machine Learning Blog

AUGUST 21, 2024

By walking through this specific implementation, we aim to showcase how you can adapt batch inference to suit various data processing needs, regardless of the data source or nature. Prerequisites: To use the batch inference feature, make sure you have satisfied the following requirements: An active AWS account.

AWS Machine Learning Blog

SEPTEMBER 11, 2024

These activities cover disparate fields such as basic data processing, analytics, and machine learning (ML). ML is often associated with PBAs, so we start this post with an illustrative figure. The ML paradigm is learning followed by inference. The union of advances in hardware and ML has led us to the current day.

Iguazio

JANUARY 16, 2024

Since AI is a central pillar of their value offering, Sense has invested heavily in a robust engineering organization including a large number of data and AI professionals. This includes a data team, an analytics team, DevOps, AI/ML, and a data science team. Gennaro Frazzingaro, Head of AI/ML at Sense.
