Reducing hallucinations in LLM agents with a verified semantic cache using Amazon Bedrock Knowledge Bases

AWS Machine Learning Blog

FEBRUARY 21, 2025

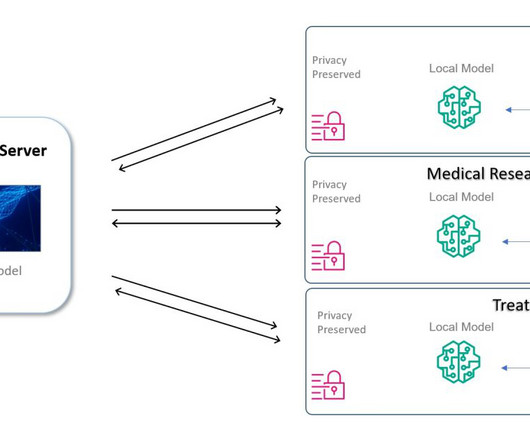

Lets assume that the question What date will AWS re:invent 2024 occur? The corresponding answer is also input as AWS re:Invent 2024 takes place on December 26, 2024. If the question was Whats the schedule for AWS events in December?, This setup uses the AWS SDK for Python (Boto3) to interact with AWS services.

Let's personalize your content