Build a Serverless News Data Pipeline using ML on AWS Cloud

KDnuggets

NOVEMBER 18, 2021

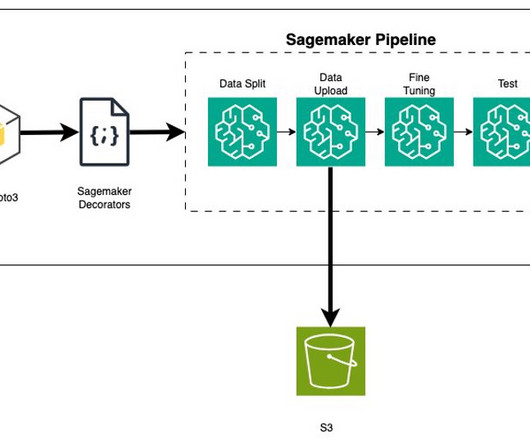

This is a guide to building a serverless data pipeline on AWS with a machine learning model deployed as a SageMaker endpoint.
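Here is a minimal sketch of the pipeline's inference step. It sends news text to a SageMaker endpoint through the `invoke_endpoint` call of a boto3 `sagemaker-runtime` client. The endpoint name, the `{"instances": [...]}` request body, and the JSON response are assumptions. The real payload format depends on the model container you deploy.

```python
import json


def classify_articles(runtime_client, endpoint_name, articles):
    """Send news texts to a SageMaker real-time endpoint and return the parsed reply.

    runtime_client: expected to be a boto3 "sagemaker-runtime" client.
    The {"instances": [...]} request schema is an assumption; use whatever
    format your model container actually accepts.
    """
    payload = json.dumps({"instances": articles})
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=payload,
    )
    # "Body" is a streaming object; read() returns the raw bytes of the reply.
    return json.loads(response["Body"].read())
```

Inside a Lambda function you would typically pass `boto3.client("sagemaker-runtime")` as `runtime_client`. Because the function accepts the client as a parameter, you can test it with a stand-in object and no AWS credentials.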

Analytics Vidhya

AUGUST 3, 2021

This article was published as a part of the Data Science Blogathon. Introduction: Apache Spark is a framework used in cluster computing environments. The post "Building a Data Pipeline with PySpark and AWS" appeared first on Analytics Vidhya.

Analytics Vidhya

APRIL 23, 2024

Apache Airflow offers a scalable and extensible solution for automating complex workflows and repetitive tasks, and for monitoring data pipelines. This article explores the intricacies of automating ETL pipelines using Apache Airflow on Amazon EC2.

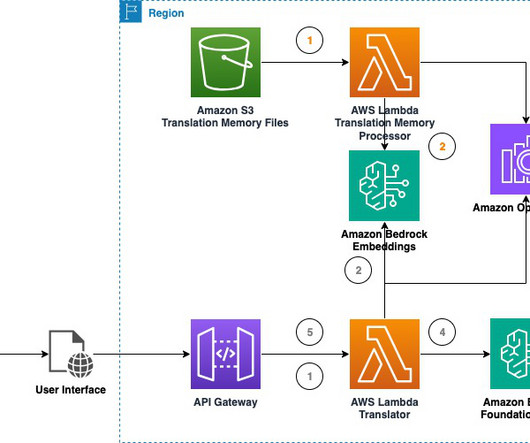

AWS Machine Learning Blog

JANUARY 7, 2025

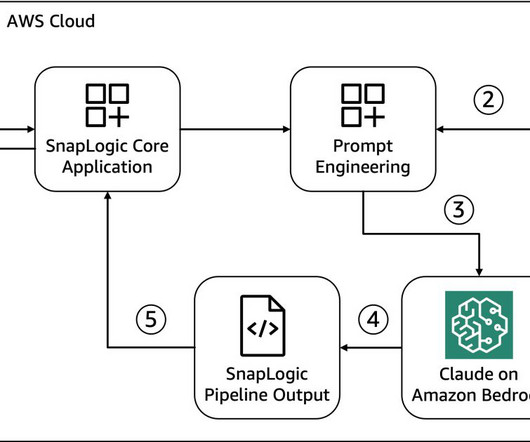

The translation playground could be adapted into a scalable serverless solution, as represented by the following diagram, using AWS Lambda, Amazon Simple Storage Service (Amazon S3), and Amazon API Gateway. To run the project code, make sure that you have fulfilled the AWS CDK prerequisites for Python.
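For the Lambda and API Gateway part of this design, a hypothetical handler for an API Gateway REST API with Lambda proxy integration could look like the sketch below. The `text` and `target_lang` request fields and the injected `translate` callable are illustrative assumptions, not the blog's code. Only the proxy-integration contract reflects the real service: the handler reads the request from `event["body"]` and returns `statusCode`, `headers`, and a string `body`.

```python
import json


def _response(status, payload):
    # Lambda proxy integration expects statusCode, headers, and a string body.
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def make_handler(translate):
    """Build a Lambda handler around a hypothetical translate(text, target_lang) callable."""

    def handler(event, context):
        try:
            # event["body"] is a JSON string (or None when the request has no body).
            request = json.loads(event.get("body") or "{}")
            text = request["text"]
            target = request["target_lang"]
        except (json.JSONDecodeError, KeyError, TypeError):
            return _response(400, {"error": "expected JSON with 'text' and 'target_lang'"})
        return _response(200, {"translation": translate(text, target)})

    return handler
```

Injecting `translate` keeps the HTTP plumbing separate from whichever model or service performs the translation.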

How to Learn Machine Learning

DECEMBER 24, 2024

If you’re diving into the world of machine learning, AWS Machine Learning provides a robust and accessible platform to turn your data science dreams into reality. Whether you’re a solo developer or part of a large enterprise, AWS provides scalable solutions that grow with your needs.

AWS Machine Learning Blog

FEBRUARY 21, 2025

Let's assume that the question is "What date will AWS re:Invent 2024 occur?" The corresponding answer is also input as "AWS re:Invent 2024 takes place on December 26, 2024." If the question was "What's the schedule for AWS events in December?", … This setup uses the AWS SDK for Python (Boto3) to interact with AWS services.

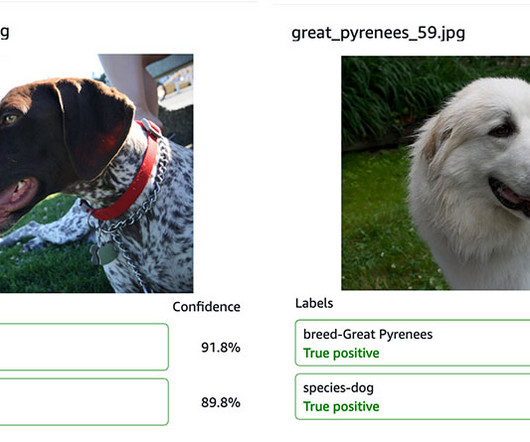

AWS Machine Learning Blog

OCTOBER 18, 2023

This post details how Purina used Amazon Rekognition Custom Labels, AWS Step Functions, and other AWS services to create an ML model that detects the pet breed from an uploaded image and then uses the prediction to auto-populate the pet attributes. AWS CodeBuild is a fully managed continuous integration service in the cloud.
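The "use the prediction to auto-populate attributes" step could work like this. The sketch takes a response shaped like the output of Rekognition's `DetectCustomLabels` (a `CustomLabels` list of `Name`/`Confidence` entries), keeps the most confident label above a threshold, and looks that label up in an attribute table. The label names, the 80% threshold, and `BREED_ATTRIBUTES` are hypothetical. This is not Purina's implementation.

```python
# Hypothetical attribute table; real values would come from a product or pet catalog.
BREED_ATTRIBUTES = {
    "labrador_retriever": {"species": "dog", "size": "large", "coat": "short"},
    "siamese": {"species": "cat", "size": "medium", "coat": "short"},
}


def attributes_from_labels(response, min_confidence=80.0):
    """Return pre-filled attributes for the most confident breed label, or None.

    `response` is assumed to follow the DetectCustomLabels shape:
    {"CustomLabels": [{"Name": ..., "Confidence": ...}, ...]}.
    None means the form should be left for the user to fill in manually.
    """
    candidates = [
        label
        for label in response.get("CustomLabels", [])
        if label["Confidence"] >= min_confidence
    ]
    if not candidates:
        return None
    best = max(candidates, key=lambda label: label["Confidence"])
    attributes = BREED_ATTRIBUTES.get(best["Name"])
    if attributes is None:
        return None  # model predicted a label the catalog does not know
    return {"breed": best["Name"], **attributes}
```

`DetectCustomLabels` also accepts a `MinConfidence` request parameter, so you could push the threshold filter to the service instead.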

Data Science Dojo

JULY 6, 2023

Data engineering tools are software applications or frameworks specifically designed to facilitate the process of managing, processing, and transforming large volumes of data. Amazon Redshift: Amazon Redshift is a cloud-based data warehousing service provided by Amazon Web Services (AWS).

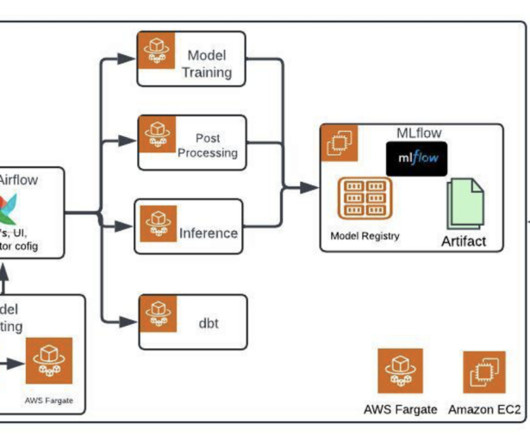

AWS Machine Learning Blog

SEPTEMBER 18, 2024

In addition to its groundbreaking AI innovations, Zeta Global has harnessed Amazon Elastic Container Service (Amazon ECS) with AWS Fargate to deploy a multitude of smaller models efficiently. Airflow for workflow orchestration: Airflow schedules and manages complex workflows, defining tasks and dependencies in Python code.

AWS Machine Learning Blog

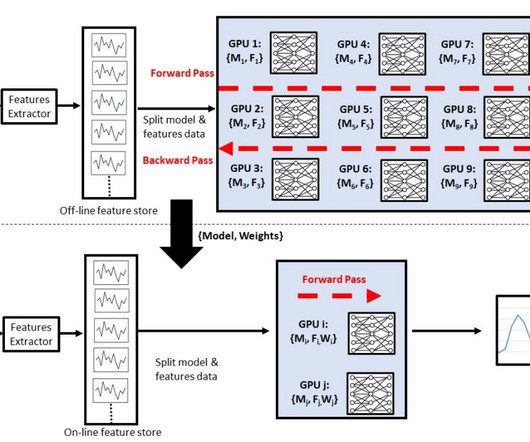

FEBRUARY 23, 2023

SageMaker has developed the distributed data parallel library, which splits data per node and optimizes the communication between the nodes. You can use the SageMaker Python SDK to trigger a job with data parallelism with minimal modifications to the training script. Each node has a copy of the DNN.
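The plain-Python simulation below illustrates what "splits data per node" means. It is a conceptual sketch, not the API of the SageMaker distributed data parallel library. Each simulated node keeps the same weight (its copy of the model) and computes a gradient on its own shard of the data. The gradients are then averaged, which is the job an all-reduce does across real nodes. The one-parameter linear model and the learning rate are assumptions chosen for brevity. With equal-sized shards, the averaged gradient equals the full-batch gradient.

```python
def shard(data, world_size, rank):
    """Give each node (rank) a disjoint, strided slice of the dataset."""
    return data[rank::world_size]


def local_gradient(w, batch):
    """Gradient of mean squared error for the model y = w * x on one node's shard."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_step(w, data, world_size, lr=0.01):
    """One synchronous step: every node starts from the same w, computes a local
    gradient on its shard, the gradients are averaged (the all-reduce), and every
    copy applies the identical update so the replicas stay in sync."""
    grads = [local_gradient(w, shard(data, world_size, r)) for r in range(world_size)]
    averaged = sum(grads) / world_size  # stands in for an all-reduce across nodes
    return w - lr * averaged
```

Averaging gradients, rather than weights, is what lets every replica apply the same update and remain identical after each step.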

AWS Machine Learning Blog

JUNE 18, 2024

On December 6-8, 2023, the non-profit organization Tech to the Rescue, in collaboration with AWS, organized the world’s largest Air Quality Hackathon, aimed at tackling one of the world’s most pressing health and environmental challenges: air pollution. This is done to optimize performance and minimize the cost of LLM invocation.

AWS Machine Learning Blog

MARCH 1, 2023

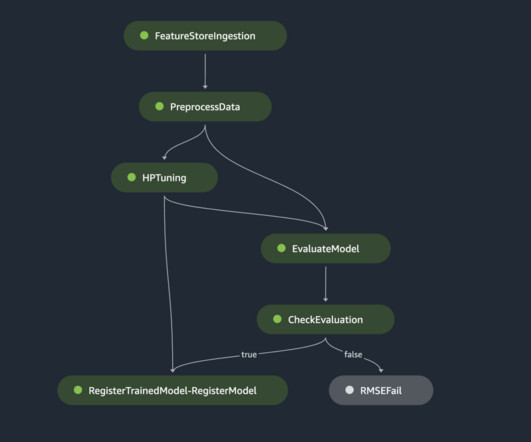

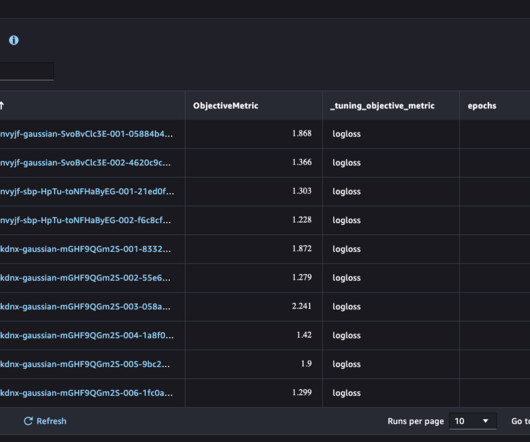

In this post, we share how Kakao Games and the Amazon Machine Learning Solutions Lab teamed up to build a scalable and reliable LTV prediction solution by using AWS data and ML services such as AWS Glue and Amazon SageMaker. The ETL pipeline, MLOps pipeline, and ML inference should be rebuilt in a different AWS account.

phData

AUGUST 6, 2024

As today’s world keeps progressing towards data-driven decisions, organizations must have quality data created from efficient and effective data pipelines. For customers in Snowflake, Snowpark is a powerful tool for building these effective and scalable data pipelines.

AWS Machine Learning Blog

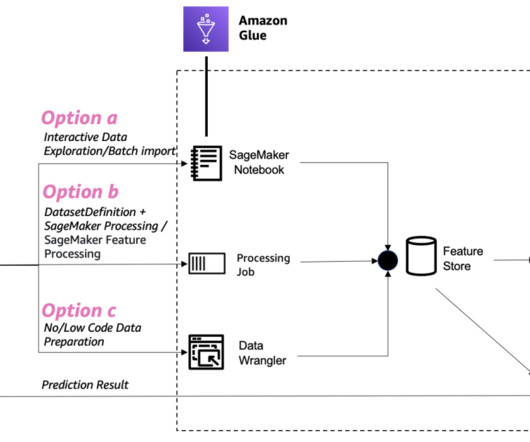

MARCH 8, 2023

In order to train a model using data stored outside of the three supported storage services, the data first needs to be ingested into one of these services (typically Amazon S3). This requires building a data pipeline (using tools such as Amazon SageMaker Data Wrangler) to move data into Amazon S3.

The MLOps Blog

MAY 17, 2023

We also discuss different types of ETL pipelines for ML use cases and provide real-world examples of their use to help data engineers choose the right one. What is an ETL data pipeline in ML? It is common to use "ETL data pipeline" and "data pipeline" interchangeably.
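The toy illustration below walks through the extract, transform, and load stages using only the standard library and placeholder rows. It parses CSV text, cleans and type-casts the records, and loads them into an in-memory SQLite table that a downstream training job could query. The column names and cleaning rules are hypothetical.

```python
import csv
import io
import sqlite3

# Placeholder input standing in for raw exported data.
RAW_CSV = """article_id,headline,word_count
1,  Headline one ,420
2,Headline two,
3,Headline three,310
"""


def extract(text):
    """Extract: parse raw CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop incomplete rows, trim whitespace, and cast types."""
    cleaned = []
    for row in rows:
        if not row["word_count"].strip():
            continue  # a production pipeline might quarantine these instead
        cleaned.append(
            (int(row["article_id"]), row["headline"].strip(), int(row["word_count"]))
        )
    return cleaned


def load(records, conn):
    """Load: write cleaned records into a table for downstream consumers."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS articles "
        "(id INTEGER PRIMARY KEY, headline TEXT, word_count INTEGER)"
    )
    conn.executemany("INSERT INTO articles VALUES (?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
```

Each stage is a separate function, so you can test each one in isolation or swap it out (for example, loading into a warehouse instead of SQLite).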

AWS Machine Learning Blog

AUGUST 22, 2024

This makes managing and deploying these updates across a large-scale deployment pipeline while providing consistency and minimizing downtime a significant undertaking. Generative AI applications require continuous ingestion, preprocessing, and formatting of vast amounts of data from various sources.

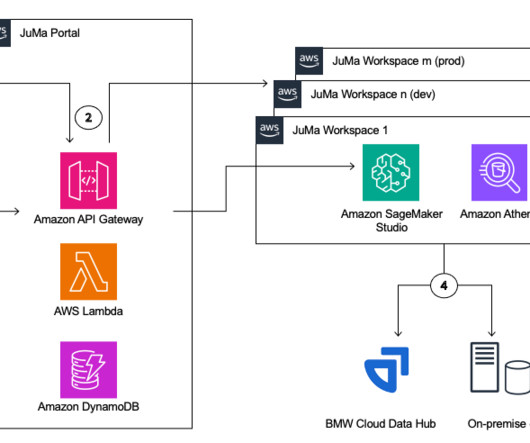

NOVEMBER 24, 2023

In this post, we will talk about how BMW Group, in collaboration with AWS Professional Services, built its Jupyter Managed (JuMa) service to address these challenges. It is powered by Amazon SageMaker Studio and provides JupyterLab for Python and Posit Workbench for R.

Mlearning.ai

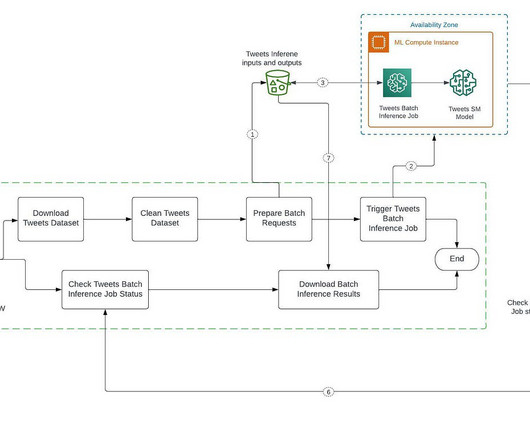

APRIL 6, 2023

Automate and streamline our ML inference pipeline with SageMaker and Airflow: Building an inference data pipeline on large datasets is a challenge many companies face. We use DAGs (Directed Acyclic Graphs) in Airflow; a DAG describes how to run a workflow by defining the pipeline in Python, that is, configuration as code.
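You can sketch the DAG idea without Airflow using Python's standard-library `graphlib` module (Python 3.9+). The workflow below is hypothetical: each task maps to the set of tasks it depends on. The tasks are grouped into waves whose members have all their dependencies satisfied, which is roughly how a scheduler decides what may run in parallel. In Airflow itself, you would declare tasks as operators and express the same dependencies with the `>>` operator.

```python
from graphlib import TopologicalSorter

# Hypothetical inference workflow: task -> set of tasks it depends on.
WORKFLOW = {
    "extract_news": set(),
    "extract_prices": set(),
    "build_features": {"extract_news", "extract_prices"},
    "batch_inference": {"build_features"},
    "load_predictions": {"batch_inference"},
    "publish_report": {"batch_inference"},
}


def ready_batches(dag):
    """Group tasks into waves; every task in a wave depends only on earlier waves.

    prepare() raises graphlib.CycleError if the graph is not acyclic.
    """
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for a stable display order
        waves.append(ready)
        sorter.done(*ready)  # mark the wave finished, unlocking its dependents
    return waves
```

Tasks in the same wave, such as the two extract steps, have no dependency on each other, so a scheduler is free to run them concurrently.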

AWS Machine Learning Blog

AUGUST 12, 2024

Furthermore, the democratization of AI and ML through AWS and AWS Partner solutions is accelerating its adoption across all industries. For example, a health-tech company may be looking to improve patient care by predicting the probability that an elderly patient may become hospitalized by analyzing both clinical and non-clinical data.

ODSC - Open Data Science

APRIL 23, 2025

Confluent: Confluent provides a robust data streaming platform built around Apache Kafka. AI credits from Confluent can be used to implement real-time data pipelines, monitor data flows, and run stream-based ML applications. Modal: Modal offers serverless compute tailored for data-intensive workloads.

Data Science Connect

JANUARY 27, 2023

Data engineering is a crucial field that plays a vital role in the data pipeline of any organization. It is the process of collecting, storing, managing, and analyzing large amounts of data, and data engineers are responsible for designing and implementing the systems and infrastructure that make this possible.

AUGUST 17, 2023

Amazon Redshift uses SQL to analyze structured and semi-structured data across data warehouses, operational databases, and data lakes, using AWS-designed hardware and ML to deliver the best price-performance at any scale. To do this, we provide an AWS CloudFormation template to create a stack that contains the resources.

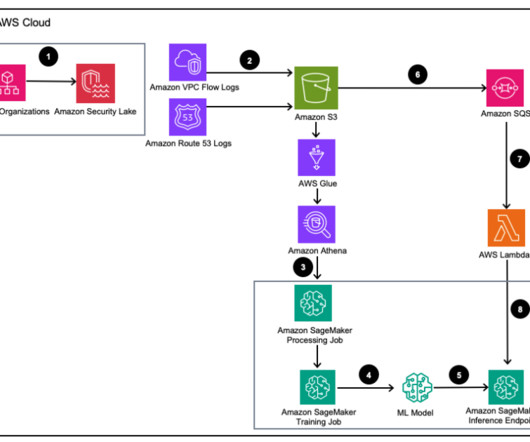

AWS Machine Learning Blog

DECEMBER 20, 2023

Whether logs are coming from Amazon Web Services (AWS), other cloud providers, on-premises, or edge devices, customers need to centralize and standardize security data. After the security log data is stored in Amazon Security Lake, the question becomes how to analyze it. Subscribe an AWS Lambda function to the SQS queue.
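Here is a minimal sketch of a Lambda function subscribed to an SQS queue. Lambda delivers messages in `event["Records"]`, and each record has a `messageId` and a string `body`. The JSON message format and the `process` callable are assumptions that stand in for the actual log analysis. Returning `batchItemFailures` lets SQS retry only the failed messages. This works only when `ReportBatchItemFailures` is enabled on the event source mapping.

```python
import json


def make_sqs_handler(process):
    """Build a Lambda handler for an SQS event source mapping.

    `process(message)` is a hypothetical callable that analyzes one decoded
    message, for example a notification that new security log data is available.
    """

    def handler(event, context):
        failures = []
        for record in event.get("Records", []):
            try:
                process(json.loads(record["body"]))
            except Exception:
                # Report only this message as failed so the rest of the batch
                # is not redelivered.
                failures.append({"itemIdentifier": record["messageId"]})
        return {"batchItemFailures": failures}

    return handler
```

Reporting per-message failures avoids reprocessing a whole batch when a single malformed message fails.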

DrivenData Labs

MAY 21, 2024

This better reflects the common Python practice of having your top-level module be the project name. Some projects manage this folder like the data folder and sync it to a canonical store (e.g., AWS S3) separately from source code. Run with pipx: We all know that Python environment management is tricky.

Mlearning.ai

MARCH 15, 2023

Build a Stocks Price Prediction App powered by Snowflake, AWS, Python and Streamlit (Part 2 of 3): a comprehensive guide to developing machine learning applications from start to finish. Welcome back! Let's continue our data science journey to create the stock price prediction web application.

AWS Machine Learning Blog

AUGUST 21, 2024

By walking through this specific implementation, we aim to showcase how you can adapt batch inference to suit various data processing needs, regardless of the data source or nature. Prerequisites: To use the batch inference feature, make sure you have satisfied the requirements, starting with an active AWS account.

Data Science Dojo

JULY 3, 2024

Data science bootcamps are intensive short-term educational programs designed to equip individuals with the skills needed to enter or advance in the field of data science. They cover a wide range of topics, ranging from Python, R, and statistics to machine learning and data visualization.

NOVEMBER 24, 2023

This use case highlights how large language models (LLMs) are able to become a translator between human languages (English, Spanish, Arabic, and more) and machine interpretable languages (Python, Java, Scala, SQL, and so on) along with sophisticated internal reasoning.

AWS Machine Learning Blog

SEPTEMBER 18, 2023

With Ray and AIR, the same Python code can scale seamlessly from a laptop to a large cluster. The full code can be found on the aws-samples-for-ray GitHub repository. It integrates smoothly with other data processing libraries like Spark, Pandas, NumPy, and more, as well as ML frameworks like TensorFlow and PyTorch.

AWS Machine Learning Blog

SEPTEMBER 28, 2023

This process significantly benefits from the MLOps features of SageMaker, which streamline the data science workflow by harnessing the powerful cloud infrastructure of AWS. About the authors: Nick Biso is a Machine Learning Engineer at AWS Professional Services.

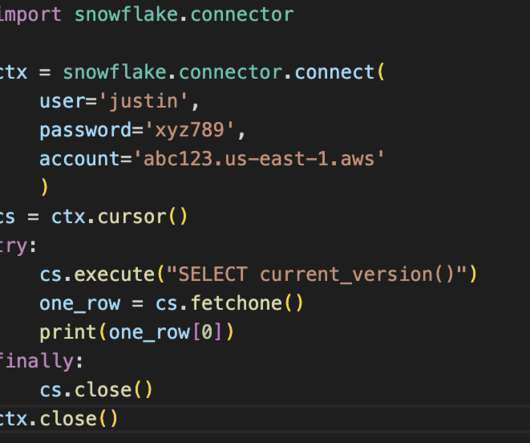

phData

JANUARY 5, 2023

Python is the top programming language used by data engineers in almost every industry. It has proven effective for setting up pipelines, maintaining data flows, and transforming data, thanks to its simple syntax and strength in automation. Why connect Snowflake to Python?

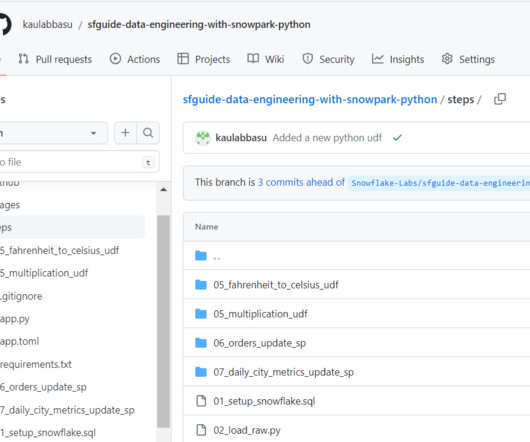

phData

JULY 2, 2024

Snowpark, offered by the Snowflake AI Data Cloud, consists of libraries and runtimes that enable secure deployment and processing of non-SQL code, such as Python, Java, and Scala. In this blog, we’ll cover the steps to get started, including how to set up an existing Snowpark project on your local system using a Python IDE.

ODSC - Open Data Science

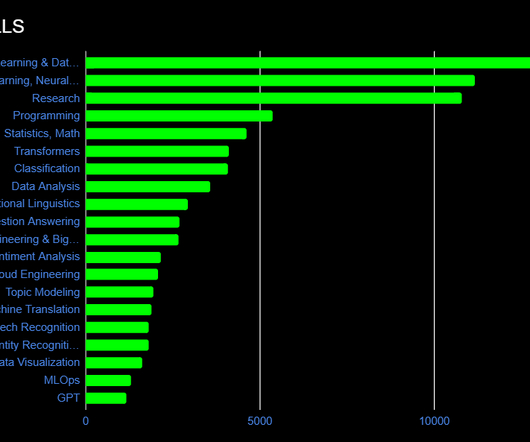

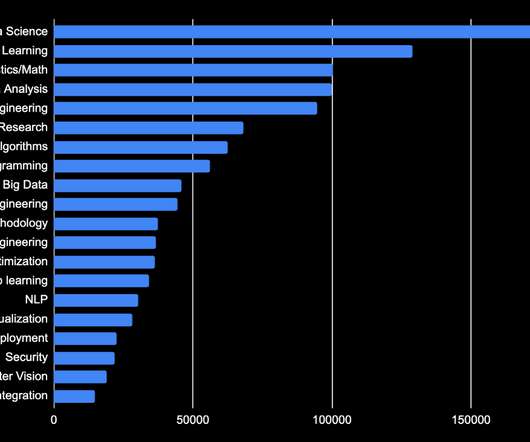

FEBRUARY 17, 2023

Cloud Computing, APIs, and Data Engineering: NLP experts don’t go straight into conducting sentiment analysis on their personal laptops. Data Engineering Platforms: Spark is still the leader for data pipelines, but other platforms are gaining ground. Knowing some SQL is also essential.

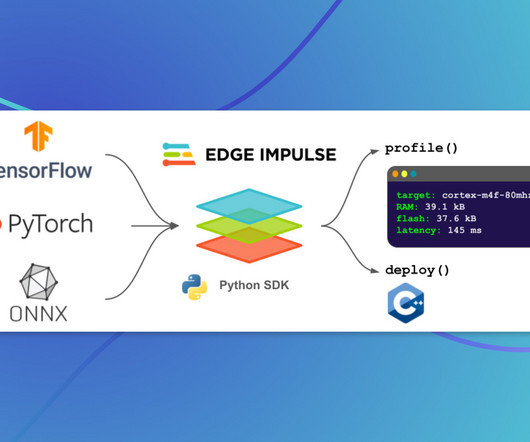

Towards AI

APRIL 4, 2023

Last Updated on April 4, 2023 by Editorial Team Introducing a Python SDK that allows enterprises to effortlessly optimize their ML models for edge devices. Coupled with BYOM, the new Python SDK streamlines workflows even further, letting ML teams leverage Edge Impulse directly from their own development environments.

AWS Machine Learning Blog

SEPTEMBER 11, 2024

In terms of resulting speedups, the approximate order is programming hardware, then programming against PBA APIs, then programming in an unmanaged language such as C++, then a managed language such as Python. Examples of other PBAs now available include AWS Inferentia, AWS Trainium, Google TPU, and Graphcore IPU.

AWS Machine Learning Blog

APRIL 5, 2024

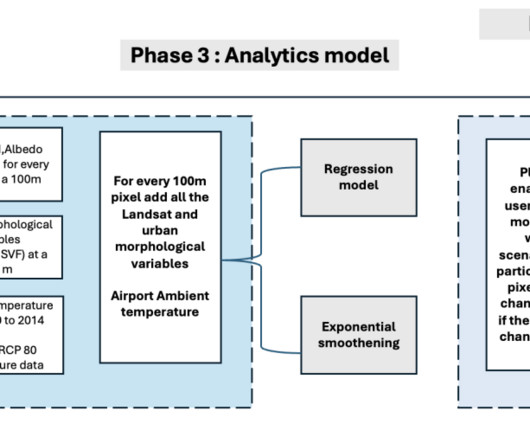

Phase 1: Data pipeline. The Landsat 8 satellite captures detailed imagery of the area of interest every 15 days at 11:30 AM, providing a comprehensive view of the city’s landscape and environment. Data acquisition and preprocessing: To implement the modules, Gramener used the SageMaker geospatial notebook within Amazon SageMaker Studio.

AWS Machine Learning Blog

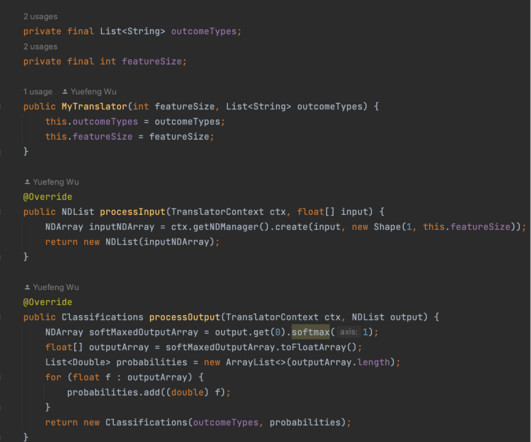

APRIL 19, 2023

Right now, most deep learning frameworks are built for Python, but this neglects the large number of Java developers, including those with existing Java code bases into which they want to integrate the increasingly powerful capabilities of deep learning. The Deep Java Library (DJL) continues to grow in its ability to support different hardware, models, and engines.

ODSC - Open Data Science

FEBRUARY 2, 2023

This doesn’t mean anything too complicated, but it could range from basic Excel work to more advanced reporting to be used for data visualization later on. Computer Science and Computer Engineering: Similar to knowing statistics and math, a data scientist should know the fundamentals of computer science as well.

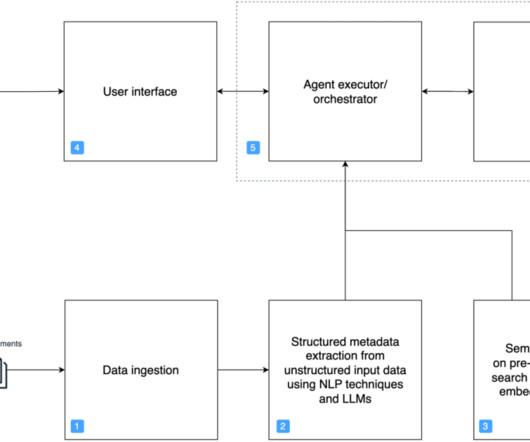

AWS Machine Learning Blog

DECEMBER 6, 2023

For this architecture, we propose an implementation on GitHub, with loosely coupled components where the backend (5), data pipelines (1, 2, 3), and front end (4) can evolve separately. Deploy the solution: To install this solution in your AWS account, start by cloning the repository on GitHub.

O'Reilly Media

SEPTEMBER 15, 2021

Cloud certifications, specifically in AWS and Microsoft Azure, were most strongly associated with salary increases. As we’ll see later, cloud certifications (specifically in AWS and Microsoft Azure) were the most popular and appeared to have the largest effect on salaries. Salaries were lower regardless of education or job title.

Towards AI

MAY 30, 2024

Prime_otter_86438 is working on a Python library that makes it easy for beginners to train and run real-time classification models on any microcontroller. They are looking for an expert to help improve the model and make the Python package easier for end users. If this sounds fun, connect with them in the thread!

The MLOps Blog

MARCH 15, 2023

In this post, you will learn about the 10 best data pipeline tools, their pros, cons, and pricing. A typical data pipeline involves the following steps or processes through which the data passes before being consumed by a downstream process, such as an ML model training process.

Pickl AI

JULY 25, 2023

Data engineers are essential professionals responsible for designing, constructing, and maintaining an organization’s data infrastructure. They create data pipelines, ETL processes, and databases to facilitate smooth data flow and storage.
