How Druva used Amazon Bedrock to address foundation model complexity when building Dru, Druva’s backup AI copilot

AWS Machine Learning Blog

NOVEMBER 1, 2024

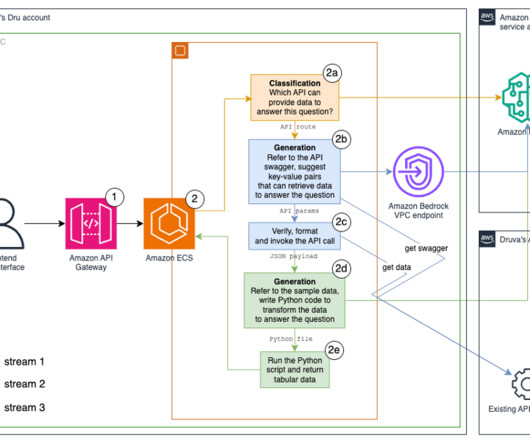

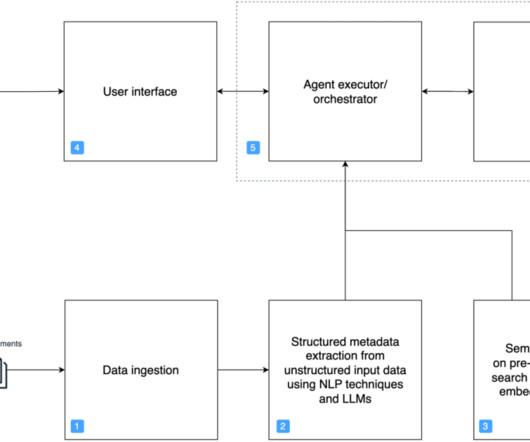

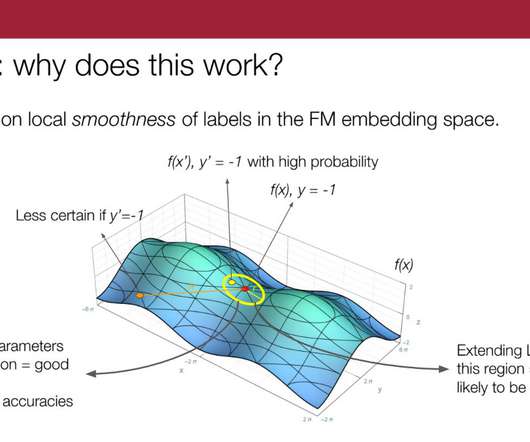

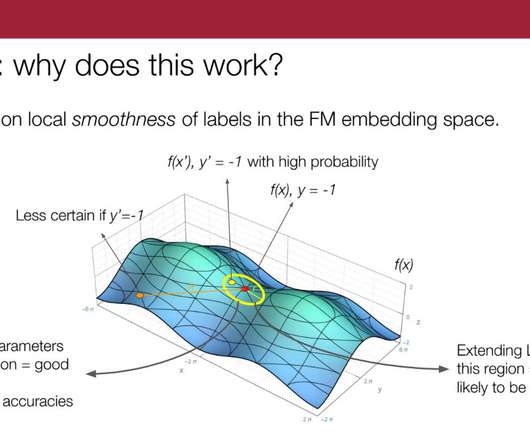

We tried different methods, including k-nearest neighbor (k-NN) search of vector embeddings, BM25 with synonyms, and a hybrid of the two, applied across fields including API routes, descriptions, and hypothetical questions. The foundation model (FM) resides in an AWS account and virtual private cloud (VPC) separate from those of the backend services.
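To make the three retrieval options concrete, here is a minimal, self-contained sketch of lexical BM25 scoring with synonym expansion, exact k-NN scoring by cosine similarity, and a hybrid that min-max normalizes both score lists and blends them with a weight `alpha`. This is not Druva's implementation. The API catalogue entries, the `SYNONYMS` map, the `alpha` blend, and every function name are hypothetical, and the bag-of-words `embed` function is only a stand-in for a real embedding model.

```python
import math
import re
from collections import Counter

# Hypothetical catalogue: each entry has fields like those described above
# (API route, description, hypothetical question), joined into one searchable text.
CATALOG = [
    {"route": "GET /backups/jobs", "description": "List backup jobs",
     "question": "What backups ran last night?"},
    {"route": "POST /restore", "description": "Start a restore job",
     "question": "How do I recover a deleted file?"},
    {"route": "GET /policies", "description": "List backup policies",
     "question": "Which retention policy applies?"},
]
DOCS = [" ".join(entry.values()) for entry in CATALOG]

# Hypothetical synonym map used to expand query terms before BM25 scoring.
SYNONYMS = {"retrieve": ["restore", "recover"], "recover": ["restore"]}


def tokenize(text):
    # Lowercase and keep alphanumerics plus "/" so API routes stay single tokens.
    return re.findall(r"[a-z0-9/]+", text.lower())


def expand(tokens):
    # Append synonyms, then de-duplicate while preserving order.
    out = list(tokens)
    for t in tokens:
        out.extend(SYNONYMS.get(t, []))
    return list(dict.fromkeys(out))


def bm25_scores(query, docs, k1=1.5, b=0.75):
    # Standard Okapi BM25 over the synonym-expanded query terms.
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avgdl = sum(len(d) for d in tokenized) / n
    df = Counter(t for d in tokenized for t in set(d))
    terms = expand(tokenize(query))
    scores = []
    for d in tokenized:
        tf = Counter(d)
        s = 0.0
        for t in terms:
            if t not in tf:
                continue
            idf = math.log(1 + (n - df[t] + 0.5) / (df[t] + 0.5))
            s += idf * tf[t] * (k1 + 1) / (tf[t] + k1 * (1 - b + b * len(d) / avgdl))
        scores.append(s)
    return scores


def embed(text):
    # Stand-in for a real embedding model: a sparse bag-of-words count vector.
    return Counter(tokenize(text))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def knn_scores(query, docs):
    # Exact k-NN: score every document; the caller takes the top k.
    q = embed(query)
    return [cosine(q, embed(d)) for d in docs]


def min_max(xs):
    # Rescale to [0, 1] so lexical and vector scores are comparable.
    lo, hi = min(xs), max(xs)
    return [(x - lo) / (hi - lo) if hi > lo else 0.0 for x in xs]


def hybrid_search(query, docs, k=2, alpha=0.5):
    # Weighted blend: alpha * vector score + (1 - alpha) * BM25 score.
    lexical = min_max(bm25_scores(query, docs))
    semantic = min_max(knn_scores(query, docs))
    combined = [alpha * s + (1 - alpha) * l for s, l in zip(semantic, lexical)]
    ranked = sorted(range(len(docs)), key=lambda i: combined[i], reverse=True)
    return [(docs[i], combined[i]) for i in ranked[:k]]


if __name__ == "__main__":
    for doc, score in hybrid_search("retrieve", DOCS, k=1):
        print(f"{score:.2f}  {doc}")
```

In this toy setup, a query such as "retrieve" shares no words with any entry, so the bag-of-words vector scores are all zero; the synonym-expanded BM25 side still surfaces the restore route, which illustrates why a hybrid can recover matches that either method alone would miss. Min-max normalization plus a weighted sum is only one way to fuse the two rankings; reciprocal rank fusion is a common alternative.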
