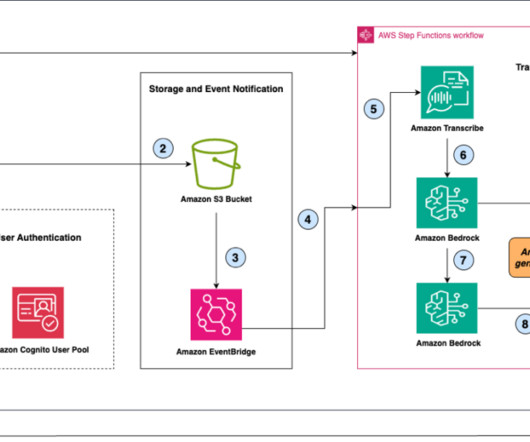

Transcribe, translate, and summarize live streams in your browser with AWS AI and generative AI services

AWS Machine Learning Blog

NOVEMBER 13, 2024

From gaming and entertainment to education and corporate events, live streams have become a powerful medium for real-time engagement and content consumption. Real-time translation into multiple languages lets viewers around the world engage with live content as if it were delivered in their first language.
