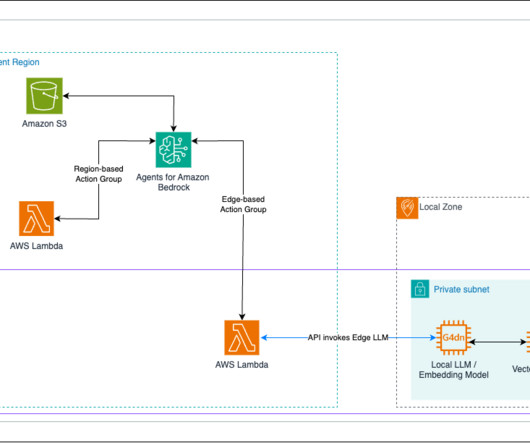

Implement RAG while meeting data residency requirements using AWS hybrid and edge services

JANUARY 14, 2025

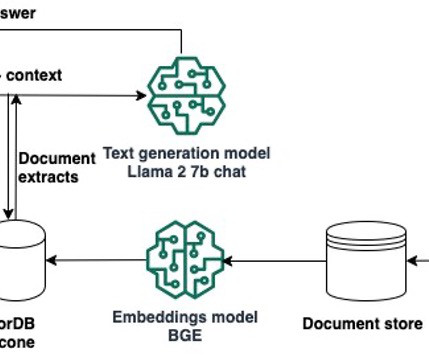

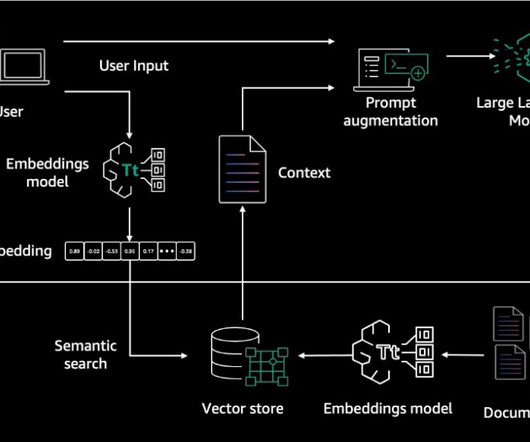

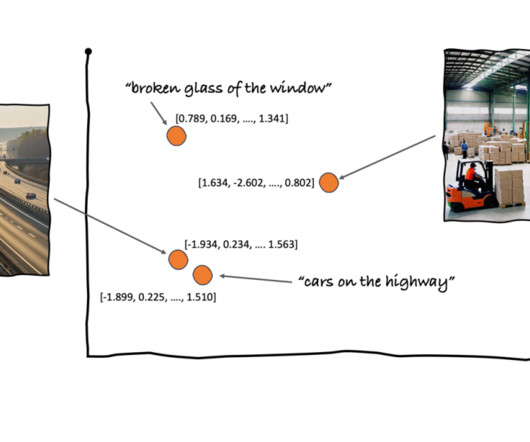

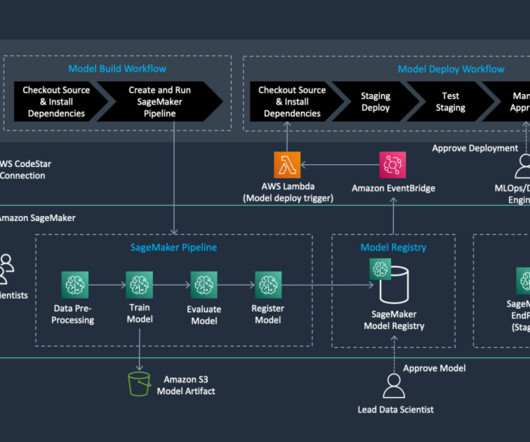

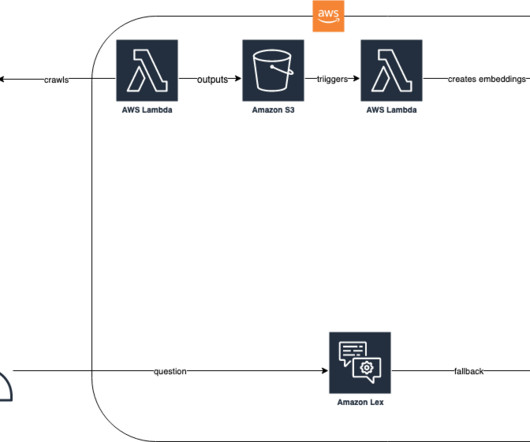

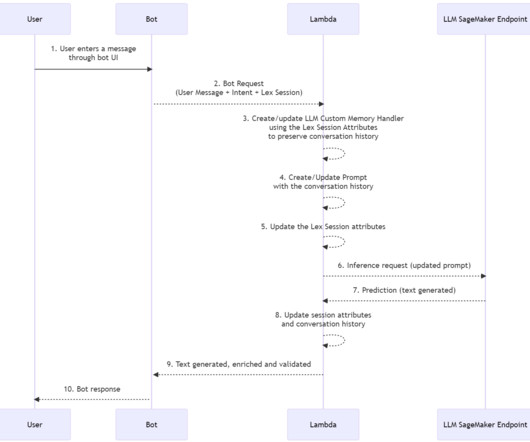

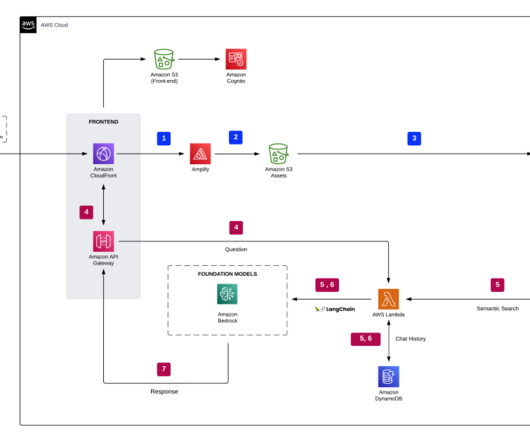

In this post, we show how to extend Amazon Bedrock Agents to hybrid and edge services such as AWS Outposts and AWS Local Zones to build distributed Retrieval Augmented Generation (RAG) applications with on-premises data for improved model outcomes.
