Data lakes vs. data warehouses: Decoding the data storage debate

Data Science Dojo

JANUARY 12, 2023

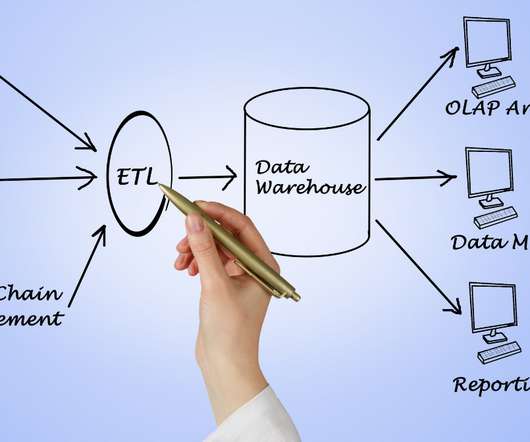

When it comes to data, there are two main types: data lakes and data warehouses. What is a data lake? An enormous amount of raw data is stored in its original format in a data lake until it is required for analytics applications. Which one is right for your business?

Let's personalize your content