Data Integrity for AI: What’s Old is New Again

Precisely

JANUARY 9, 2025

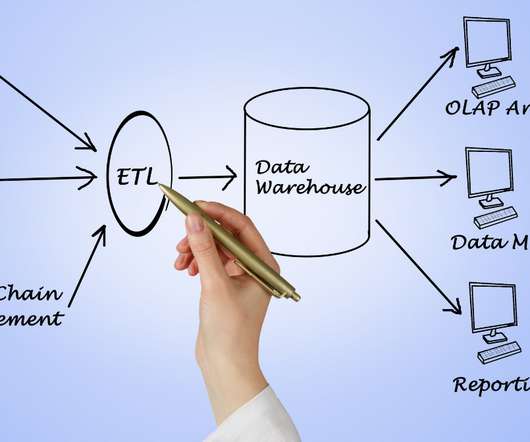

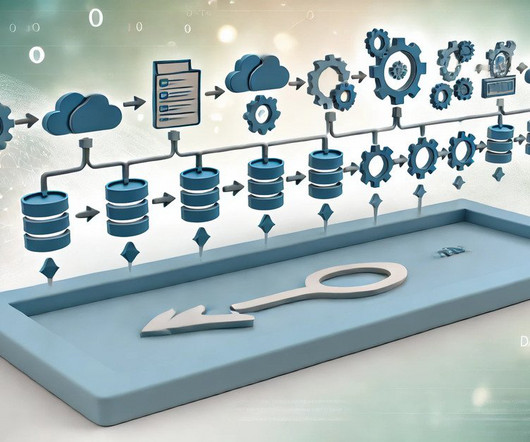

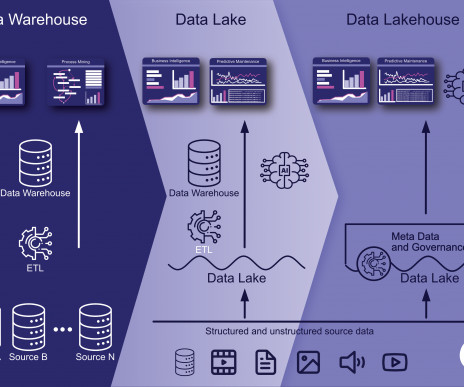

Data marts involved the creation of built-for-purpose analytic repositories meant to directly support more specific business users and reporting needs (e.g., …). But those end users weren't always clear on which data they should use for which reports, as the data definitions were often unclear or conflicting. A data lake!
