Schedule & Run ETLs with Jupysql and GitHub Actions

KDnuggets

MAY 1, 2023

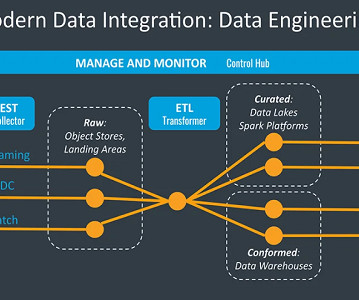

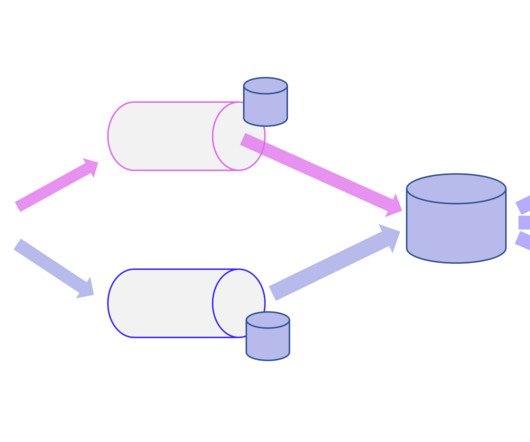

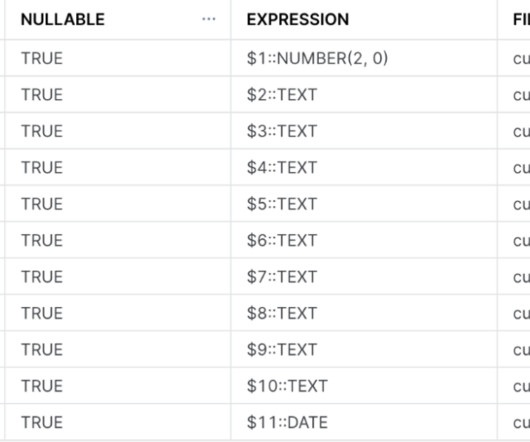

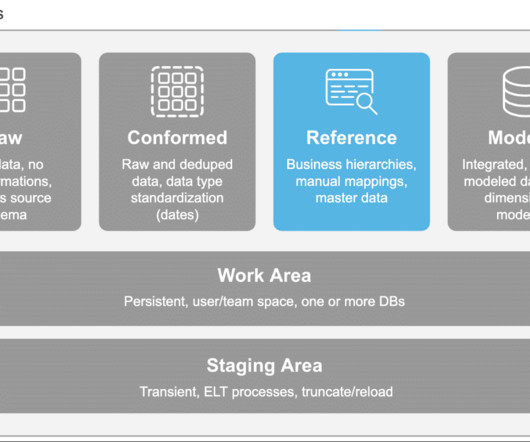

This blog gave a brief introduction to ETLs and JupySQL, then demonstrated how to schedule an example ETL notebook with GitHub Actions. Scheduling the notebook this way automates running ETLs written with JupySQL in Jupyter, so they no longer have to be executed by hand.
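The extract-transform-load pattern that such a scheduled notebook automates can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the article's notebook. It swaps JupySQL's `%sql`/`%%sql` magics for the standard library's `sqlite3` module so it runs as an ordinary script. The inline CSV data, the table names (`raw_temps`, `avg_temps`) and the function names are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw data standing in for the "extract" source (e.g. a downloaded CSV).
RAW_CSV = """city,temp_c
Lima,19.5
Oslo,-3.0
Lima,21.5
Oslo,1.0
"""


def extract(raw: str) -> list[tuple[str, float]]:
    """Extract: parse CSV text into (city, temp_c) rows."""
    reader = csv.DictReader(io.StringIO(raw))
    return [(row["city"], float(row["temp_c"])) for row in reader]


def transform_and_load(conn: sqlite3.Connection, rows: list[tuple[str, float]]) -> None:
    """Load the raw rows, then transform them into a summary table with SQL."""
    conn.execute("DROP TABLE IF EXISTS raw_temps")
    conn.execute("CREATE TABLE raw_temps (city TEXT, temp_c REAL)")
    conn.executemany("INSERT INTO raw_temps VALUES (?, ?)", rows)
    # The SQL transform (what a %%sql cell would hold in a JupySQL notebook):
    # average temperature per city.
    conn.execute("DROP TABLE IF EXISTS avg_temps")
    conn.execute(
        """
        CREATE TABLE avg_temps AS
        SELECT city, AVG(temp_c) AS avg_temp_c
        FROM raw_temps
        GROUP BY city
        """
    )
    conn.commit()


def run_etl(db_path: str = ":memory:") -> list[tuple[str, float]]:
    """Run the whole pipeline and return the summary rows sorted by city."""
    conn = sqlite3.connect(db_path)
    try:
        transform_and_load(conn, extract(RAW_CSV))
        return conn.execute(
            "SELECT city, avg_temp_c FROM avg_temps ORDER BY city"
        ).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    for city, avg in run_etl():
        print(f"{city}: {avg:.2f}")
```

To run something like this on a timer, a GitHub Actions workflow can use the `on: schedule` trigger with a cron expression and a step that executes the script (for example `python etl.py`, a hypothetical filename). The article's approach schedules a notebook rather than a standalone script, so treat this only as an illustration of the ETL logic being automated.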
