Understanding ETL Tools as a Data-Centric Organization

Smart Data Collective

SEPTEMBER 8, 2021

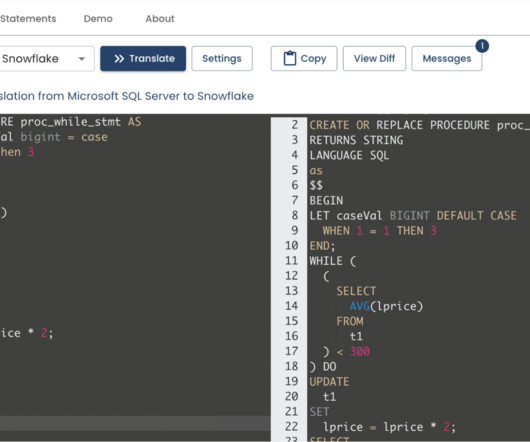

The ETL process is the movement of data from its source to destination storage (typically a data warehouse) for future use in reports and analyses. Before you can understand what an ETL tool is, or compare the types of ETL tools, you first need to understand the ETL process.
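To make that movement concrete, here is a minimal Python sketch of the three ETL stages (extract, transform, load). It is an illustration under stated assumptions, not how any particular ETL tool works: the sample CSV, the `orders` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read from files, APIs, or databases.
SOURCE_CSV = "order_id,amount\n1, 19.99\n2, 5.00\n3,\n"


def extract(text):
    """Extract: read raw rows from the CSV source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: skip rows with no amount and convert strings to numbers."""
    cleaned = []
    for row in rows:
        amount = (row["amount"] or "").strip()
        if not amount:
            continue  # incomplete record, not loaded
        cleaned.append((int(row["order_id"]), float(amount)))
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    conn.commit()


def run_etl(conn):
    """Run the three stages in order against the given connection."""
    load(transform(extract(SOURCE_CSV)), conn)


# In-memory SQLite stands in for the warehouse (an assumption for this sketch).
conn = sqlite3.connect(":memory:")
run_etl(conn)
```

Once loaded, the data can be queried for reporting, for example with `conn.execute("SELECT SUM(amount) FROM orders")`. Keeping each stage as its own function mirrors the way the process is usually described and makes each step easy to test separately.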
