How to Build an ETL Data Pipeline in ML

The MLOps Blog

MAY 17, 2023

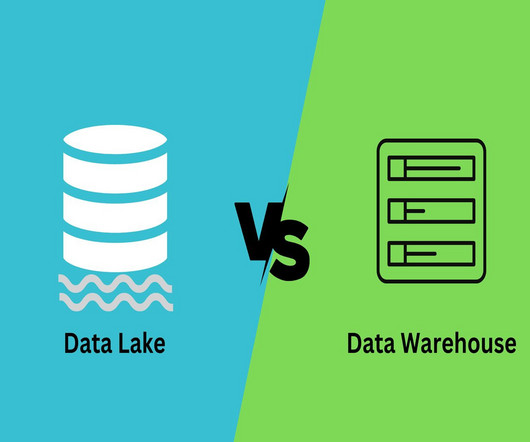

Using ETL pipelines efficiently in ML can make data engineers' lives much easier. This article explains why ETL pipelines matter in machine learning, walks through a hands-on example of building one with a popular tool, and suggests the best ways for data engineers to improve and maintain their pipelines.
