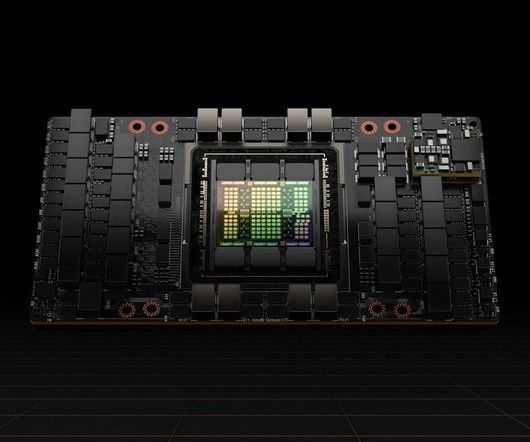

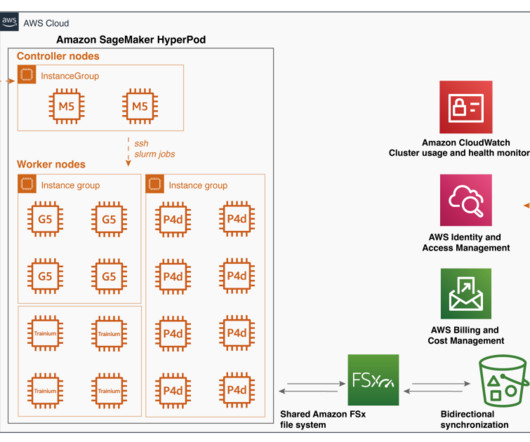

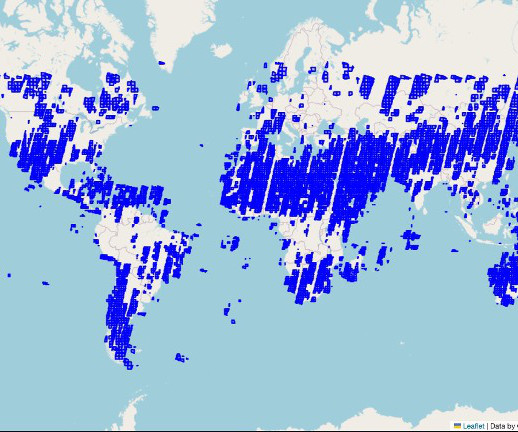

AI Company Plans to Run Clusters of 10,000 Nvidia H100 GPUs in International Waters

NOVEMBER 1, 2023

Del Complex hopes floating its computer clusters in the middle of the ocean will allow it a level of autonomy unlikely to be found on land. Government …