Accelerating Mixtral MoE fine-tuning on Amazon SageMaker with QLoRA

AWS Machine Learning Blog

NOVEMBER 22, 2024

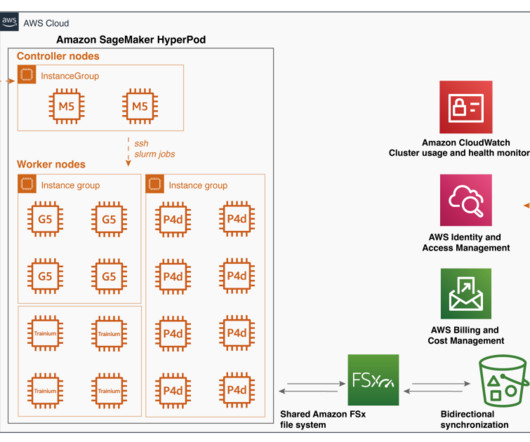

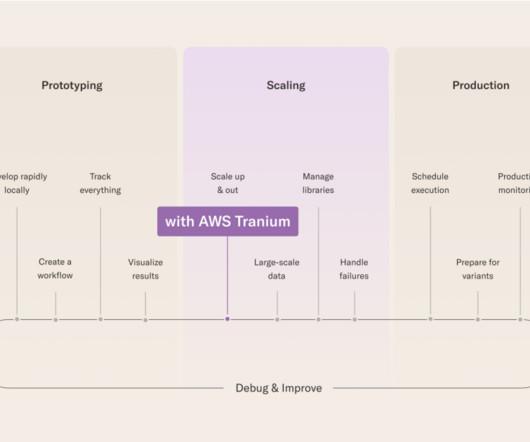

Although QLoRA helps optimize memory during fine-tuning, we will use Amazon SageMaker Training to spin up a resilient training cluster, manage orchestration, and monitor the cluster for failures. To take complete advantage of this multi-GPU cluster, we use the recent support of QLoRA and PyTorch FSDP. 24xlarge compute instance.

Let's personalize your content