Scale LLMs with PyTorch 2.0 FSDP on Amazon EKS – Part 2

AWS Machine Learning Blog

APRIL 1, 2024

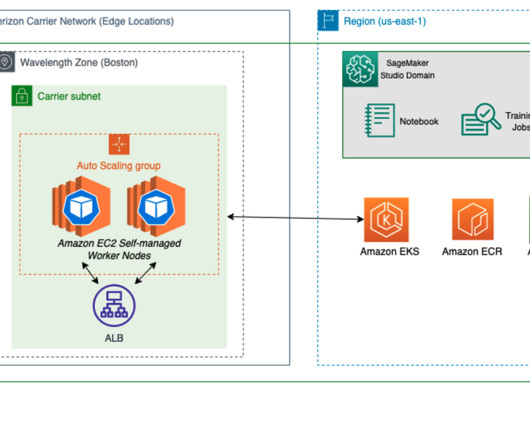

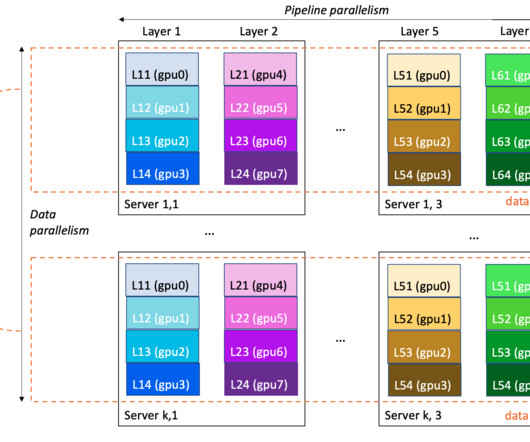

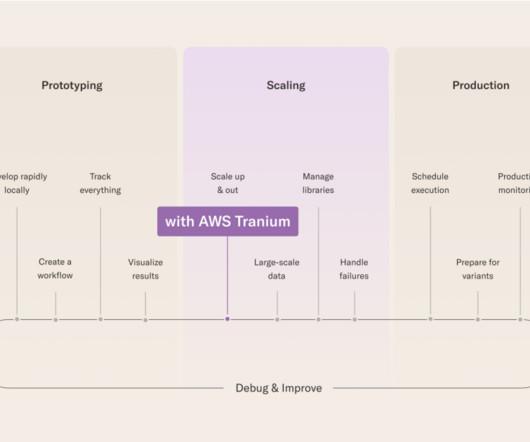

Distributed model training requires a cluster of worker nodes that can scale. Amazon Elastic Kubernetes Service (Amazon EKS) is a popular Kubernetes-conformant service that simplifies running AI/ML workloads, making them more manageable and less time-consuming.
