Healthcare revolution: Vector databases for patient similarity search and precision diagnosis

Data Science Dojo

JANUARY 30, 2024

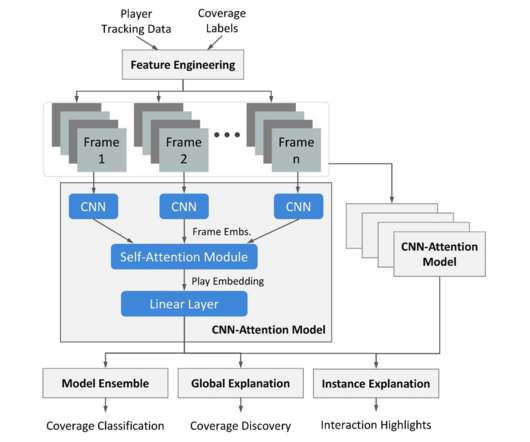

Exploring disease mechanisms: Vector databases facilitate the identification of patient clusters that share similar disease progression patterns. Key components of the process include feature engineering: transforming raw clinical data into meaningful numerical representations suitable for a vector space.
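To make the feature engineering step concrete, here is a minimal sketch of turning clinical records into vectors and looking up the most similar patient. Everything in it is an assumption for illustration: the patient records, the field names (`age`, `systolic_bp`, `hba1c`), and the helper functions are hypothetical. The brute-force cosine search stands in for the indexed nearest-neighbor lookup a real vector database would perform.

```python
import math

# Hypothetical patient records; field names and values are illustrative only.
patients = {
    "p1": {"age": 64, "systolic_bp": 150, "hba1c": 8.1},
    "p2": {"age": 61, "systolic_bp": 148, "hba1c": 7.9},
    "p3": {"age": 29, "systolic_bp": 112, "hba1c": 5.2},
    "p4": {"age": 35, "systolic_bp": 118, "hba1c": 5.4},
}
FEATURES = ["age", "systolic_bp", "hba1c"]


def standardize(records, features):
    """Feature engineering: z-score each feature so no single unit dominates."""
    stats = {}
    for f in features:
        values = [r[f] for r in records.values()]
        mean = sum(values) / len(values)
        std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
        stats[f] = (mean, std or 1.0)  # guard against constant features
    return {
        pid: [(r[f] - stats[f][0]) / stats[f][1] for f in features]
        for pid, r in records.items()
    }


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_similar(query_id, vectors, k=1):
    """Brute-force search: rank every other patient by similarity to the query."""
    query = vectors[query_id]
    scored = [
        (cosine_similarity(query, vec), pid)
        for pid, vec in vectors.items()
        if pid != query_id
    ]
    scored.sort(reverse=True)
    return [pid for _, pid in scored[:k]]


vectors = standardize(patients, FEATURES)
nearest = most_similar("p1", vectors, k=1)
print(nearest)
```

Standardizing first matters here because the raw features sit on very different scales; without it, blood pressure would dominate the comparison. In practice, the resulting vectors would be stored in a vector database and queried through its index rather than compared one by one.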
