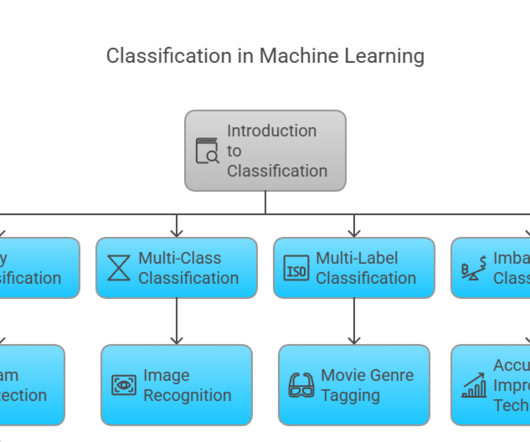

Classifiers in Machine Learning

Pickl AI

APRIL 13, 2025

Examples of binary classification include:

- Spam vs. Not Spam
- Disease Positive vs. Negative
- Fraudulent Transaction vs. Legitimate Transaction

Popular algorithms for binary classification include Logistic Regression, Support Vector Machines (SVM), and Decision Trees. In multi-class classification, by contrast, each instance is assigned to one of several predefined categories.
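To make the binary case concrete, here is a minimal sketch of Logistic Regression trained with plain gradient descent on a spam-style toy problem. Everything in it is an assumption for illustration: the two features (link count and mentions of "free"), the six data points, and the helper names `train_logistic_regression` and `predict` are hypothetical, and the learning rate and epoch count are arbitrary choices. In practice you would more likely reach for an established library than hand-roll the training loop.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function mapping a score to (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_logistic_regression(X, y, lr=0.1, epochs=2000):
    """Fit weights and bias by full-batch gradient descent on log loss."""
    n_samples = len(X)
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # derivative of log loss with respect to the score
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        # Step against the average gradient.
        w = [wj - lr * g / n_samples for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n_samples
    return w, b


def predict(w, b, x, threshold=0.5):
    """Return 1 (positive class) if the predicted probability meets the threshold."""
    p = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
    return 1 if p >= threshold else 0


# Hypothetical toy data: [number of links, number of "free" mentions]
X = [[0, 0], [1, 0], [0, 1], [5, 3], [4, 4], [6, 2]]
y = [0, 0, 0, 1, 1, 1]  # 0 = Not Spam, 1 = Spam

w, b = train_logistic_regression(X, y)
predictions = [predict(w, b, x) for x in X]
print(predictions)
```

The model outputs a probability, and the 0.5 threshold turns it into one of the two labels. Moving that threshold trades false positives against false negatives, which matters in cases like disease screening or fraud detection where the two kinds of error have different costs.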
