Can CatBoost with Cross-Validation Handle Student Engagement Data with Ease?

Towards AI

NOVEMBER 6, 2024

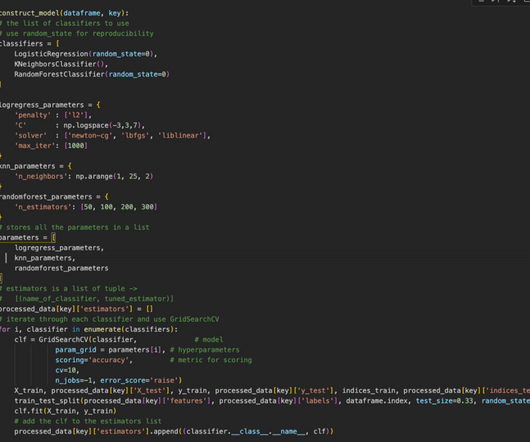

This story covers real-world applications of CatBoost in predicting student engagement. By the end, you’ll have seen how CatBoost performs both with and without cross-validation, and how it can help educational platforms optimize resources and deliver personalized experiences. Topics include the key advantages of CatBoost and how CatBoost works.
