Text Classification in NLP using Cross Validation and BERT

Mlearning.ai

FEBRUARY 15, 2023

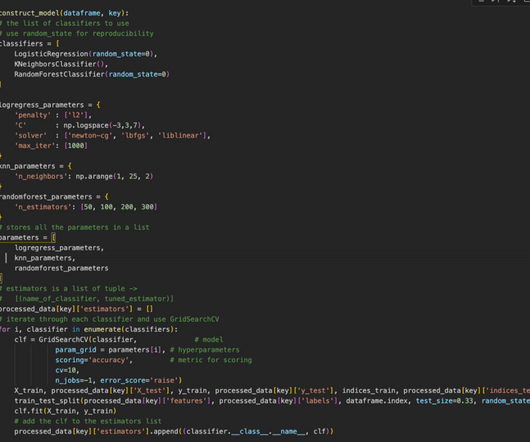

Some important points considered during these selections were:

Random Forest: A random forest is an ensemble classifier that makes predictions using many decision trees. Its overall feature importance is the average of the feature importances of all of its decision trees (Ganaie, M.).
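To make the averaging concrete, here is a minimal, dependency-free Python sketch (not the article's code). It grows small Gini decision trees on bootstrap samples and scores each feature by its weighted impurity decrease within each tree. It then normalizes those scores per tree and averages them across the forest, which roughly mirrors how scikit-learn computes `RandomForestClassifier.feature_importances_`. The helper names, `max_depth=3`, `max_features=1`, and the toy dataset are illustrative assumptions.

```python
import random
from collections import Counter

# Sketch only: hypothetical helpers, not the article's code or a library API.


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y, features):
    """Return (feature, threshold, weighted child impurity) of the best split, or None."""
    best = None
    for f in features:
        # Drop the largest value so the right child is never empty.
        for t in sorted({row[f] for row in X})[:-1]:
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            child = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or child < best[2]:
                best = (f, t, child)
    return best


def tree_importances(X, y, rng, max_features, max_depth):
    """Grow one tree and return its normalized impurity decrease per feature."""
    n_features, n_total = len(X[0]), len(y)
    imp = [0.0] * n_features

    def grow(Xn, yn, depth):
        if depth == max_depth or gini(yn) == 0.0:
            return
        # Random feature subset at each node, as in a random forest.
        split = best_split(Xn, yn, rng.sample(range(n_features), max_features))
        if split is None:
            return
        f, t, child = split
        # Weighted decrease: (node size / sample size) * (parent - children).
        imp[f] += len(yn) / n_total * (gini(yn) - child)
        left = [(r, lab) for r, lab in zip(Xn, yn) if r[f] <= t]
        right = [(r, lab) for r, lab in zip(Xn, yn) if r[f] > t]
        for part in (left, right):
            grow([r for r, _ in part], [lab for _, lab in part], depth + 1)

    grow(X, y, 0)
    total = sum(imp)
    return [v / total for v in imp] if total > 0 else None  # None: tree never split


def forest_importances(X, y, n_trees=50, max_features=1, max_depth=3, seed=0):
    """Forest importance = mean of the per-tree normalized importances."""
    rng = random.Random(seed)
    per_tree = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]  # bootstrap sample
        imp = tree_importances([X[i] for i in idx], [y[i] for i in idx],
                               rng, max_features, max_depth)
        if imp is not None:
            per_tree.append(imp)
    if not per_tree:
        return [0.0] * len(X[0])
    return [sum(col) / len(per_tree) for col in zip(*per_tree)]


# Toy data (made up): feature 0 decides the label, feature 1 is pure noise.
data_rng = random.Random(42)
X = [[i % 10, data_rng.random()] for i in range(100)]
y = [int(row[0] >= 5) for row in X]
importances = forest_importances(X, y)
print(importances)  # feature 0 should receive most of the importance
```

Because each node only considers a randomly chosen feature, some individual trees credit the noise feature; averaging over the whole forest smooths out those per-tree differences, and the averaged scores still sum to 1.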
