Artificial Intelligence Using Python: A Comprehensive Guide

Pickl AI

JULY 12, 2024

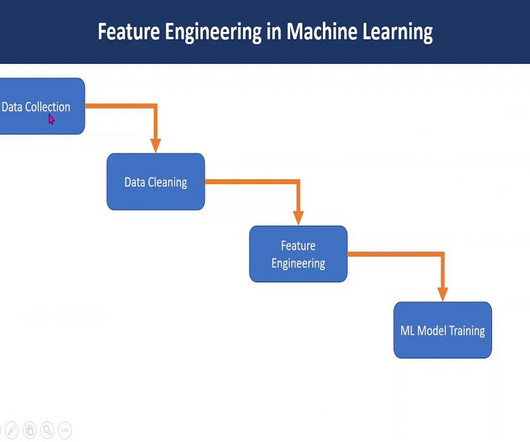

Exploratory Data Analysis (EDA) is a crucial preliminary step in understanding the characteristics of a dataset. It guides subsequent preprocessing steps and informs the selection of appropriate AI algorithms based on insights from the data. Popular models to choose from include decision trees, support vector machines (SVMs), and neural networks.
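As a rough illustration of what a first EDA pass can look like, here is a minimal sketch that summarizes a single numeric column using only Python's standard library. The helper name `summarize_column` and the toy `ages` data are assumptions made up for this example, not something from the article; in practice a library such as pandas is commonly used for this kind of summary.

```python
import statistics


def summarize_column(values):
    """Return basic descriptive statistics for one numeric column.

    None entries are treated as missing values and are excluded
    from the statistics. Assumes at least one non-missing value.
    """
    present = [v for v in values if v is not None]
    return {
        "count": len(present),
        "missing": len(values) - len(present),
        "mean": statistics.mean(present),
        "median": statistics.median(present),
        # Sample standard deviation needs at least two data points.
        "stdev": statistics.stdev(present) if len(present) > 1 else 0.0,
        "min": min(present),
        "max": max(present),
    }


# Hypothetical toy data: one missing entry and one unusually large value.
ages = [23, 25, 31, None, 29, 27, 95]

summary = summarize_column(ages)
for name, value in summary.items():
    print(f"{name}: {value}")
```

In this toy data the mean is noticeably higher than the median, which hints at an outlier, and the missing count shows that one entry needs handling. Observations like these are the kind of insight that can shape preprocessing choices (imputation, outlier treatment, scaling) before a model is selected.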
