Learn Data Analysis with Julia

KDnuggets

JULY 24, 2024

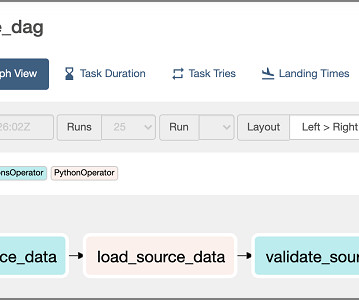

Set up the environment, load the data, perform data analysis and visualization, and create the data pipeline, all using the Julia programming language.

Dataconomy

JUNE 3, 2025

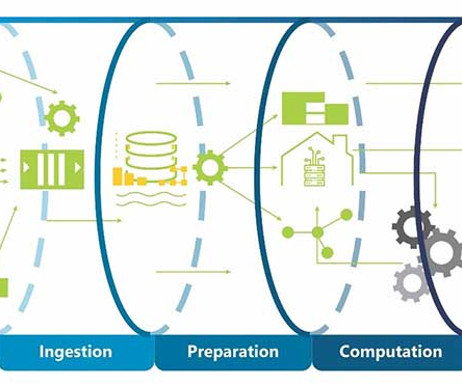

Data pipelines are essential in our increasingly data-driven world, enabling organizations to automate the flow of information from diverse sources to analytical platforms. What are data pipelines? Purpose of a data pipeline Data pipelines serve various essential functions within an organization.

Analytics Vidhya

FEBRUARY 28, 2024

IT industries rely heavily on real-time insights derived from streaming data sources. Handling and processing streaming data is the hardest part of data analysis.

Analytics Vidhya

JUNE 14, 2024

While many ETL tools exist, dbt (data build tool) is emerging as a game-changer. This article dives into the core functionalities of dbt, exploring its unique strengths and how […] The post Transforming Your Data Pipeline with dbt(data build tool) appeared first on Analytics Vidhya.

Data Science Dojo

NOVEMBER 25, 2024

Live Data Analysis: Applications that can analyze and act on continuously flowing data, such as financial market updates, weather reports, or social media feeds, in real time. Latency: While streaming promises real-time processing, it can introduce latency, particularly with large or complex data streams.

Smart Data Collective

OCTOBER 17, 2022

Data pipelines automatically fetch information from various disparate sources for further consolidation and transformation into high-performing data storage. There are a number of challenges in data storage, which data pipelines can help address. Choosing the right data pipeline solution.

Data Science Dojo

OCTOBER 2, 2023

This means that you can use natural language prompts to perform advanced data analysis tasks, generate visualizations, and train machine learning models without the need for complex coding knowledge. With Code Interpreter, you can perform tasks such as data analysis, visualization, coding, math, and more.

Dataconomy

MAY 26, 2017

Amazon Kinesis is a platform to build pipelines for streaming data at the scale of terabytes per hour. The post Amazon Kinesis vs. Apache Kafka For Big Data Analysis appeared first on Dataconomy. Parts of the Kinesis platform are.

Analytics Vidhya

APRIL 3, 2023

Introduction Companies can access a large pool of data in the modern business environment, and using this data in real-time may produce insightful results that can spur corporate success. Real-time dashboards such as GCP provide strong data visualization and actionable information for decision-makers.

Data Science Dojo

SEPTEMBER 11, 2024

Let’s explore each of these components and its application in the sales domain: Synapse Data Engineering: Synapse Data Engineering provides a powerful Spark platform designed for large-scale data transformations through Lakehouse. Here, we changed the data types of columns and dealt with missing values.

Data Science Dojo

JULY 5, 2023

The development of a Machine Learning Model can be divided into three main stages: Building your ML data pipeline: This stage involves gathering data, cleaning it, and preparing it for modeling. Cleaning data: Once the data has been gathered, it needs to be cleaned.

Dataconomy

MAY 16, 2023

It involves data collection, cleaning, analysis, and interpretation to uncover patterns, trends, and correlations that can drive decision-making. The rise of machine learning applications in healthcare Data scientists, on the other hand, concentrate on data analysis and interpretation to extract meaningful insights.

IBM Data Science in Practice

DECEMBER 7, 2022

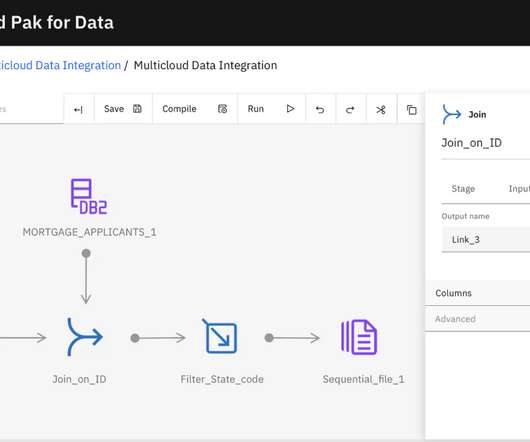

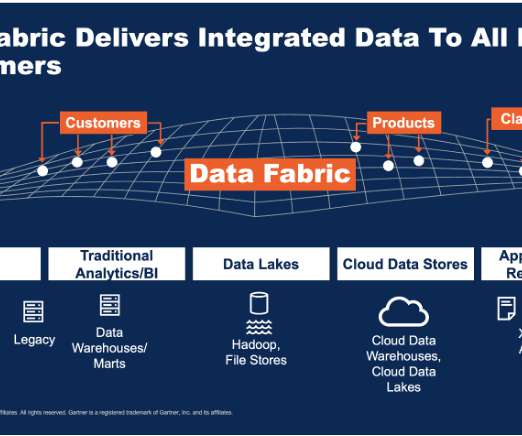

One of the key elements that builds a data fabric architecture is to weave integrated data from many different sources, transform and enrich data, and deliver it to downstream data consumers. This leaves more time for data analysis. Let’s use address data as an example.

IBM Data Science in Practice

NOVEMBER 28, 2022

Implementing a data fabric architecture is the answer. What is a data fabric? Data fabric is defined by IBM as “an architecture that facilitates the end-to-end integration of various data pipelines and cloud environments through the use of intelligent and automated systems.” This leaves more time for data analysis.

Dataconomy

MARCH 19, 2025

This helps facilitate data-driven decision-making for businesses, enabling them to operate more efficiently and identify new opportunities. Definition and significance of data science The significance of data science cannot be overstated. Data visualization developer: Creates interactive dashboards for data analysis.

Dataconomy

MARCH 20, 2025

These APIs simplify user interactions and expedite the development of data pipelines. For instance, STATS LLC employs it for sports data analysis, while agricultural innovations use TensorFlow to optimize cucumber sorting based on texture.

Pickl AI

MARCH 19, 2025

Summary: Data engineering tools streamline data collection, storage, and processing. Learning these tools is crucial for building scalable data pipelines. offers Data Science courses covering these tools with a job guarantee for career growth. Below are 20 essential tools every data engineer should know.

ODSC - Open Data Science

FEBRUARY 24, 2023

There are many well-known libraries and platforms for data analysis such as Pandas and Tableau, in addition to analytical databases like ClickHouse, MariaDB, Apache Druid, Apache Pinot, Google BigQuery, Amazon RedShift, etc. These tools will help make your initial data exploration process easy. You can watch it on demand here.

Pickl AI

DECEMBER 26, 2024

Key Features Tailored for Data Science: These platforms offer specialised features to enhance productivity. Managed services like AWS Lambda and Azure Data Factory streamline data pipeline creation, while pre-built ML models in GCP’s AI Hub reduce development time. Below are key strategies for achieving this.

phData

APRIL 7, 2025

Once you gain access to a KNIME Business Hub instance within your company, you can perform various tasks such as: Collaborating Better with Colleagues: You and your team can share workflows and data sets on KNIME Business Hub, allowing for seamless collaboration when working on data analysis.

Data Science Dojo

AUGUST 11, 2023

Pandas is a library for data analysis. It provides a high-level interface for working with data frames. Matplotlib is a library for plotting data. It provides a wide range of visualization tools.

Data Science Dojo

FEBRUARY 20, 2023

Spark is a general-purpose distributed data processing engine that can handle large volumes of data for applications like data analysis, fraud detection, and machine learning. It provides a variety of tools for data engineering, including model training and deployment.

ODSC - Open Data Science

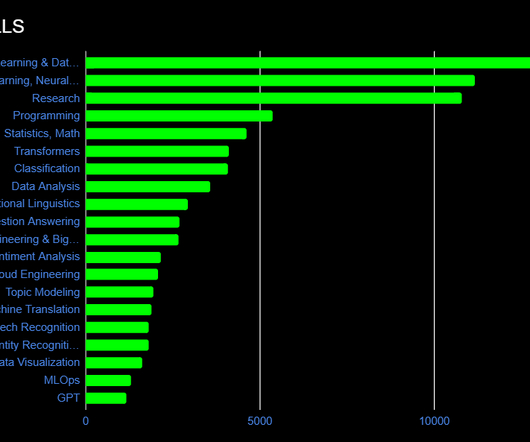

FEBRUARY 17, 2023

Knowing how spaCy works means little if you don’t know how to apply core NLP skills like transformers, classification, linguistics, question answering, sentiment analysis, topic modeling, machine translation, speech recognition, named entity recognition, and others.

Smart Data Collective

SEPTEMBER 21, 2021

As the volume of data grows, the complexity of managing it keeps increasing. It has been found that data professionals end up spending 75% of their time on tasks other than data analysis. Advantages of data fabrication for data management. On-premise and cloud-native environment.

Women in Big Data

OCTOBER 9, 2024

These procedures are central to effective data management and crucial for deploying machine learning models and making data-driven decisions. The success of any data initiative hinges on the robustness and flexibility of its big data pipeline. What is a Data Pipeline?

AWS Machine Learning Blog

DECEMBER 4, 2024

Through simple conversations, business teams can use the chat agent to extract valuable insights from both structured and unstructured data sources without writing code or managing complex data pipelines. The following diagram illustrates the conceptual architecture of an AI assistant with Amazon Bedrock IDE.

Analytics Vidhya

FEBRUARY 17, 2023

Introduction Are you curious about the latest advancements in the data tech industry? Perhaps you’re hoping to advance your career or transition into this field. In that case, we invite you to check out DataHour, a series of webinars led by experts in the field.

AWS Machine Learning Blog

JANUARY 15, 2025

HCLTech’s AutoWise Companion solution addresses these pain points, benefiting both customers and manufacturers by simplifying the decision-making process for customers and enhancing data analysis and customer sentiment alignment for manufacturers.

Pickl AI

APRIL 21, 2025

Exploring the Ocean If Big Data is the ocean, Data Science is the multifaceted discipline of extracting knowledge and insights from data, whether it’s big or small. It’s an interdisciplinary field that blends statistics, computer science, and domain expertise to understand phenomena through data analysis.

Smart Data Collective

SEPTEMBER 26, 2021

The raw data can be fed into a database or data warehouse. An analyst can examine the data using business intelligence tools to derive useful information. To arrange your data and keep it raw, you need to: Make sure the data pipeline is simple so you can easily move data from point A to point B.

Pickl AI

JULY 25, 2023

Data engineers are essential professionals responsible for designing, constructing, and maintaining an organization’s data infrastructure. They create data pipelines, ETL processes, and databases to facilitate smooth data flow and storage. Read more to know.

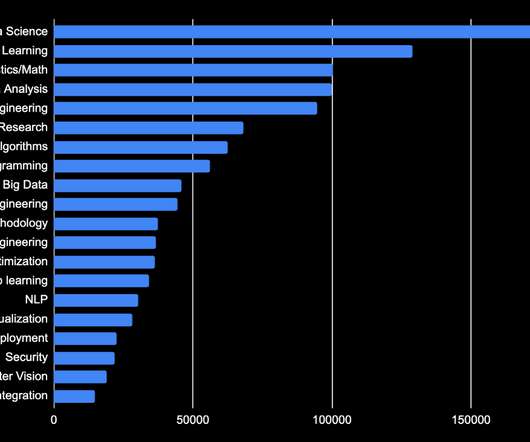

ODSC - Open Data Science

FEBRUARY 2, 2023

Being able to discover connections between variables and to make quick insights will allow any practitioner to make the most out of the data. Analytics and Data Analysis Coming in as the 4th most sought-after skill is data analytics, as many data scientists will be expected to do some analysis in their careers.

Pickl AI

AUGUST 1, 2023

The primary goal of Data Engineering is to transform raw data into a structured and usable format that can be easily accessed, analyzed, and interpreted by Data Scientists, analysts, and other stakeholders. Future of Data Engineering The Data Engineering market will expand from $18.2

Pickl AI

NOVEMBER 4, 2024

Effective data governance enhances quality and security throughout the data lifecycle. What is Data Engineering? Data Engineering is designing, constructing, and managing systems that enable data collection, storage, and analysis. Data pipelines are significant because they can streamline data processing.

AWS Machine Learning Blog

MARCH 1, 2023

To solve this problem, we had to design a strong data pipeline to create the ML features from the raw data and MLOps. Multiple data sources ODIN is an MMORPG where the game players interact with each other, and there are various events such as level-up, item purchase, and gold (game money) hunting.

Pickl AI

AUGUST 16, 2024

Researchers across disciplines will find valuable insights to enhance their Data Analysis skills and produce credible, impactful findings. Introduction Statistical tools are essential for conducting data-driven research across various fields, from social sciences to healthcare.

phData

JANUARY 5, 2023

This can provide organizations with access to new features and capabilities, such as real-time analytics and machine learning, and can help them to improve the accuracy and speed of their data analysis. For example, suppose an organization moves from an on-premises database to a cloud-based database like Snowflake.

phData

FEBRUARY 21, 2025

A data warehouse enables advanced analytics, reporting, and business intelligence. The data warehouse emerged as a means of resolving inefficiencies related to data management, data analysis, and an inability to access and analyze large volumes of data quickly.

Hacker News

JUNE 29, 2023

ABOUT FRESHPAINT [link] Customer data is the fuel that drives all modern businesses. From product analytics, to marketing, to support, to advertising, advanced data analysis in the warehouse, and even sales – customer data is the raw material for each function at a modern business.

Data Science Dojo

JULY 3, 2024

Here’s a list of key skills that are typically covered in a good data science bootcamp: Programming Languages: Python: Widely used for its simplicity and extensive libraries for data analysis and machine learning. R: Often used for statistical analysis and data visualization.

Pickl AI

MAY 15, 2024

As a Data Analyst, you’ve honed your skills in data wrangling, analysis, and communication. But the allure of tackling large-scale projects, building robust models for complex problems, and orchestrating data pipelines might be pushing you to transition into Data Science architecture.

The MLOps Blog

FEBRUARY 5, 2023

We will also get familiar with tools that can help record this data and further analyse it. In the later part of this article, we will discuss its importance and how we can use machine learning for streaming data analysis with the help of a hands-on example. What is streaming data? Happy Learning!

Dataconomy

FEBRUARY 23, 2023

Data engineers play a crucial role in managing and processing big data. Ensuring data quality and integrity: Data quality and integrity are essential for accurate data analysis. Data engineers are responsible for ensuring that the data collected is accurate, consistent, and reliable.

IBM Journey to AI blog

SEPTEMBER 19, 2023

Overview: Data science vs data analytics Think of data science as the overarching umbrella that covers a wide range of tasks performed to find patterns in large datasets, structure data for use, train machine learning models and develop artificial intelligence (AI) applications.
