Data Lakes and SQL: A Match Made in Data Heaven

KDnuggets

JANUARY 16, 2023

In this article, we will discuss the benefits of using SQL with a data lake and how it can help organizations unlock the full potential of their data.

Data Science Dojo

JULY 6, 2023

Data engineering tools are software applications or frameworks specifically designed to facilitate the process of managing, processing, and transforming large volumes of data. Essential data engineering tools for 2023: Top 10 data engineering tools to watch out for in 2023.

Data Science Blog

MAY 20, 2024

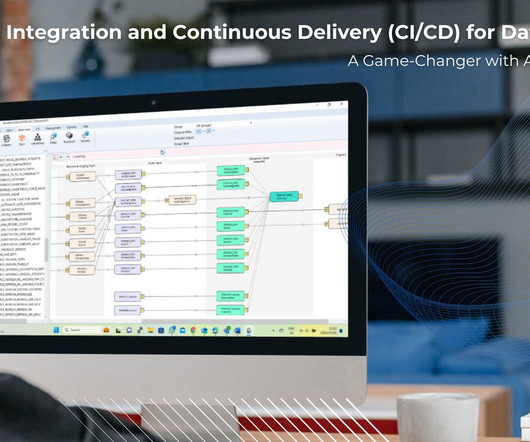

Continuous Integration and Continuous Delivery (CI/CD) for Data Pipelines: It is a Game-Changer with AnalyticsCreator! The need for efficient and reliable data pipelines is paramount in data science and data engineering. It offers full BI-Stack Automation, from source to data warehouse through to frontend.

AWS Machine Learning Blog

AUGUST 8, 2024

Managing and retrieving the right information can be complex, especially for data analysts working with large data lakes and complex SQL queries. This post highlights how Twilio enabled natural language-driven data exploration of business intelligence (BI) data with RAG and Amazon Bedrock.

IBM Data Science in Practice

MAY 19, 2025

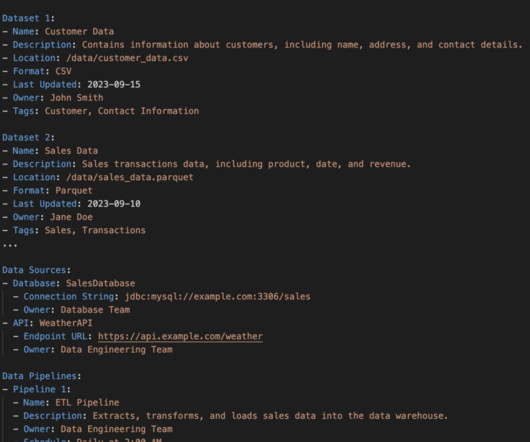

The Data Dilemma: From Chaos to Clarity. In the world of data management, we've all been there: a simple request spirals into a maze of things like a CSV labeled final_v2_final_final.csv, a Parquet file in a forgotten S3 folder, a table with broken lineage, and an abandoned SQL notebook from years ago. What starts as a data lake often becomes a data swamp!

Data Science Dojo

SEPTEMBER 11, 2024

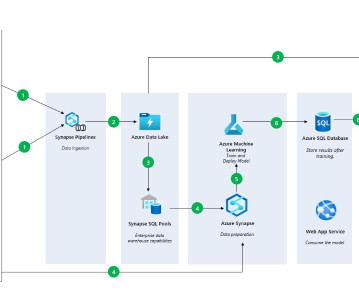

With this full-fledged solution, you don’t have to spend all your time and effort combining different services or duplicating data. Overview of OneLake: Fabric features a lake-centric architecture, with a central repository known as OneLake. On the home page, select Synapse Data Engineering.

AWS Machine Learning Blog

JUNE 20, 2024

Our goal was to improve the user experience of an existing application used to explore the counters and insights data. The data is stored in a data lake and retrieved by SQL using Amazon Athena. The following figure shows a search query that was translated to SQL and run. The challenge is to assure quality.

DECEMBER 11, 2024

Organizations are building data-driven applications to guide business decisions, improve agility, and drive innovation. Many of these applications are complex to build because they require collaboration across teams and the integration of data, tools, and services. The following screenshot shows an example of the unified notebook page.

Dataconomy

JUNE 1, 2023

Unified data storage: Fabric’s centralized data lake, Microsoft OneLake, eliminates data silos and provides a unified storage system, simplifying data access and retrieval. OneLake is designed to store a single copy of data in a unified location, leveraging the open-source Apache Parquet format.

AWS Machine Learning Blog

APRIL 3, 2025

Amazon AppFlow was used to facilitate the smooth and secure transfer of data from various sources into ODAP. Additionally, Amazon Simple Storage Service (Amazon S3) served as the central data lake, providing a scalable and cost-effective storage solution for the diverse data types collected from different systems.

Smart Data Collective

FEBRUARY 23, 2022

Traditional relational databases provide certain benefits, but they are not well suited to handling large and varied data. That is when data lake products started gaining popularity, and since then, more companies have introduced lake solutions as part of their data infrastructure. Athena is serverless and managed by AWS.

Pickl AI

APRIL 6, 2023

Accordingly, Azure Data Engineer is one of the most in-demand roles that you might be interested in. The following blog will help you learn about the Azure Data Engineer job description, salary, and certification course. How to Become an Azure Data Engineer?

phData

SEPTEMBER 19, 2023

With the amount of data companies are using growing to unprecedented levels, organizations are grappling with the challenge of efficiently managing and deriving insights from these vast volumes of structured and unstructured data. What is a Data Lake? Consistency of data throughout the data lake.

DECEMBER 18, 2023

Swami Sivasubramanian, Vice President of AWS Data and Machine Learning. Watch Swami Sivasubramanian, Vice President of Data and AI at AWS, to discover how you can use your company data to build differentiated generative AI applications and accelerate productivity for employees across your organization.

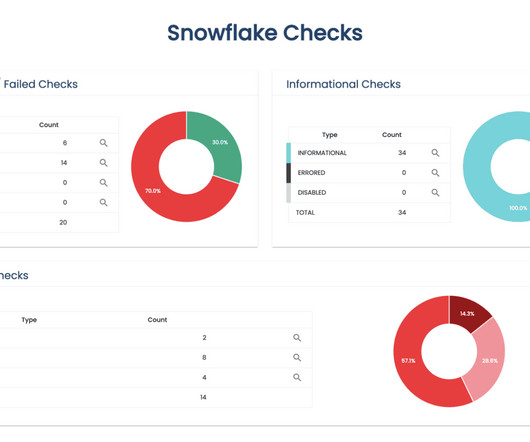

phData

NOVEMBER 8, 2024

Data Versioning and Time Travel: Open table formats empower users with time travel capabilities, allowing them to access previous dataset versions. Note: Cloud data warehouses like Snowflake and BigQuery already have a default time travel feature. It can also be integrated into major data platforms like Snowflake.

Pickl AI

NOVEMBER 4, 2024

Summary: The fundamentals of Data Engineering encompass essential practices like data modelling, warehousing, pipelines, and integration. Understanding these concepts enables professionals to build robust systems that facilitate effective data management and insightful analysis. What is Data Engineering?

Pickl AI

AUGUST 1, 2023

Aspiring and experienced Data Engineers alike can benefit from a curated list of books covering essential concepts and practical techniques. These 10 Best Data Engineering Books for beginners encompass a range of topics, from foundational principles to advanced data processing methods. What is Data Engineering?

phData

SEPTEMBER 20, 2023

Snowpark is the set of libraries and runtimes in Snowflake that securely deploy and process non-SQL code, including Python, Java, and Scala. As a declarative language, SQL is very powerful in allowing users from all backgrounds to ask questions about data. What is Snowflake’s Snowpark? Why Does Snowpark Matter?

ODSC - Open Data Science

JANUARY 18, 2024

Data engineering is a rapidly growing field, and there is a high demand for skilled data engineers. If you are a data scientist, you may be wondering if you can transition into data engineering. In this blog post, we will discuss how you can become a data engineer if you are a data scientist.

ODSC - Open Data Science

APRIL 25, 2025

Evolvability: It's Mostly About Data Contracts. Editor's note: Elliott Cordo is a speaker for ODSC East this May 13-15! Be sure to check out his talk, Enabling Evolutionary Architecture in Data Engineering, there to learn about data contracts and plenty more.

AWS Machine Learning Blog

FEBRUARY 21, 2025

Data exploration and model development were conducted using well-known machine learning (ML) tools such as Jupyter or Apache Zeppelin notebooks. Apache Hive was used to provide a tabular interface to data stored in HDFS, and to integrate with Apache Spark SQL. This also led to a backlog of data that needed to be ingested.

AWS Machine Learning Blog

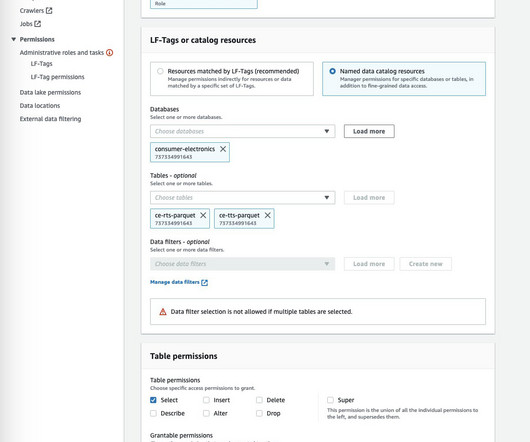

JUNE 5, 2023

Many teams are turning to Athena to enable interactive querying and analyze their data in the respective data stores without creating multiple data copies. Athena allows applications to use standard SQL to query massive amounts of data on an S3 data lake. Create a data lake with Lake Formation.

ODSC - Open Data Science

FEBRUARY 2, 2023

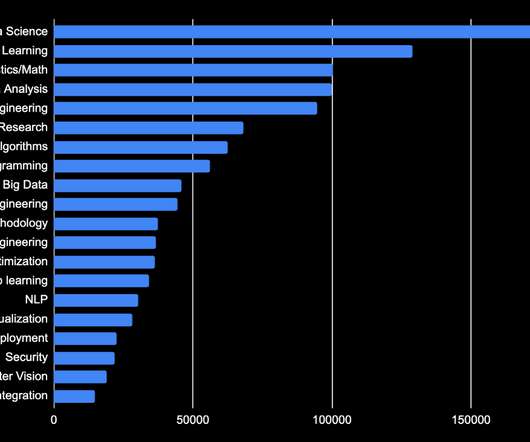

Computer Science and Computer Engineering: Similar to knowing statistics and math, a data scientist should know the fundamentals of computer science as well. While knowing Python, R, and SQL is expected, you’ll need to go beyond that. Big Data: As datasets become larger and more complex, knowing how to work with them will be key.

Analytics Vidhya

OCTOBER 6, 2023

In today’s rapidly evolving digital landscape, seamless integration of data, applications, and devices is more pressing than ever. Enter Microsoft Fabric, a cutting-edge solution designed to revolutionize how we interact with technology.

Analytics Vidhya

MARCH 21, 2023

Introduction: In today’s world, data is growing exponentially over time as a result of digitalization. Organizations are using various cloud platforms like Azure, GCP, etc., to store and analyze this data and get valuable business insights from it.

Snorkel AI

MAY 26, 2023

Ahmad Khan, head of artificial intelligence and machine learning strategy at Snowflake, gave a presentation entitled “Scalable SQL + Python ML Pipelines in the Cloud” about his company’s Snowpark service at Snorkel AI’s Future of Data-Centric AI virtual conference in August 2022. Welcome everybody.

The MLOps Blog

JANUARY 23, 2023

However, there are some key differences that we need to consider. Size and complexity of the data: In machine learning, we are often working with much larger data. Basically, every machine learning project needs data. Given the range of tools and data types, a separate data versioning logic will be necessary.

IBM Journey to AI blog

SEPTEMBER 19, 2023

And you should have experience working with big data platforms such as Hadoop or Apache Spark. Additionally, data science requires experience in SQL database coding and an ability to work with unstructured data of various types, such as video, audio, pictures and text.

ODSC - Open Data Science

APRIL 3, 2023

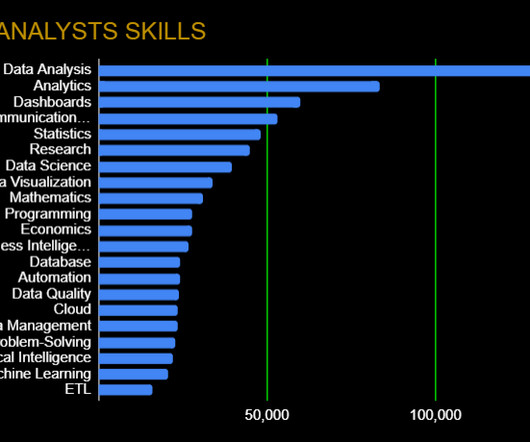

Data analysts often must go out and find their data, process it, clean it, and get it ready for analysis. This pushes into Big Data as well, as many companies now have significant amounts of data and large data lakes that need analyzing. Cloud Services: Google Cloud Platform, AWS, Azure.

IBM Journey to AI blog

JANUARY 5, 2023

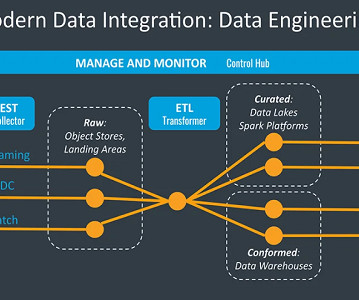

The first generation of data architectures represented by enterprise data warehouse and business intelligence platforms were characterized by thousands of ETL jobs, tables, and reports that only a small group of specialized data engineers understood, resulting in an under-realized positive impact on the business.

ODSC - Open Data Science

MARCH 30, 2023

5 Reasons Why SQL is Still the Most Accessible Language for New Data Scientists: Between its ability to perform data analysis and its ease of use, here are 5 reasons why SQL is still ideal for new data scientists to get into the field. Check a few of them out here.

ODSC - Open Data Science

APRIL 24, 2023

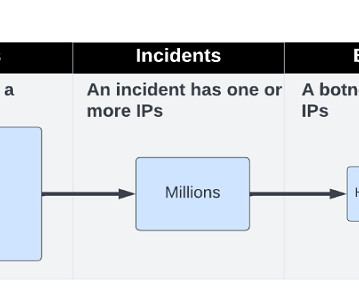

To cluster the data we have to calculate distances between IPs. The number of all possible IP pairs is very large, and we had to solve the scale problem. Data Processing and Clustering: Our data is stored in a Data Lake and we used PrestoDB as a query engine. Query fragment: ... AS ip_1, r.ip ... AND l.ip < r.ip
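
The surviving query fragment (`l.ip < r.ip`) suggests a self-join that lists each unordered IP pair once. The following is only a minimal sketch of that general technique, not the article's actual query: it uses Python's built-in `sqlite3` in place of PrestoDB, and the table name `ips` and the function `unique_ip_pairs` are hypothetical names chosen for illustration.

```python
import sqlite3


def unique_ip_pairs(ips):
    """Return every unordered pair of distinct IPs exactly once.

    Hypothetical helper: SQLite stands in for the PrestoDB engine
    mentioned in the snippet, and the table name `ips` is assumed.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE ips (ip TEXT PRIMARY KEY)")
    # OR IGNORE silently skips duplicate input values.
    conn.executemany(
        "INSERT OR IGNORE INTO ips (ip) VALUES (?)",
        [(ip,) for ip in ips],
    )
    # Joining the table to itself on l.ip < r.ip keeps (a, b) but drops
    # (b, a) and (a, a), so n IPs yield n * (n - 1) / 2 rows instead of n * n.
    rows = conn.execute(
        """
        SELECT l.ip AS ip_1, r.ip AS ip_2
        FROM ips AS l
        JOIN ips AS r
          ON l.ip < r.ip
        ORDER BY ip_1, ip_2
        """
    ).fetchall()
    conn.close()
    return rows


pairs = unique_ip_pairs(["10.0.0.1", "10.0.0.2", "10.0.0.3"])
for ip_1, ip_2 in pairs:
    print(ip_1, ip_2)
```

The comparison here is a plain string comparison, which is enough to deduplicate pairs; it does not order addresses numerically. The pair count still grows quadratically, which is consistent with the scale problem the snippet mentions.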

IBM Journey to AI blog

JULY 17, 2023

Within watsonx.ai, users can take advantage of open-source frameworks like PyTorch, TensorFlow and scikit-learn alongside IBM’s entire machine learning and data science toolkit and its ecosystem tools for code-based and visual data science capabilities.

phData

DECEMBER 4, 2023

If you answer “yes” to any of these questions, you will need cloud storage, such as Amazon AWS’s S3, Azure Data Lake Storage or GCP’s Google Storage. Copy Into: When loading data into Snowflake, the very first and most important rule to follow is: do not load data with SQL inserts!

DagsHub

OCTOBER 23, 2024

Here’s the structured equivalent of this same data in tabular form: With structured data, you can use query languages like SQL to extract and interpret information. In contrast, such traditional query languages struggle to interpret unstructured data. This text has a lot of information, but it is not structured.

The MLOps Blog

JUNE 27, 2023

Alignment to other tools in the organization’s tech stack: Consider how well the MLOps tool integrates with your existing tools and workflows, such as data sources, data engineering platforms, code repositories, CI/CD pipelines, monitoring systems, etc. This provides end-to-end support for data engineering and MLOps workflows.

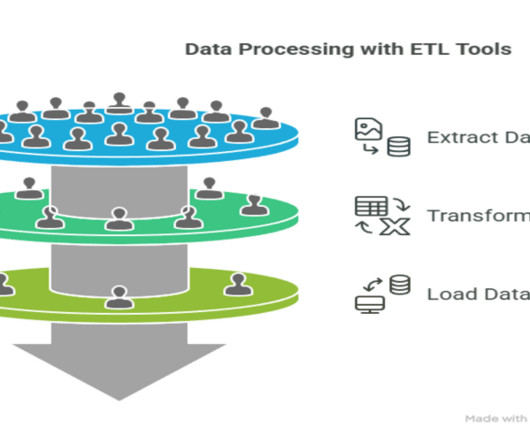

Pickl AI

APRIL 9, 2025

This includes operations like data validation, data cleansing, data aggregation, and data normalization. The goal is to ensure that the data is consistent and ready for analysis. Loading: Storing the transformed data in a target system like a data warehouse, data lake, or even a database.

IBM Journey to AI blog

MARCH 14, 2024

With newfound support for open formats such as Parquet and Apache Iceberg, Netezza enables data engineers, data scientists and data analysts to share data and run complex workloads without duplicating or performing additional ETL.

Alation

JULY 19, 2022

Increasingly, data catalogs not only provide the location of data assets, but also the means to retrieve them. A data scientist can use the catalog to identify several assets that might be relevant to their question and then use a built-in SQL query editor, like Compose, to access the data.

Mlearning.ai

FEBRUARY 16, 2023

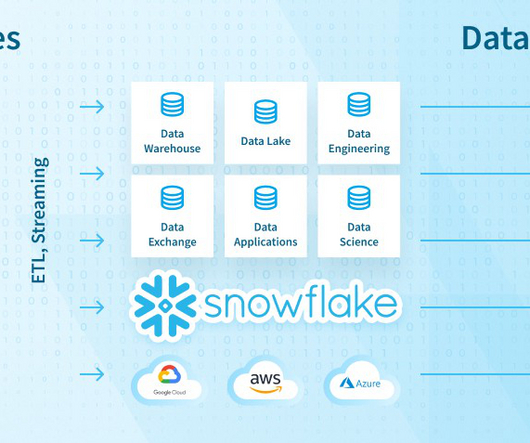

Thus, the solution allows for scaling data workloads independently from one another and seamlessly handling data warehousing, data lakes, data sharing, and engineering. Data warehousing is a vital constituent of any business intelligence operation.

phData

FEBRUARY 24, 2023

Organizations can unite their siloed data and securely share governed data while executing diverse analytic workloads. Snowflake’s engine provides a solution for data warehousing, data lakes, data engineering, data science, data application development, and data sharing.

Mlearning.ai

JULY 10, 2023

Without partitioning, daily data activities will cost your company a fortune, and a point will come when the cost advantage of GCP BigQuery becomes questionable. To create a Scheduled Query, the initial step is to ensure your SQL is accurately entered in the Query Editor.

Pickl AI

SEPTEMBER 5, 2024

Storage Solutions: Secure and scalable storage options like Azure Blob Storage and Azure Data Lake Storage. Key features and benefits of Azure for Data Science include: Scalability: Easily scale resources up or down based on demand, ideal for handling large datasets and complex computations.
