What Orchestration Tools Help Data Engineers in Snowflake

phData

AUGUST 17, 2023

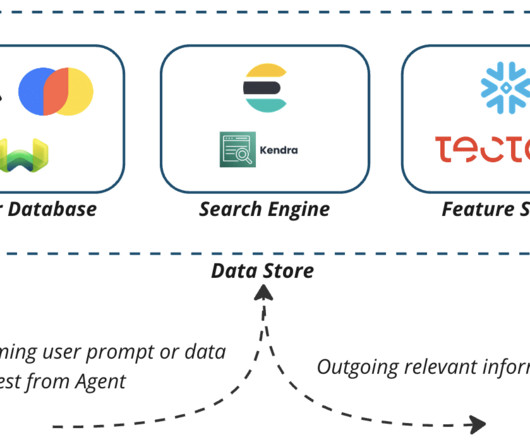

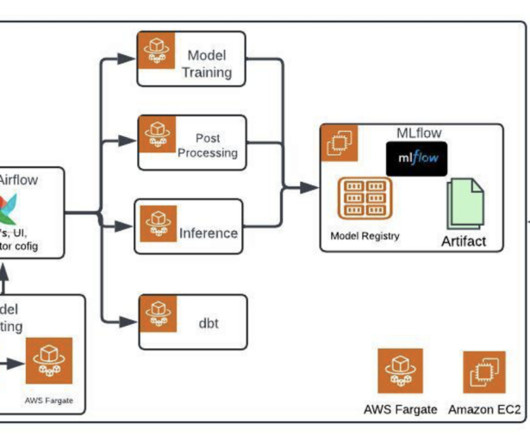

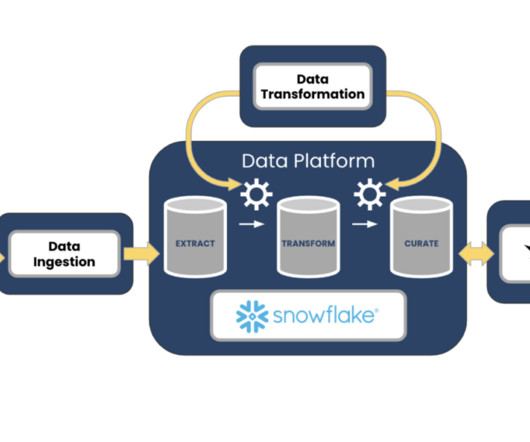

In the rapidly evolving landscape of data engineering, Snowflake Data Cloud has emerged as a leading cloud-based data warehousing solution, providing powerful capabilities for storing, processing, and analyzing vast amounts of data.

What are Orchestration Tools?
