Data Preparation with SQL Cheatsheet

KDnuggets

JUNE 27, 2022

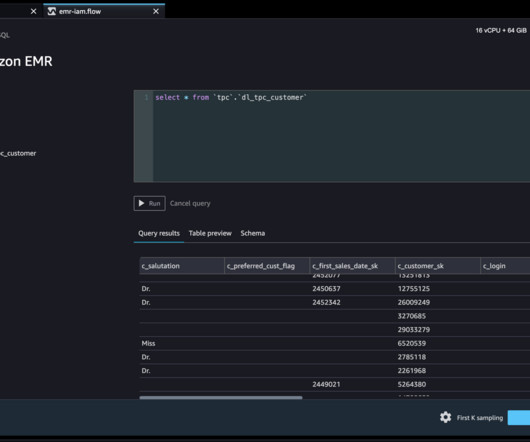

If your raw data is in a SQL-based data lake, why spend the time and money to export the data into a new platform for data prep?

Data Science Dojo

JANUARY 12, 2023

When it comes to data, there are two main types: data lakes and data warehouses. What is a data lake? An enormous amount of raw data is stored in its original format in a data lake until it is required for analytics applications. Which one is right for your business?

Dataconomy

MARCH 4, 2025

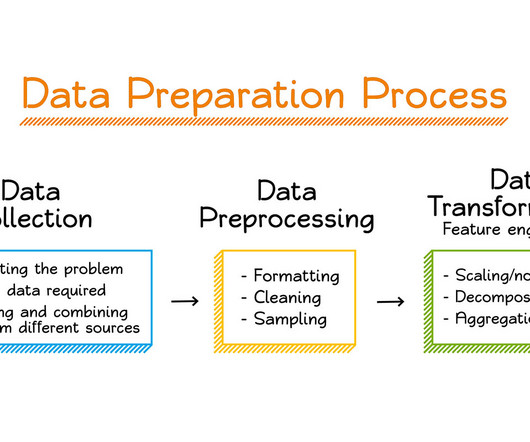

The data mining process is structured into four primary stages: data gathering, data preparation, data mining, and data analysis and interpretation. Each stage is crucial for deriving meaningful insights from data.

Data Science Dojo

SEPTEMBER 11, 2024

With this full-fledged solution, you don’t have to spend all your time and effort combining different services or duplicating data. Overview of OneLake: Fabric features a lake-centric architecture, with a central repository known as OneLake.

DagsHub

FEBRUARY 29, 2024

Data is, therefore, essential to the quality and performance of machine learning models. This makes data preparation for machine learning all the more critical, so that the models generate reliable and accurate predictions and drive business value for the organization. Why do you need Data Preparation for Machine Learning?

AWS Machine Learning Blog

SEPTEMBER 27, 2024

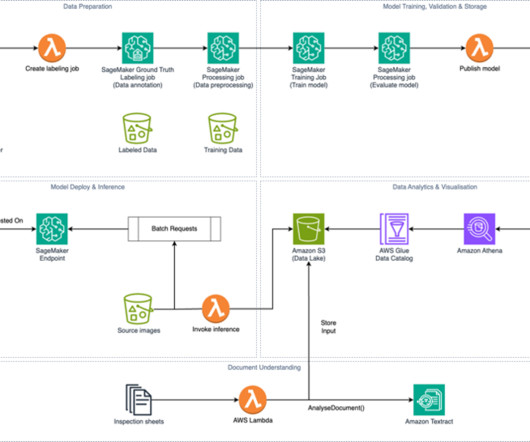

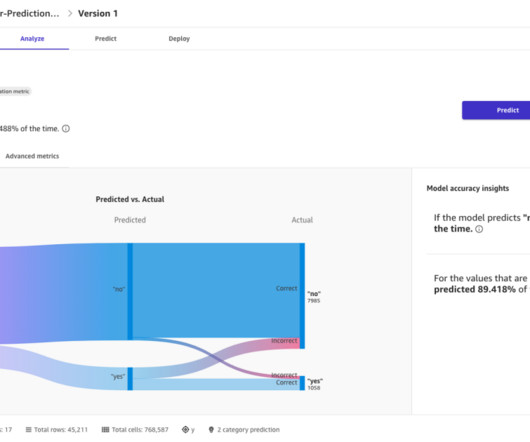

Data preparation: SageMaker Ground Truth employs a human workforce made up of Northpower volunteers to annotate a set of 10,000 images. The model was then fine-tuned with training data from the data preparation stage. The sunburst graph below is a visualization of this classification.

DECEMBER 11, 2024

Many of these applications are complex to build because they require collaboration across teams and the integration of data, tools, and services. Data engineers use data warehouses, data lakes, and analytics tools to load, transform, clean, and aggregate data. Big Data Architect.

AWS Machine Learning Blog

AUGUST 15, 2024

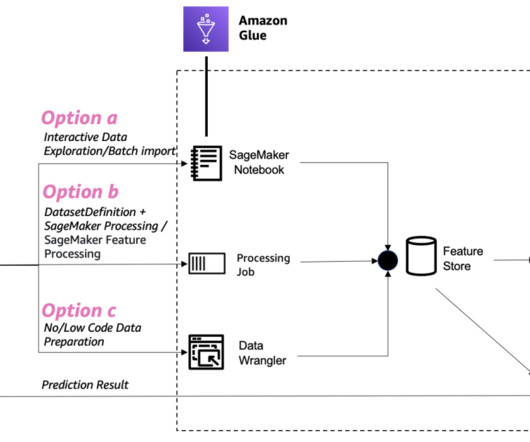

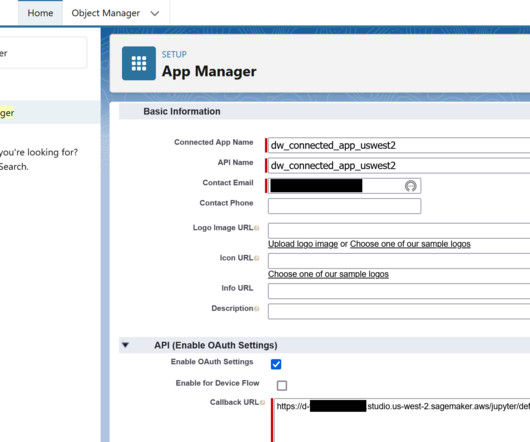

Importing data from the SageMaker Data Wrangler flow allows you to interact with a sample of the data before scaling the data preparation flow to the full dataset. This improves time and performance because you don’t need to work with the entirety of the data during preparation.

AWS Machine Learning Blog

AUGUST 21, 2024

Amazon DataZone is a data management service that makes it quick and convenient to catalog, discover, share, and govern data stored in AWS, on-premises, and third-party sources. The data lake environment is required to configure an AWS Glue database table, which is used to publish an asset in the Amazon DataZone catalog.

AWS Machine Learning Blog

AUGUST 21, 2023

You can streamline the process of feature engineering and data preparation with SageMaker Data Wrangler and finish each stage of the data preparation workflow (including data selection, purification, exploration, visualization, and processing at scale) within a single visual interface.

AWS Machine Learning Blog

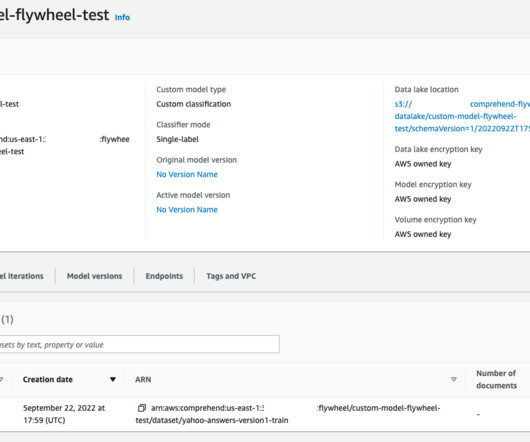

MARCH 1, 2023

Flywheel creates a data lake (in Amazon S3) in your account where all the training and test data for all versions of the model are managed and stored. Periodically, the new labeled data (to retrain the model) can be made available to flywheel by creating datasets. The data can be accessed from AWS Open Data Registry.

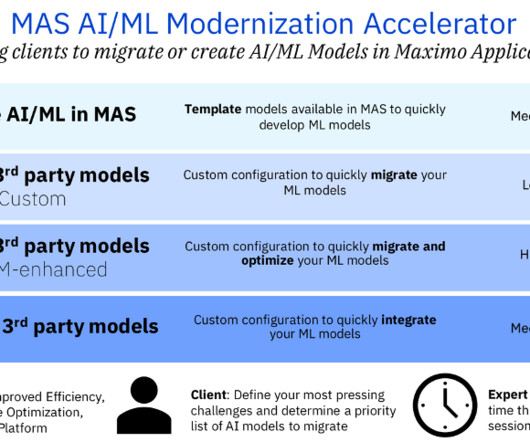

IBM Data Science in Practice

JANUARY 9, 2024

In our scenario, the data is stored in the Cloud Object Storage in Watson Studio. However, in a real use case you could receive this data from third-party databases, which could be connected directly to IoT Platform. Step 2: MAS Asset/Device Registration. Step 2 is crucial for storing information such as failure history and installation dates.

IBM Journey to AI blog

DECEMBER 7, 2023

Increased operational efficiency benefits. Reduced data preparation time: OLAP data preparation capabilities streamline data analysis processes, saving time and resources.

AWS Machine Learning Blog

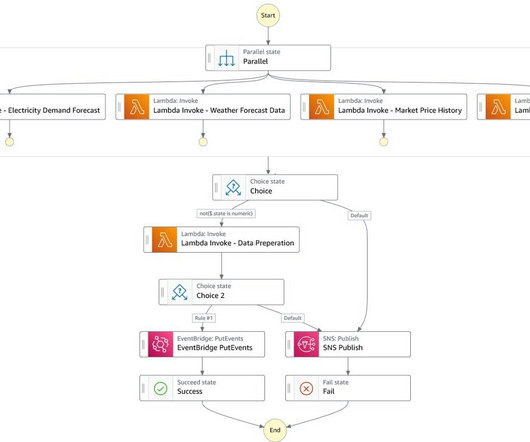

SEPTEMBER 1, 2023

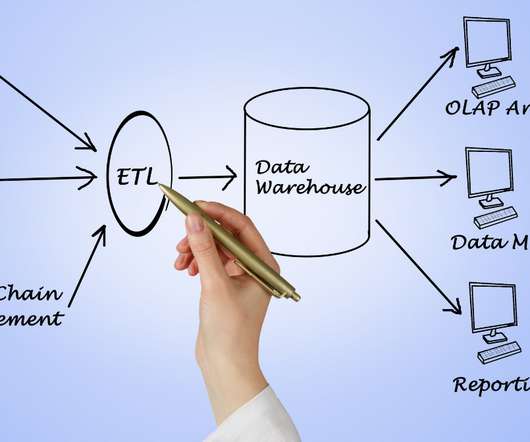

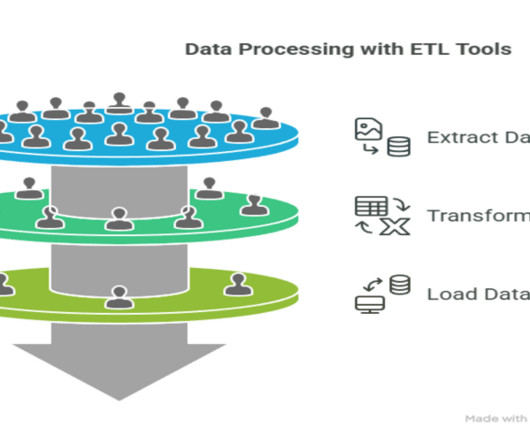

These teams are as follows: Advanced analytics team (data lake and data mesh) – Data engineers are responsible for preparing and ingesting data from multiple sources, building ETL (extract, transform, and load) pipelines to curate and catalog the data, and preparing the necessary historical data for the ML use cases.

AWS Machine Learning Blog

MARCH 8, 2023

Data collection and ingestion: The data collection and ingestion layer connects to all upstream data sources and loads the data into the data lake. Therefore, the ingestion components need to be able to manage authentication, data sourcing in pull mode, data preprocessing, and data storage.

Tableau

APRIL 18, 2022

Shine a light on who or what is using specific data to speed up collaboration or reduce disruption when changes happen. Data modeling. Leverage semantic layers and physical layers to give you more options for combining data using schemas to fit your analysis. Data preparation. Data integration.

Pickl AI

FEBRUARY 21, 2023

The data locations may come from the data warehouse or data lake with structured and unstructured data. The Data Scientist’s responsibility is to move the data to a data lake or warehouse for the different data mining processes. are the various data mining tools.

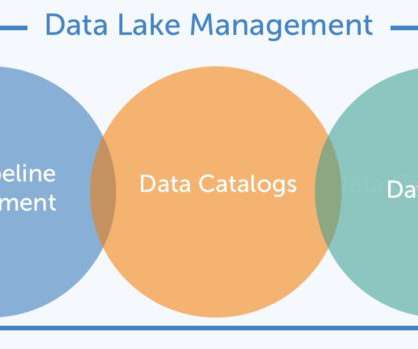

Alation

FEBRUARY 13, 2020

Figure 1 illustrates the typical metadata subjects contained in a data catalog. Figure 1 – Data Catalog Metadata Subjects. Datasets are the files and tables that data workers need to find and access. They may reside in a data lake, warehouse, master data repository, or any other shared data resource.

ODSC - Open Data Science

JUNE 12, 2023

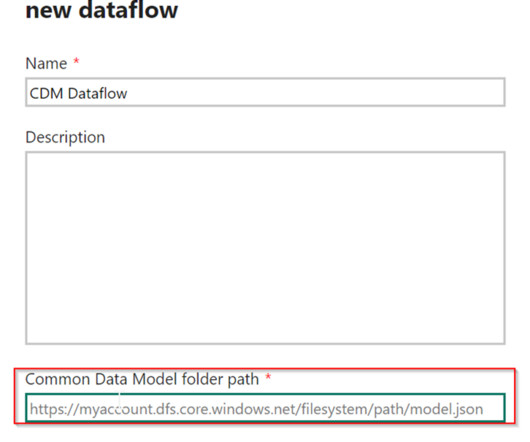

No-code/low-code experience using a diagram view in the data preparation layer similar to Dataflows. Building business-focussed semantic layers in the cloud (the Power BI Service) with data modeling capabilities, such as managing relationships, creating measures, defining incremental refresh, and creating and managing RLS.

DataRobot Blog

OCTOBER 3, 2017

This highlights the two companies’ shared vision on self-service data discovery with an emphasis on collaboration and data governance. 2) When data becomes information, many (incremental) use cases surface.

Alation

SEPTEMBER 23, 2021

Data Catalogs for Data Science & Engineering – Data catalogs that are primarily used for data science and engineering are typically used by very experienced data practitioners. It also catalogs datasets and operations that include data preparation features and functions.

AWS Machine Learning Blog

JUNE 18, 2024

The output data is transformed to a standardized format and stored in a single location in Amazon S3 in Parquet format, a columnar and efficient storage format. With AWS Glue custom connectors, it’s effortless to transfer data between Amazon S3 and other applications.

phData

SEPTEMBER 28, 2023

Dataflows represent a cloud-based technology designed for data preparation and transformation purposes. Dataflows have different connectors to retrieve data, including databases, Excel files, APIs, and other similar sources, along with data manipulations that are performed using Online Power Query Editor.

Alation

FEBRUARY 20, 2020

Data lakes, while useful in helping you to capture all of your data, are only the first step in extracting the value of that data. We recently announced an integration with Trifacta to seamlessly integrate the Alation Data Catalog with self-service data prep applications to help you solve this issue.

Precisely

JULY 18, 2024

Without access to all critical and relevant data, the data that emerges from a data fabric will have gaps that delay business insights required to innovate, mitigate risk, or improve operational efficiencies. You must be able to continuously catalog, profile, and identify the most frequently used data.

AUGUST 17, 2023

Amazon Redshift uses SQL to analyze structured and semi-structured data across data warehouses, operational databases, and data lakes, using AWS-designed hardware and ML to deliver the best price-performance at any scale. If you want to do the process in a low-code/no-code way, you can follow option C.

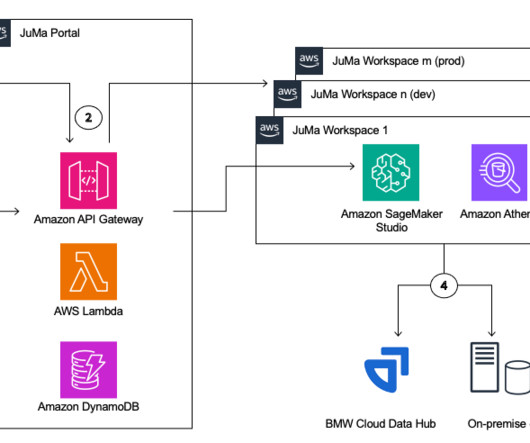

NOVEMBER 24, 2023

JuMa is tightly integrated with a range of BMW Central IT services, including identity and access management, roles and rights management, BMW Cloud Data Hub (BMW’s data lake on AWS) and on-premises databases.

IBM Journey to AI blog

MARCH 14, 2024

Whether it’s for ad hoc analytics, data transformation, data sharing, data lake modernization or ML and gen AI, you have the flexibility to choose. Integrated solutions for zero-ETL data preparation: IBM databases on AWS offer integrated solutions that eliminate the need for ETL processes in data preparation for AI.

IBM Journey to AI blog

MAY 9, 2023

It offers its users advanced machine learning, data management, and generative AI capabilities to train, validate, tune and deploy AI systems across the business with speed, trusted data, and governance. It helps facilitate the entire data and AI lifecycle, from data preparation to model development, deployment and monitoring.

Alation

FEBRUARY 20, 2020

Even something like gamification may emerge as a way to fully engage data shoppers as a community. Behind the scenes, “backroom services” will power the storefront, performing such tasks as data acquisition, data preparation, data curation and cataloging, and tracking. Building the EDM.

ODSC - Open Data Science

NOVEMBER 19, 2024

Despite the rise of big data technologies and cloud computing, the principles of dimensional modeling remain relevant. This session delved into how these traditional techniques have adapted to data lakes and real-time analytics, emphasizing their enduring importance for building scalable, efficient data systems.

IBM Journey to AI blog

JULY 17, 2023

Visual modeling: Delivers easy-to-use workflows for data scientists to build data preparation and predictive machine learning pipelines that include text analytics, visualizations and a variety of modeling methods.

AWS Machine Learning Blog

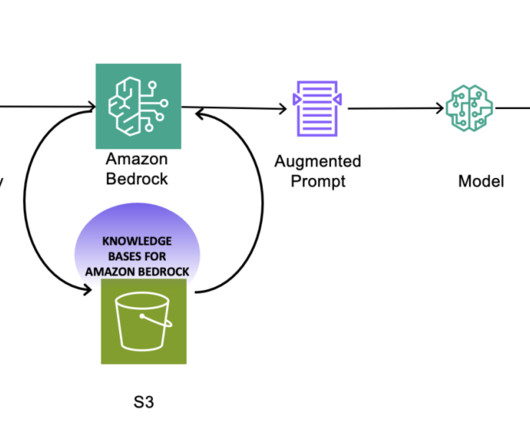

MAY 31, 2024

Data preparation: Before creating a knowledge base using Knowledge Bases for Amazon Bedrock, it’s essential to prepare the data to augment the FM in a RAG implementation. Krishna Prasad is a Senior Solutions Architect in Strategic Accounts Solutions Architecture team at AWS.

AWS Machine Learning Blog

AUGUST 4, 2023

Train a recommendation model in SageMaker Studio using training data that was prepared using SageMaker Data Wrangler. The real-time inference call data is first passed to the SageMaker Data Wrangler container in the inference pipeline, where it is preprocessed and passed to the trained model for product recommendation.

AWS Machine Learning Blog

JUNE 22, 2023

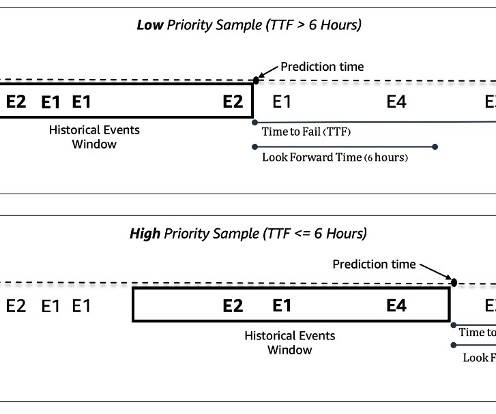

In LnW Connect, an encryption process was designed to provide a secure and reliable mechanism for the data to be brought into an AWS data lake for predictive modeling. Data preprocessing and feature engineering: In this section, we discuss our methods for data preparation and feature engineering.

Pickl AI

APRIL 9, 2025

This includes operations like data validation, data cleansing, data aggregation, and data normalization. The goal is to ensure that the data is consistent and ready for analysis. Loading: Storing the transformed data in a target system like a data warehouse, data lake, or even a database.
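The transform and load steps named in this excerpt can be sketched in a few lines of Python. This is a minimal illustration, not a method taken from the article. It assumes a hypothetical list of raw sales records, with an in-memory SQLite database standing in for the target system. The field names (`region`, `amount`) and the `sales_by_region` table are invented for the example.

```python
import sqlite3

# Hypothetical raw records; the field names are assumptions for illustration.
RAW_ROWS = [
    {"region": " North ", "amount": "120.50"},
    {"region": "north", "amount": "79.50"},
    {"region": "South", "amount": "not-a-number"},  # fails validation
    {"region": "", "amount": "10"},                 # fails validation
    {"region": "SOUTH", "amount": "40"},
]


def transform(rows):
    """Validate, cleanse, normalize, and aggregate raw rows."""
    totals = {}
    for row in rows:
        # Cleansing and normalization: trim whitespace, unify letter case.
        region = row["region"].strip().lower()
        # Validation: skip rows whose amount is not numeric.
        try:
            amount = float(row["amount"])
        except ValueError:
            continue
        # Validation: skip rows with no region.
        if not region:
            continue
        # Aggregation: sum amounts per region.
        totals[region] = totals.get(region, 0.0) + amount
    return sorted(totals.items())


def load(conn, aggregated):
    """Store the transformed rows in the (hypothetical) target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales_by_region "
        "(region TEXT PRIMARY KEY, total REAL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO sales_by_region (region, total) VALUES (?, ?)",
        aggregated,
    )
    conn.commit()


def run_pipeline(rows):
    """Run transform and load, then read the target table back."""
    conn = sqlite3.connect(":memory:")  # stand-in for a warehouse or lake
    load(conn, transform(rows))
    result = conn.execute(
        "SELECT region, total FROM sales_by_region ORDER BY region"
    ).fetchall()
    conn.close()
    return result
```

Calling `run_pipeline(RAW_ROWS)` drops the two invalid rows and returns one aggregated total per normalized region. In a real pipeline the SQLite connection would be replaced by a connection to the actual warehouse or lake.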

Pickl AI

NOVEMBER 4, 2024

Role of Data Engineers in the Data Ecosystem: Data Engineers play a crucial role in the data ecosystem by bridging the gap between raw data and actionable insights. They are responsible for building and maintaining data architectures, which include databases, data warehouses, and data lakes.

Alation

FEBRUARY 13, 2020

Data Literacy—Many line-of-business people have responsibilities that depend on data analysis but have not been trained to work with data. Their tendency is to do just enough data work to get by, and to do that work primarily in Excel spreadsheets. Who needs data literacy training? Who can provide the training?

Pickl AI

AUGUST 1, 2023

Key Components of Data Engineering. Data Ingestion: Gathering data from various sources, such as databases, APIs, files, and streaming platforms, and bringing it into the data infrastructure. Data Processing: Performing computations, aggregations, and other data operations to generate valuable insights from the data.

The MLOps Blog

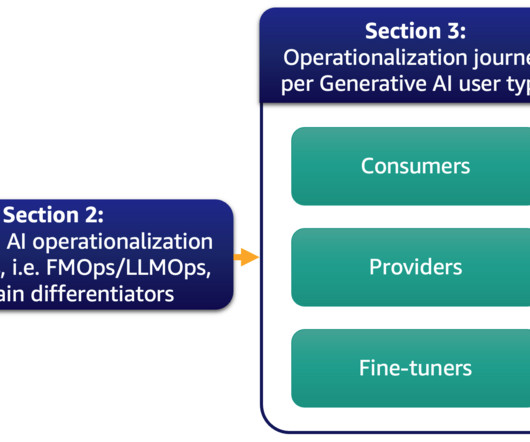

JUNE 27, 2023

See also Thoughtworks’s guide to Evaluating MLOps Platforms. End-to-end MLOps platforms: End-to-end MLOps platforms provide a unified ecosystem that streamlines the entire ML workflow, from data preparation and model development to deployment and monitoring.

AWS Machine Learning Blog

NOVEMBER 22, 2023

Mai-Lan Tomsen Bukovec, Vice President, Technology | AIM250-INT | Putting your data to work with generative AI Thursday November 30 | 12:30 PM – 1:30 PM (PST) | Venetian | Level 5 | Palazzo Ballroom B How can you turn your data lake into a business advantage with generative AI?

phData

FEBRUARY 24, 2023

Alteryx provides organizations with an opportunity to automate access to data, analytics, data science, and process automation all in one, end-to-end platform. Its capabilities can be split into the following topics: automating inputs & outputs, data preparation, data enrichment, and data science.

Pickl AI

OCTOBER 10, 2024

Informatica’s AI-powered automation helps streamline data pipelines and improve operational efficiency. Common use cases include integrating data across hybrid cloud environments, managing data lakes, and enabling real-time analytics for Business Intelligence platforms.
