Discover the Most Important Fundamentals of Data Engineering

Pickl AI

NOVEMBER 4, 2024

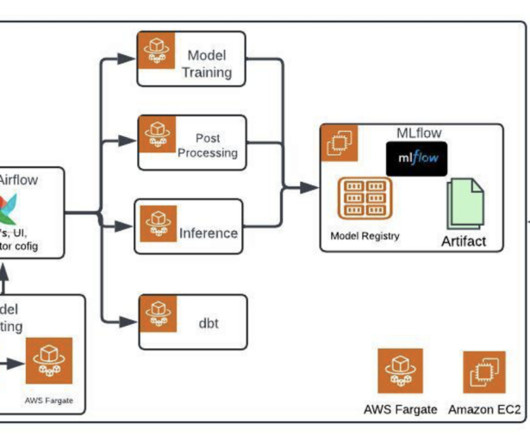

Summary: The fundamentals of Data Engineering encompass essential practices like data modelling, warehousing, pipelines, and integration. Understanding these concepts enables professionals to build robust systems that facilitate effective data management and insightful analysis.

What is Data Engineering?
