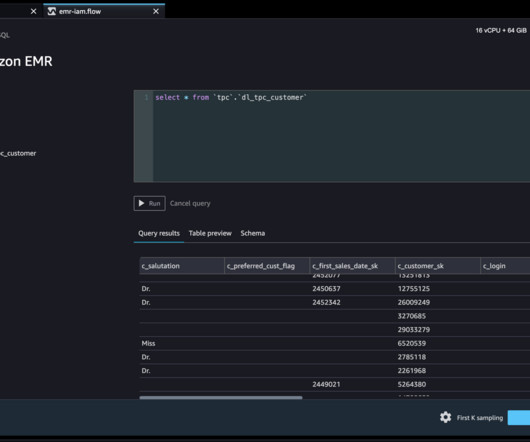

Let Tableau Write Your SQL for You

Tableau

AUGUST 29, 2024

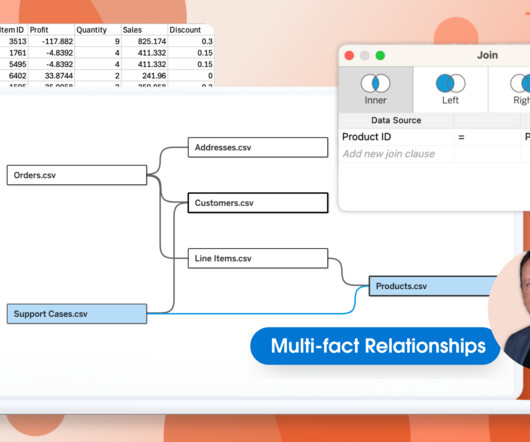

Spencer Czapiewski, August 29, 2024, 9:52pm

Kirk Munroe, Chief Analytics Officer and Founding Partner at Paint with Data and Tableau DataDev Ambassador, explains the value of using relationships in your Tableau data models.
