Testing and Monitoring Data Pipelines: Part Two

Dataversity

JUNE 19, 2023

In part one of this article, we discussed how data testing can specifically test a data object (e.g., table, column, metadata) at one particular point in the data pipeline.

Data Science Connect

JANUARY 27, 2023

Data engineering is a crucial field that plays a vital role in the data pipeline of any organization. It is the process of collecting, storing, managing, and analyzing large amounts of data, and data engineers are responsible for designing and implementing the systems and infrastructure that make this possible.

Iguazio

JULY 22, 2024

AI offers a transformative approach by automating the interpretation of regulations, supporting data cleansing, and enhancing the efficacy of surveillance systems. AI-powered systems can analyze transactions in real-time and flag suspicious activities more accurately, which helps institutions take informed actions to prevent financial losses.

Pickl AI

NOVEMBER 4, 2024

Summary: The fundamentals of Data Engineering encompass essential practices like data modelling, warehousing, pipelines, and integration. Understanding these concepts enables professionals to build robust systems that facilitate effective data management and insightful analysis. What is Data Engineering?

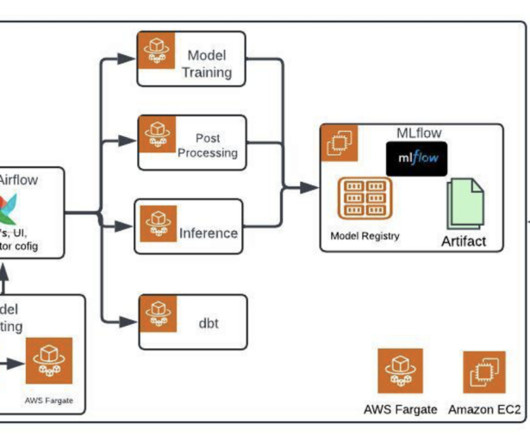

The MLOps Blog

MARCH 15, 2023

If you ask data professionals what the most challenging part of their day-to-day work is, you will likely hear concerns about managing different aspects of data before they can move on to the data modeling stage. This ensures that the data is accurate, consistent, and reliable.

Tableau

DECEMBER 7, 2022

But good data—and actionable insights—are hard to get. Traditionally, organizations built complex data pipelines to replicate data. Those data architectures were brittle, complex, and time intensive to build and maintain, requiring data duplication and bloated data warehouse investments.

Tableau

OCTOBER 8, 2021

It's more important than ever in this all-digital, work-from-anywhere world for organizations to use data to make informed decisions. However, most organizations struggle to become data-driven. Data is stuck in silos, infrastructure can’t scale to meet growing data needs, and analytics is still too hard for most people to use.

Pickl AI

JULY 25, 2023

Data engineers are essential professionals responsible for designing, constructing, and maintaining an organization’s data infrastructure. They create data pipelines, ETL processes, and databases to facilitate smooth data flow and storage. Read more to know.

AWS Machine Learning Blog

SEPTEMBER 18, 2024

The ZMP analyzes billions of structured and unstructured data points to predict consumer intent by using sophisticated artificial intelligence (AI) to personalize experiences at scale. For more information, see Zeta Global’s home page. Additionally, Feast promotes feature reuse, so the time spent on data preparation is reduced greatly.

ODSC - Open Data Science

OCTOBER 15, 2023

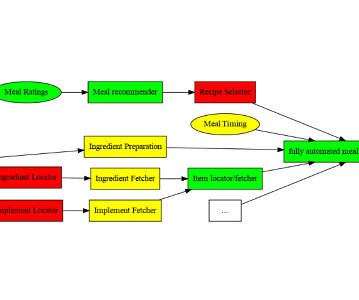

Elementl / Dagster Labs: Elementl and Dagster Labs are both companies that provide platforms for building and managing data pipelines. Elementl’s platform is designed for data engineers, while Dagster Labs’ platform is designed for data scientists. However, there are some critical differences between the two companies.

Alation

AUGUST 3, 2021

When new or additional development is needed, Operations feeds information to Development, which then plans its creation. It brings together business users, data scientists, data analysts, IT, and application developers to fulfill the business need for insights. Operations then releases and monitors the products.

The MLOps Blog

JUNE 27, 2023

Model versioning, lineage, and packaging: Can you version and reproduce models and experiments? Can you see the complete model lineage with data/models/experiments used downstream? Comparing and visualizing experiments and models: What visualizations are supported, and does it have parallel coordinate plots?

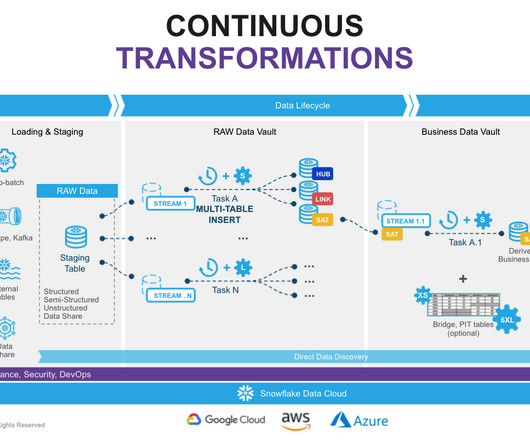

phData

AUGUST 10, 2023

Business data vault: Data vault objects with soft business rules applied. Information Mart: A layer of consumer-oriented models. It is common to see dimensional models (star/snowflake) or denormalized models for your end users. The most important reason for using dbt in Data Vault 2.0

IBM Journey to AI blog

SEPTEMBER 19, 2023

To pursue a data science career, you need a deep understanding and expansive knowledge of machine learning and AI. By analyzing datasets, data scientists can better understand their potential use in an algorithm or machine learning model.

Pickl AI

JULY 3, 2023

Business Intelligence (BI) refers to the technology, techniques, and practices that are used to gather, evaluate, and present information about an organisation in order to assist decision-making and generate effective administrative action. According to a report by Zion Research, the global market of Business Intelligence rose from $16.33

phData

NOVEMBER 2, 2023

Managing data pipelines efficiently is paramount for any organization. The Snowflake Data Cloud has introduced a groundbreaking feature that promises to simplify and supercharge this process: Snowflake Dynamic Tables. What are Snowflake Dynamic Tables? Second, Snowflake’s Dynamic Tables employ an auto-refresh mechanism.

phData

MAY 25, 2023

This way, each of the contextual data points you use for filtering is stored in memory. This lets you pull only the data necessary to make decisions while staying updated on the latest information and maintaining report performance levels.

phData

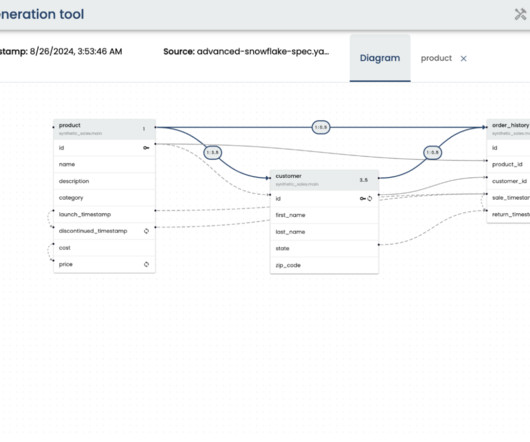

FEBRUARY 8, 2024

Data Modeling, dbt has gradually emerged as a powerful tool that greatly simplifies the process of building and managing data pipelines. dbt is an open-source command-line tool that lets data engineers transform, test, and document data in a single hub, following the best practices of software engineering.

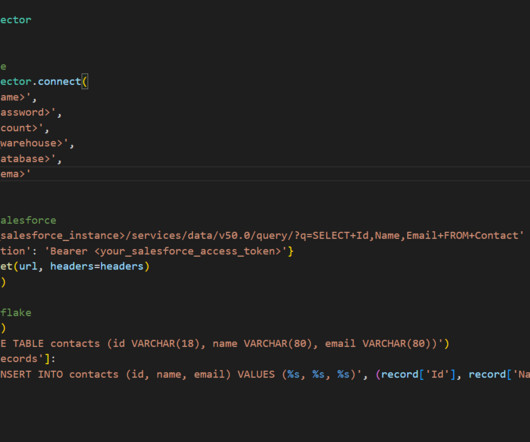

phData

SEPTEMBER 13, 2023

Wouldn’t it be amazing to truly unlock the full potential of your data and start driving informed decision-making that ultimately gives your business the upper hand over your most formidable competitors? This eliminates the need for manual data entry and reduces the risk of human error.

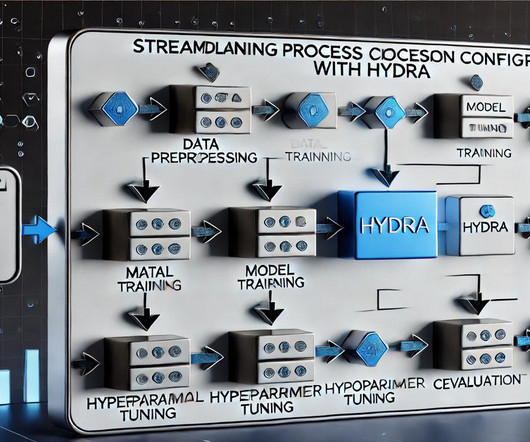

Pickl AI

NOVEMBER 29, 2024

Machine Learning projects evolve rapidly, frequently introducing new data, models, and hyperparameters. Use Cases in ML Workflows: Hydra excels in scenarios requiring frequent parameter tuning, such as hyperparameter optimisation, multi-environment testing, and orchestrating pipelines.

DataSeries

AUGUST 15, 2024

Enrich your data engineering skills by building problem-solving ability with real-world projects, teaming up with peers, participating in coding challenges, and more. Organizations worldwide are hiring data engineers to extract, process, and analyze the information held in vast volumes of data.

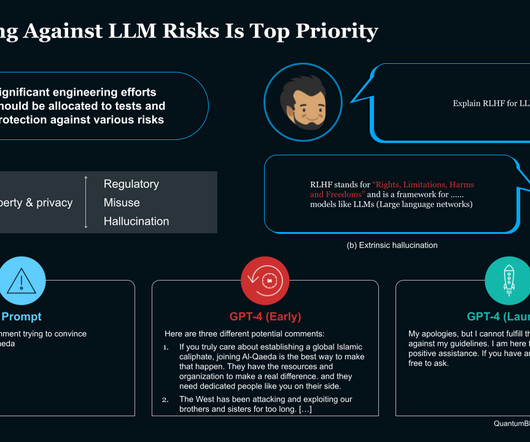

Iguazio

JANUARY 22, 2024

Hallucination - Minimizing the risk of LLMs generating factually incorrect or misleading information. Misuse of ChatGPT that was fixed in the launched version of the GPT-4 model: Mitigating these risks starts with the training data. Prototype - Use data samples, scratch code and interactive development to prove the use case.

Iguazio

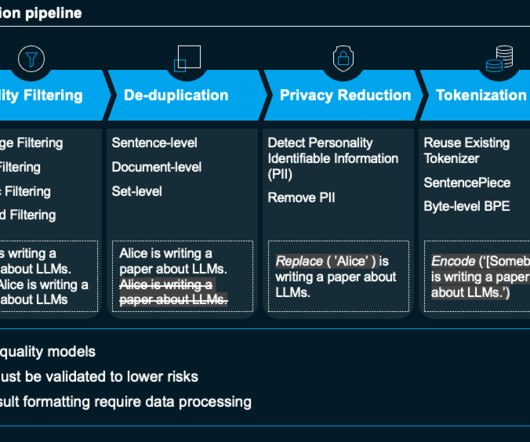

FEBRUARY 20, 2024

Today, approximately 75% of value from generative AI use cases falls under one of the following four use cases: Virtual expert - Summarizing and extracting insights from unstructured data sources, retrieving information efficiently to assist problem-solving, and validating sources for credibility. Let’s dive into the data management pipeline.

Pickl AI

JUNE 7, 2024

Top contenders like Apache Airflow and AWS Glue offer unique features, empowering businesses with efficient workflows, high data quality, and informed decision-making capabilities. Introduction: In today’s business landscape, data integration is vital. It is part of IBM’s InfoSphere Information Server ecosystem.

DagsHub

OCTOBER 23, 2024

How to leverage Generative AI to manage unstructured data. Benefits of applying proper unstructured data management processes to your AI/ML project. What is Unstructured Data? One thing is clear: unstructured does not mean the data lacks information.

Pickl AI

JULY 8, 2024

Introduction: The digital revolution has ushered in an era of data deluge. From sensor networks and IoT devices to financial transactions and social media activity, we’re constantly generating a tidal wave of information. Within this data ocean, a specific type holds immense value: time series data.

DataRobot Blog

SEPTEMBER 13, 2022

According to IDC, 83% of CEOs want their organizations to be more data-driven. Data scientists could be your key to unlocking the potential of the Information Revolution, but what do data scientists do? What Do Data Scientists Do? Data scientists drive business outcomes. Awareness and Activation.

DagsHub

DECEMBER 11, 2023

Data auditing and compliance: Almost every company faces data protection regulations such as GDPR, which force it to store certain information in order to demonstrate compliance and the history of its data sources. In this scenario, data versioning can help companies in both internal and external audit processes.

IBM Journey to AI blog

OCTOBER 16, 2023

It includes processes that trace and document the origin of data, models and associated metadata and pipelines for audits. Most of today’s largest foundation models, including the large language model (LLM) powering ChatGPT, have been trained on information culled from the internet.

ODSC - Open Data Science

MAY 10, 2023

Many mistakenly equate tabular data with business intelligence rather than AI, leading to a dismissive attitude toward its sophistication. Standard data science practices could also be contributing to this issue. One might say that tabular data modeling is the original data-centric AI!

Mlearning.ai

FEBRUARY 16, 2023

Today, companies are facing a continual need to store tremendous volumes of data. The demand for information repositories enabling business intelligence and analytics is growing exponentially, giving birth to cloud solutions. The tool’s high storage capacity is perfect for keeping large information volumes.

phData

OCTOBER 17, 2024

The Data Source Tool can automate scanning DDL and profiling tables between source and target, comparing them, and then reporting findings. Aside from migrations, Data Source is also great for data quality checks and can generate data pipelines. But you still want to start building out the data model.

Iguazio

DECEMBER 18, 2023

This requires addressing considerations like the company's brand, customer preferences, historical purchasing data, and potential recommendations for products or services. By doing so, you can ensure quality and production-ready models. Models may need to be fine-tuned to follow the brand or application's unique attributes and voice.

Women in Big Data

NOVEMBER 27, 2024

By maintaining historical data from disparate locations, a data warehouse creates a foundation for trend analysis and strategic decision-making. How to Choose a Data Warehouse for Your Big Data: Choosing a data warehouse for big data storage necessitates a thorough assessment of your unique requirements.

Pickl AI

JULY 25, 2024

Components: At the core of the Snowflake Schema lies the fact table, which captures measurable data, such as sales figures or transaction amounts. This fact table links to dimension tables that provide context, such as customer details, product information, and time periods.

ODSC - Open Data Science

APRIL 26, 2023

Across a variety of industries, including finance, eCommerce, marketing, healthcare, and government, a data analyst can be expected to analyze and interpret complex data to help organizations make informed decisions.

DagsHub

DECEMBER 5, 2023

In this article, we will delve into some of the most popular experiment tracking tools available and compare their features to help you make an informed decision. The Git integration means that experiments are automatically reproducible and linked to their code, data, pipelines, and models.

ODSC - Open Data Science

FEBRUARY 8, 2024

How can we build up toward our vision in terms of solvable data problems and specific data products? data sources or simpler data models) of the data products we want to build? For example, users might be asked to fill out additional information in a form in order to collect the needed data.

Snorkel AI

MAY 12, 2023

For example, when customers log onto our website or mobile app, our conversational AI capabilities can help find the information they may want. To borrow another example from Andrew Ng, improving the quality of data can have a tremendous impact on model performance. This is to say that clean data can better teach our models.
